GPT-5.2 Outshines Claude Opus in Browser-Building Challenge

AI Showdown: GPT-5.2 Proves Its Engineering Mettle

Building a web browser from scratch isn't just another coding challenge. It's the ultimate test of an AI system's ability to think like an engineer. Recent tests by the AI coding platform Cursor reveal surprising differences between leading AI models when they face this marathon of programming tasks.

The Browser Challenge Breakdown

The experiment went well beyond writing snippets or fixing bugs: over several weeks, the models had to build everything from HTML parsers to JavaScript virtual machines. That meant maintaining logical consistency across millions of lines of code while continually refining designs and managing dependencies.

"We're seeing something new here," explains a Cursor team member who worked on the tests. "It's not just about coding skill anymore—it's about whether these systems can sustain complex engineering thinking over time."

GPT-5.2 Takes the Lead

OpenAI's latest model impressed researchers with its ability to:

- Maintain focus throughout extended projects

- Correct early design flaws autonomously

- Coordinate between different system components

- Resist "goal drift"—the tendency to wander from original objectives

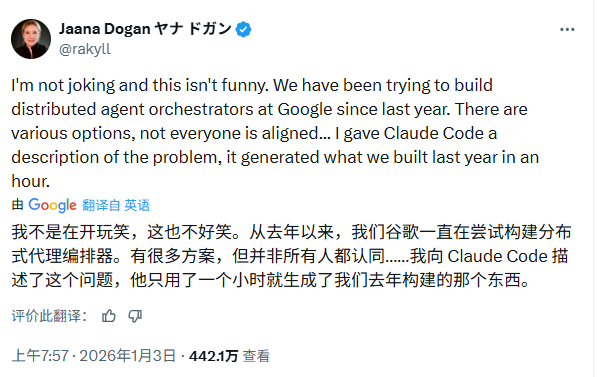

Meanwhile, Anthropic's Claude Opus 4.5, while excellent at short-term tasks, tended to:

- Declare tasks finished prematurely

- Hand control back to human programmers more often

- Struggle to maintain context across long development cycles

The differences became especially clear when GPT-5.2 successfully built a Windows 7 simulator and migrated legacy systems, tasks that traditionally take human teams months to complete.

The implications? We might be entering an era where AI can truly partner with developers rather than just assist them.