SoulX-Podcast AI Model Revolutionizes Long-Form Voice Generation

SoulX-Podcast AI Model Sets New Standard for Voice Generation

The artificial intelligence voice sector has reached a significant milestone with the launch of Soul's SoulX-Podcast model. This specialized solution for podcast-style content generation combines unprecedented duration capabilities with lifelike vocal quality, potentially reshaping audio content creation.

Technical Breakthroughs

The model's most notable achievement is its ability to generate over 90 minutes of continuous dialogue without degradation in quality or stability. This represents a quantum leap from previous AI voice systems typically limited to short demonstrations.

"This stability breakthrough allows creators to produce complete podcast episodes without artificial breaks or quality compromises," explains Dr. Lin Wei, Soul's Chief Technology Officer. "It transitions AI voice from novelty to practical production tool."

Multilingual Capabilities

The system supports:

- Fluent Mandarin-English bilingual generation

- Regional Chinese dialect integration

- Emotionally expressive paralanguage (laughter, sighs)

- Context-aware pauses and intonation

Such features enable creators to develop localized content with authentic cultural nuances previously requiring human voice actors.

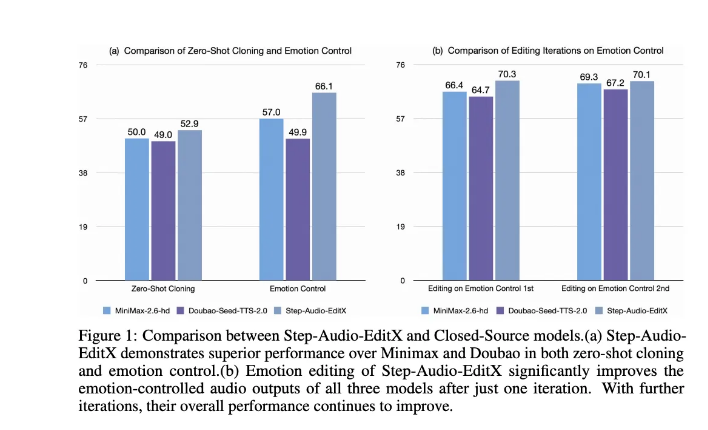

Zero-Shot Voice Cloning Innovation

The model introduces groundbreaking zero-shot cloning technology allowing:

- Instant replication of specific voices without retraining

- Tone and style adaptation from minimal samples

- Seamless switching between cloned voices during generation

"This effectively democratizes celebrity-quality voice work," notes media analyst Sarah Chen. "A small team can now produce content sounding like professional studio recordings."

Industry Impact

The launch is expected to affect multiple sectors:

| Sector | Potential Impact |

|---|

The open-source release (available at GitHub) encourages developer community involvement in further refinement.

Key Points:

- 90+ minute stable generation enables complete podcast episodes

- Multilingual/dialect support creates localization opportunities

- Zero-shot cloning reduces voice talent dependencies

- Potential to reduce audio production costs by 60-80% according to early adopters

- Represents significant progress toward indistinguishable synthetic speech