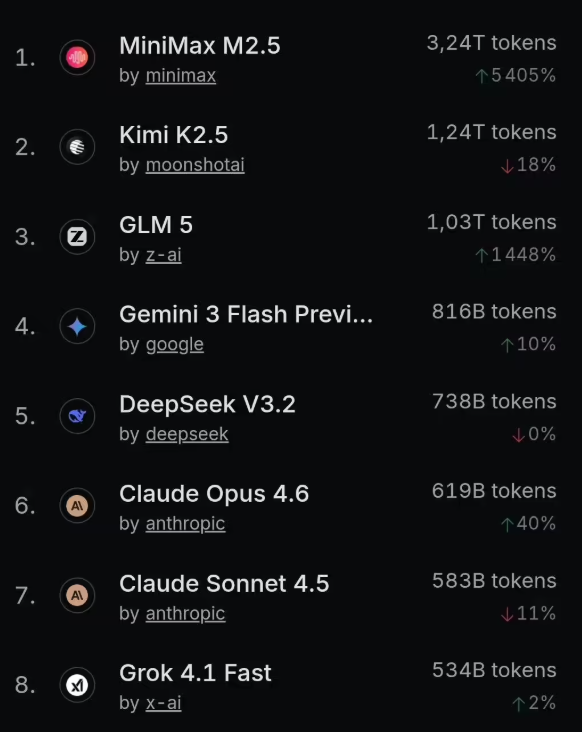

MiniMax Unveils M2 Inference Model for Smart Agents

As the AI industry shifts from competing on parameter counts to competing on efficiency, MiniMax has released M2, its latest open-source reasoning model. Released on October 27, the model is built specifically for smart agents, and MiniMax positions it as a foundation for next-generation AI applications.

Technical Specifications and Performance

The M2 model uses a Mixture-of-Experts (MoE) architecture with 230 billion total parameters, of which only 10 billion are activated during each inference. This sparse activation supports a reported output speed of 100 tokens per second, which makes M2 well suited to real-time interaction scenarios.
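To make these figures concrete, the short sketch below computes the share of parameters active per inference and the time needed to stream a reply at the reported output speed. The function names and the 500-token reply length are illustrative assumptions, and the calculation ignores prompt processing; real throughput depends on hardware and load.

```python
TOTAL_PARAMS = 230e9      # total parameters, as reported
ACTIVE_PARAMS = 10e9      # parameters activated per inference, as reported
TOKENS_PER_SECOND = 100   # reported output speed


def active_fraction(total: float = TOTAL_PARAMS, active: float = ACTIVE_PARAMS) -> float:
    """Fraction of the model's parameters used for a single inference."""
    return active / total


def generation_seconds(num_tokens: int, tokens_per_second: float = TOKENS_PER_SECOND) -> float:
    """Time to emit num_tokens at a constant output speed (prompt processing ignored)."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    print(f"Active share: {active_fraction():.1%}")              # roughly 4.3%
    print(f"500-token reply: {generation_seconds(500):.1f} s")   # 5.0 s
```

Under these assumptions, roughly one parameter in twenty-three does work on any given inference, which is where the speed and cost advantage of the MoE design comes from.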

Strategic Adjustments: Context Window Reduction

Unlike its predecessor, M1, M2 has a smaller context window: 204,800 tokens, down from 1 million. The change reflects MiniMax's pragmatic trade-off among long-text processing, reasoning speed, and deployment cost. M1's million-token capability set benchmarks, but its resource demands limited practical applications. M2 instead prioritizes high-frequency agent tasks, where speed and cost matter more than maximum context length.
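For agent builders, a fixed window means long-running conversations must be kept within budget. The following is a minimal sketch of one common approach, dropping the oldest turns first. The four-characters-per-token estimate and the output reserve are assumptions made for illustration; they are not M2's tokenizer or a MiniMax recommendation.

```python
CONTEXT_WINDOW = 204_800  # M2's context window in tokens, as reported


def estimate_tokens(text: str) -> int:
    """Crude estimate of ~4 characters per token (assumption, not M2's tokenizer)."""
    return max(1, len(text) // 4)


def trim_history(turns: list[str], reserve_for_output: int = 4_096,
                 window: int = CONTEXT_WINDOW) -> list[str]:
    """Keep the most recent turns that fit in the window minus an output reserve."""
    budget = window - reserve_for_output
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):      # walk from newest to oldest
        cost = estimate_tokens(turn)
        if used + cost > budget:
            break                     # this turn and everything older is dropped
        kept.append(turn)
        used += cost
    kept.reverse()                    # restore chronological order
    return kept
```

A production agent would more likely summarize old turns than discard them, but the budgeting logic is the same.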

Designed for Smart Agents

The M2 model is aimed at scenarios that require behavioral decision-making, multi-turn task planning, and environmental interaction. Its architecture is designed to improve reasoning continuity and response efficiency, both critical attributes for building truly autonomous AI agents. Developers can leverage M2 to create:

- Virtual assistants with complex task chains

- Automated workflow robots

- Decision-making agents integrated into enterprise systems

The open-source nature of M2 further lowers barriers for developers aiming to customize agent solutions.
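The multi-turn pattern of planning, acting, and observing can be sketched as a simple loop. Everything in this example is hypothetical: `stub_model` stands in for a real model call, the `tool:<name>:<arg>` and `final:<answer>` message formats are invented for illustration, and the tool registry is a toy. It does not reflect M2's actual interface.

```python
from typing import Callable

# Hypothetical tool registry; names and behavior are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "echo": lambda arg: arg,
    "upper": lambda arg: arg.upper(),
}


def stub_model(history: list[str]) -> str:
    """Stand-in for a model call. Returns 'tool:<name>:<arg>' or 'final:<answer>'."""
    if not any(line.startswith("observation:") for line in history):
        return "tool:upper:hello"     # first step: decide to call a tool
    return "final:" + history[-1].removeprefix("observation:")


def run_agent(task: str, model: Callable[[list[str]], str] = stub_model,
              max_steps: int = 5) -> str:
    """Alternate between model decisions and tool calls until a final answer."""
    history = [f"task:{task}"]
    for _ in range(max_steps):
        action = model(history)
        if action.startswith("final:"):
            return action.removeprefix("final:")
        _, name, arg = action.split(":", 2)
        history.append(f"observation:{TOOLS[name](arg)}")  # feed result back
    return "step limit reached"
```

Because each step requires a fresh model call, per-call latency and cost compound across the loop, which is why output speed matters so much for agent workloads.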

The Future of AI Agents

MiniMax positions M2 as the "reasoning foundation of the Agent era." As AI transitions from mere question-answering tools to proactive agents capable of independent action, models like M2 underscore the importance of speed and cost-efficiency over sheer context length.

Key Points:

- 230B parameters, with only 10B activated per inference.

- Outputs 100 tokens/second, ideal for real-time interactions.

- Reduced context window (204.8K tokens) optimizes speed and cost.

- Open-source model accelerates development of customized smart agents.

- Targets next-gen AI applications requiring rapid decision-making.