Ant Group Open-Sources High-Performance Diffusion Model Framework dInfer

Ant Group Breaks New Ground With Open-Source AI Framework

On October 13, Ant Group open-sourced dInfer, a high-performance inference framework built specifically for diffusion language models. The company presents the release as a milestone in making diffusion language models practical for industrial applications.

Performance Breakthroughs

Benchmark testing reveals dInfer's impressive capabilities:

- 10.7x faster inference than NVIDIA's Fast-dLLM framework

- 1,011 tokens per second in single-batch inference on HumanEval code generation tasks

- 2.5x faster average inference than comparable autoregressive models

The framework demonstrates that through systematic engineering innovation, diffusion language models can realize their theoretical efficiency potential while maintaining accuracy comparable to top autoregressive models.

Overcoming Diffusion Model Challenges

Diffusion language models treat text generation as a gradual "denoising" process from random noise, offering three key advantages:

- High parallelism

- Global perspective

- Flexible structure
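To make the "denoising" idea concrete, here is a minimal toy sketch in Python. It assumes a masked-token formulation, where the "noise" is a sequence of mask tokens that are filled in over several steps. The `toy_predict` function is a hypothetical stand-in for a real model's forward pass, and the confidence threshold rule is an illustrative choice, not dInfer's documented decoding algorithm.

```python
import random

MASK = "<mask>"


def toy_predict(sequence, target, rng):
    """Hypothetical stand-in for a diffusion LM forward pass.

    Returns a (token, confidence) guess for every masked position.
    A real model would compute these from the whole sequence at once;
    here the token is read from `target` and the confidence is random.
    """
    return {
        i: (target[i], rng.random())
        for i, tok in enumerate(sequence)
        if tok == MASK
    }


def denoise(target, threshold=0.6, seed=0):
    """Iteratively unmask a fully masked sequence.

    Each step commits every position whose confidence clears the
    threshold, and always commits the single most confident position
    so the loop is guaranteed to finish.
    """
    rng = random.Random(seed)
    sequence = [MASK] * len(target)
    steps = 0
    while MASK in sequence:
        guesses = toy_predict(sequence, target, rng)
        best = max(guesses, key=lambda i: guesses[i][1])
        for i, (token, confidence) in guesses.items():
            if confidence >= threshold or i == best:
                sequence[i] = token
        steps += 1
    return sequence, steps


target = "def add ( a , b ) : return a + b".split()
tokens, steps = denoise(target)
print(" ".join(tokens), "| steps:", steps)
```

Because several positions can be committed in one step, the number of steps can be smaller than the number of tokens, which is where the parallelism advantage comes from. An autoregressive decoder would need one step per token.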

However, practical implementation has faced three major bottlenecks:

- High computational costs

- KV cache invalidation

- Parallel decoding limitations

dInfer's architecture specifically addresses these challenges through its modular design.

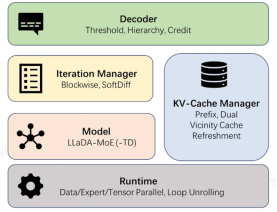

Figure: Architecture of dInfer

Technical Architecture

The framework features four core modules:

- Model - Supports various diffusion language model variants

- KV-Cache Manager - Optimizes memory usage

- Iteration Manager - Coordinates the denoising process

- Decoder - Handles output generation

This plug-and-play design allows developers to experiment with different optimization strategies while maintaining standardized evaluation metrics.
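The plug-and-play idea can be illustrated with a small Python sketch. Every class and method name below (`ToyModel`, `NoCache`, `IterationManager`, `ThresholdDecoder`, `TopKDecoder`, `steps_needed`) is hypothetical and chosen for illustration; none of it is dInfer's actual API. The sketch only shows how swapping one component behind a shared interface lets two decoding strategies be compared on the same metric, here the number of denoising steps.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol

MASK = -1  # hypothetical id for a position that is still masked


class Decoder(Protocol):
    """Interface shared by all decoding strategies in this sketch."""

    def select(self, confidences: Dict[int, float]) -> List[int]: ...


class ThresholdDecoder:
    """Commit every position above a confidence threshold (at least one)."""

    def __init__(self, threshold: float):
        self.threshold = threshold

    def select(self, confidences):
        chosen = [i for i, c in confidences.items() if c >= self.threshold]
        return chosen or [max(confidences, key=confidences.get)]


class TopKDecoder:
    """Commit the k most confident positions at each step."""

    def __init__(self, k: int):
        self.k = k

    def select(self, confidences):
        return sorted(confidences, key=confidences.get, reverse=True)[: self.k]


class ToyModel:
    """Stand-in model: confidence depends only on position and step."""

    def score(self, tokens, step):
        return {
            i: ((i * 37 + step * 11) % 100) / 100
            for i, tok in enumerate(tokens)
            if tok == MASK
        }


class NoCache:
    """Placeholder cache manager: caches nothing, counts forward passes."""

    def __init__(self):
        self.forward_passes = 0

    def run(self, model, tokens, step):
        self.forward_passes += 1
        return model.score(tokens, step)


@dataclass
class IterationManager:
    """Drives the denoising loop using whichever components are plugged in."""

    model: ToyModel
    cache: NoCache
    decoder: Decoder

    def generate(self, length):
        tokens = [MASK] * length
        step = 0
        while MASK in tokens:
            confidences = self.cache.run(self.model, tokens, step)
            for i in self.decoder.select(confidences):
                tokens[i] = i  # toy "prediction": token id equals position
            step += 1
        return tokens, step


def steps_needed(decoder, length=16):
    """Shared metric: denoising steps needed to fill the whole sequence."""
    manager = IterationManager(ToyModel(), NoCache(), decoder)
    tokens, steps = manager.generate(length)
    assert MASK not in tokens
    return steps


for name, decoder in [("threshold", ThresholdDecoder(0.7)), ("top-2", TopKDecoder(2))]:
    print(name, steps_needed(decoder))
```

Only the decoder changes between the two runs; the model, cache manager, and loop stay fixed, so any difference in the step count can be attributed to the decoding strategy alone.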

Industry Implications

The release links research on diffusion language models with practical applications, a step toward making these models viable alternatives to autoregressive approaches.

Ant Group has positioned dInfer as an open invitation to the global developer community to collaboratively explore diffusion models' potential and build more efficient AI ecosystems.

The framework currently supports several model variants including LLaDA, LLaDA-MoE, and LLaDA-MoE-TD.

Key Points:

- First open-source framework achieving faster-than-autoregressive speeds for diffusion models

- Solves longstanding efficiency bottlenecks through systematic engineering

- Modular architecture enables flexible experimentation

- Presented as significant progress toward a practical path to AGI