MiniMax's M2.5 Goes Open-Source: A Game-Changer for Affordable AI Agents

MiniMax Breaks New Ground with M2.5 Open-Source Release

In a move that could reshape the AI landscape, MiniMax has launched its M2.5 model just 108 days after its predecessor. The company has made the model weights openly available on ModelScope, delivering what many developers have been waiting for: high-performance AI at a fraction of the usual cost.

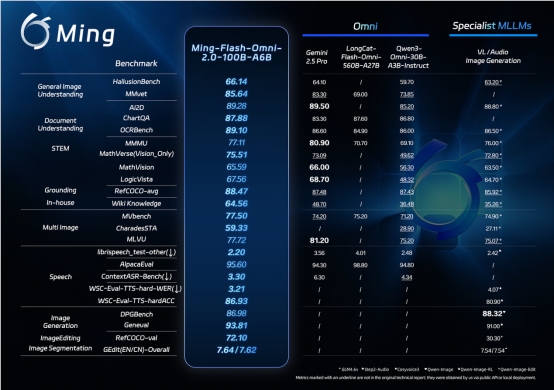

Performance That Turns Heads

The numbers speak for themselves:

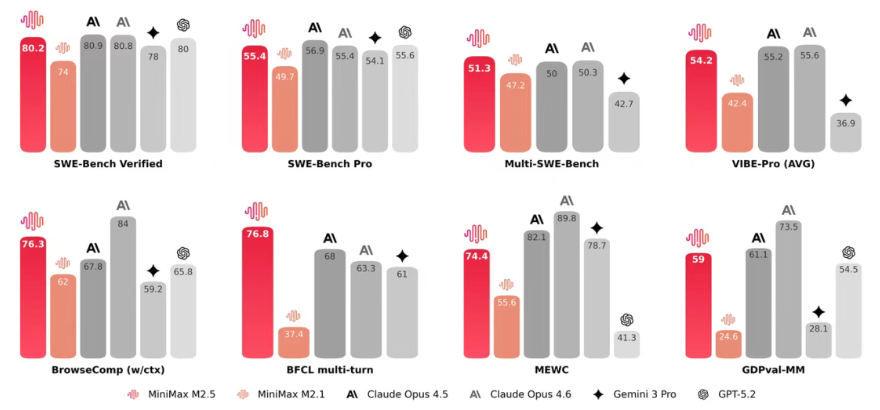

- 80.2% on SWE-Bench Verified, outperforming GPT-5.2 and approaching Claude Opus 4.5

- 51.3% on Multi-SWE-Bench, the top score on this multilingual programming benchmark

- 76.3% on BrowseComp, demonstrating strong web search and browsing capabilities

What does this mean in practice? Developers get architecture-level planning support across multiple platforms, while businesses benefit from 20% fewer search iterations and specialized office skills for fields such as finance and law.

Speed Meets Affordability

Here's where it gets interesting. M2.5 isn't just powerful; it's also fast and economical. It runs 37% faster than M2.1, with response times matching Claude Opus 4.6, but the real shocker is the price tag: just one-tenth the cost of comparable models.
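A quick back-of-the-envelope calculation shows what "one-tenth the cost" means for a sustained workload. The token volume and baseline price below are hypothetical placeholders, not published rates for M2.5 or any competing model; only the 1/10 ratio comes from the article.

```python
# Back-of-the-envelope cost comparison.
# ASSUMPTION: all prices and volumes here are hypothetical placeholders,
# not published rates for M2.5 or any other model.


def workload_cost(tokens: int, price_per_million: float) -> float:
    """Dollar cost of processing `tokens` tokens at a price per million tokens."""
    return tokens / 1_000_000 * price_per_million


def cost_at_fraction(tokens: int, baseline_price: float, fraction: float) -> tuple[float, float]:
    """Return (baseline cost, cost when priced at `fraction` of the baseline price)."""
    baseline = workload_cost(tokens, baseline_price)
    discounted = workload_cost(tokens, baseline_price * fraction)
    return baseline, discounted


if __name__ == "__main__":
    monthly_tokens = 500_000_000  # hypothetical: 500M tokens per month
    baseline_price = 10.0         # hypothetical: $10 per million tokens
    base, cheap = cost_at_fraction(monthly_tokens, baseline_price, 0.1)  # "one-tenth"
    print(f"baseline: ${base:,.2f}  at one-tenth: ${cheap:,.2f}  saved: ${base - cheap:,.2f}")
```

Because cost scales linearly with price, the savings ratio holds at any volume; what changes with scale is the absolute dollar difference.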

The Tech Behind the Speed

MiniMax credits three innovations for its rapid progress:

- Forge, a native agent reinforcement learning (RL) framework that delivers a 40x training speedup

- The CISPO algorithm, which keeps large-scale training stable

- A new reward design that balances task performance against response speed

The results? Internally, M2.5 now handles 30% of MiniMax's daily tasks and generates 80% of newly submitted code.

Deployment Made Simple

Whether you're a non-technical user or a seasoned developer, M2.5 has you covered:

- No-code web interface with 10,000+ pre-built 'Experts'

- Free API access through ModelScope

- Local deployment options including SGLang, vLLM, Transformers, and MLX

The company also offers two API versions, Lightning and Standard, priced at just 1/10 to 1/20 of similar solutions.
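Since vLLM and SGLang can both serve a model behind an OpenAI-compatible HTTP API, one way to exercise M2.5's structured tool calling is a standard Chat Completions request carrying a `tools` array. The sketch below uses only the Python standard library. The server URL, the model identifier `MiniMaxAI/MiniMax-M2.5`, and the `get_weather` tool are illustrative assumptions, not details confirmed by MiniMax; check your deployment's documentation for the exact model name and any server flags needed to enable tool-call parsing.

```python
import json
import urllib.request

# ASSUMPTIONS (not confirmed by the article):
#   - BASE_URL points at an OpenAI-compatible server, e.g. a local vLLM or SGLang instance.
#   - MODEL_ID is a placeholder; use the identifier your server actually reports.
#   - get_weather is a hypothetical tool used only for illustration.
BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "MiniMaxAI/MiniMax-M2.5"

WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(prompt: str) -> dict:
    """Build a Chat Completions payload that offers the model one tool."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "tools": [WEATHER_TOOL],
        "tool_choice": "auto",
    }


def send(payload: dict, api_key: str = "EMPTY") -> dict:
    """POST the payload to /chat/completions and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def extract_tool_calls(response: dict) -> list[tuple[str, dict]]:
    """Return (function name, parsed arguments) for each tool call in the first choice."""
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    # In the OpenAI format, `arguments` is a JSON-encoded string, not an object.
    return [(c["function"]["name"], json.loads(c["function"]["arguments"])) for c in calls]
```

With a server running, `extract_tool_calls(send(build_request("What's the weather in Paris?")))` returns a list of requested calls if the model chooses to use the tool, or an empty list if it answers directly. Keeping request building and response parsing separate from the network call makes both easy to test offline.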

Key Points:

- Open-source availability on ModelScope lowers barriers to entry

- Leading or near-leading results on coding, multilingual programming, and search benchmarks

- Cost-effective operation at just 10% of the cost of comparable models

- Flexible deployment, from a no-code web interface to fully local setups

- Tool integration with native support for structured tool calls