Ant Group's dInfer Speeds Up Diffusion Language Model Inference More Than 10x

Ant Group Unveils Groundbreaking dInfer Framework

Ant Group has officially released dInfer, the industry's first high-performance inference framework designed specifically for diffusion language models. The open-source framework runs 10.7 times faster than NVIDIA's Fast-dLLM in the benchmarks below while maintaining comparable model accuracy.

Benchmark Performance

In standardized tests:

- Reached 1,011 tokens/second on the HumanEval code generation benchmark (single-batch inference)

- Averaged 681 tokens/second versus Fast-dLLM's 63.6 tokens/second (8x H800 GPUs)

- Ran 2.5x faster than the autoregressive model Qwen2.5-3B served with the vLLM framework
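The headline speedup follows directly from the two average throughput figures above. A quick check, using only numbers quoted in this article (the variable names are illustrative):

```python
# Average throughput figures quoted in the article (tokens per second, 8x H800 GPUs).
dinfer_avg_tps = 681.0
fast_dllm_avg_tps = 63.6

# Speedup is the ratio of the two throughputs.
speedup = dinfer_avg_tps / fast_dllm_avg_tps
print(f"dInfer vs Fast-dLLM: {speedup:.1f}x")
```

The ratio rounds to the 10.7x figure cited for Fast-dLLM.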

Technical Breakthroughs

Diffusion language models treat text generation as a denoising process, offering:

- High parallelism capabilities

- Global context awareness

- Flexible structural design
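To make the denoising framing concrete, here is a toy sketch of parallel decoding over a fully masked sequence. It is not dInfer's implementation: `toy_denoiser`, the confidence threshold, and the "always accept the most confident position" rule are assumptions chosen for illustration, and the stand-in model simply reads the answer from a known target.

```python
import random

MASK = "<mask>"

def toy_denoiser(tokens, target, rng):
    """Hypothetical stand-in for one diffusion LM forward pass.

    Returns {position: (predicted_token, confidence)} for every masked
    position. A real model would predict from context; this toy reads the
    answer from `target` and draws a random confidence.
    """
    return {i: (target[i], rng.random())
            for i, tok in enumerate(tokens) if tok == MASK}

def parallel_decode(target, threshold=0.6, seed=0):
    rng = random.Random(seed)
    tokens = [MASK] * len(target)  # start from pure "noise": all masked
    steps = 0
    while MASK in tokens:
        preds = toy_denoiser(tokens, target, rng)
        # Commit every position whose confidence clears the threshold.
        accepted = {i: tok for i, (tok, conf) in preds.items()
                    if conf >= threshold}
        # Always commit the single most confident position so each
        # denoising step makes progress.
        best = max(preds, key=lambda i: preds[i][1])
        accepted[best] = preds[best][0]
        for i, tok in accepted.items():
            tokens[i] = tok
        steps += 1
    return tokens, steps

target = "def add ( a , b ) : return a + b".split()
decoded, steps = parallel_decode(target)
print(" ".join(decoded), "| steps:", steps, "| tokens:", len(target))
```

Because several positions can be committed in one step, the number of model calls can be smaller than the number of tokens, which is where the parallelism advantage over token-by-token autoregressive decoding comes from.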

However, previous implementations faced critical limitations:

- Prohibitive computational costs

- KV cache inefficiencies

- Parallel decoding challenges

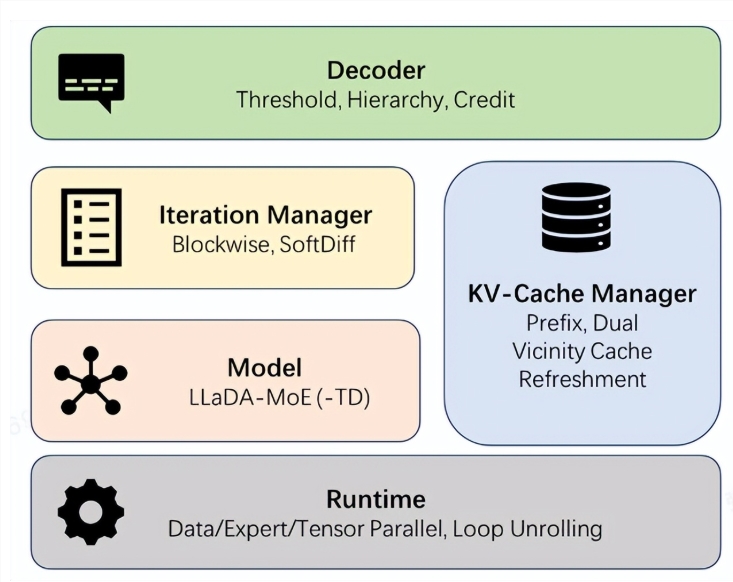

dInfer addresses these through four modular components:

- Model access layer

- KV cache manager

- Diffusion iteration controller

- Adaptive decoding strategies

The LEGO-like architecture allows developers to optimize each component independently while maintaining standardized evaluation protocols.
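The sketch below illustrates what this kind of modularity can look like in code. Every class and function name here is hypothetical and does not reflect dInfer's actual API; the point is only that the model, cache, iteration loop, and decoding strategy can be swapped independently.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

MASK = -1  # hypothetical mask token id

# Hypothetical component signatures (not dInfer's real interfaces).
ModelFn = Callable[[List[int]], Dict[int, Tuple[int, float]]]   # model access layer
DecodeFn = Callable[[Dict[int, Tuple[int, float]]], Dict[int, int]]  # decoding strategy

class KVCacheManager:
    """Toy cache manager: only tracks which positions are finalized."""
    def __init__(self):
        self.finalized = set()

    def update(self, positions):
        self.finalized.update(positions)

@dataclass
class IterationController:
    """Drives the denoising loop using whichever components it is given."""
    model: ModelFn
    decode: DecodeFn
    cache: KVCacheManager
    max_steps: int = 64

    def run(self, length):
        tokens = [MASK] * length
        for _ in range(self.max_steps):
            if MASK not in tokens:
                break
            preds = self.model(tokens)   # (token, confidence) per masked slot
            chosen = self.decode(preds)  # strategy decides what to commit
            for pos, tok in chosen.items():
                tokens[pos] = tok
            self.cache.update(chosen)
        return tokens

def dummy_model(tokens):
    # Predicts token id == position; confidence falls with position.
    return {i: (i, 1.0 / (1 + i)) for i, t in enumerate(tokens) if t == MASK}

def top1(preds):
    # Commit only the most confident position per step.
    best = max(preds, key=lambda i: preds[i][1])
    return {best: preds[best][0]}

def threshold(preds, tau=0.2):
    # Commit all positions above tau, falling back to top1 if none qualify.
    picked = {i: tok for i, (tok, conf) in preds.items() if conf >= tau}
    return picked or top1(preds)

for strategy in (top1, threshold):
    ctrl = IterationController(dummy_model, strategy, KVCacheManager())
    print(strategy.__name__, ctrl.run(8))
```

Swapping `top1` for `threshold` changes how many positions are committed per step without touching the model, cache, or loop, which is the kind of independent optimization the modular design is meant to allow.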

Industry Implications

The framework bridges cutting-edge research with practical deployment scenarios:

- Enables real-time applications previously constrained by inference speed

- Opens new pathways for AGI development

- Provides measurable speed advantages over autoregressive approaches in the reported benchmarks

"This release represents more than just a speed improvement," stated an Ant Group spokesperson. "It's about creating an ecosystem where diffusion models can realize their full potential alongside traditional architectures."

The company invites global researchers to collaborate on further optimizing the framework through its open-source platform.

Key Points:

- 10.7x faster than Fast-dLLM in the reported benchmarks

- Diffusion language model inference that outpaces an autoregressive model (Qwen2.5-3B on vLLM) by 2.5x

- Modular design enables targeted optimizations

- Potential game-changer for AGI development timelines