BytePush Launches 1.58-bit FLUX Model for Efficient AI

BytePush Unveils 1.58-bit Quantized FLUX Model

Introduction

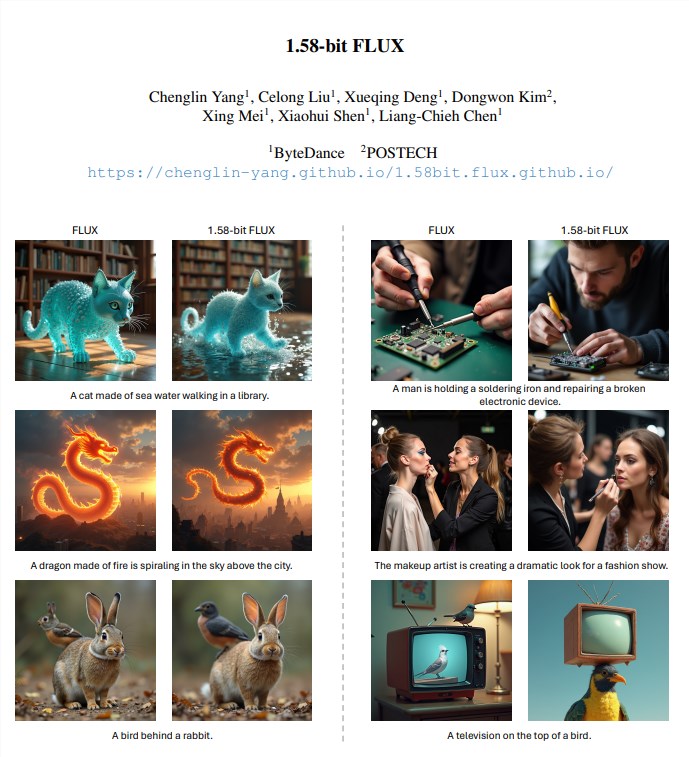

Artificial Intelligence (AI)-driven text-to-image (T2I) generation models like DALLE3 and Adobe Firefly3 have showcased remarkable capabilities, yet their extensive memory requirements pose challenges for deployment on devices with limited resources. To overcome these obstacles, researchers from ByteDance and POSTECH have introduced a 1.58-bit quantized FLUX model that significantly reduces memory usage while boosting performance.

The Challenge of Resource Constraints

T2I models typically contain billions of parameters, making them unsuitable for mobile devices and other resource-constrained platforms. The quest for low-bit quantization techniques is essential for making these powerful models more accessible and efficient in real-world applications.

Research Methodology

The research team focused on the FLUX.1-dev model, which is publicly available and recognized for its performance. They applied a novel 1.58-bit quantization technique that compresses the visual transformer weights into just three distinct values: {-1, 0, +1}. This method does not require access to image data, relying solely on the model's self-supervision. Unlike the BitNet b1.58 approach, which necessitates training a large language model from scratch, this post-training quantization solution optimizes existing T2I models.

Key Improvements

Using this 1.58-bit quantization method, the researchers achieved a 7.7 times reduction in storage space. The compressed weights are stored as 2-bit signed integers, transitioning from the standard 16-bit precision. Additionally, a custom kernel designed for low-bit computation was implemented, which reduced inference memory usage by over 5.1 times and improved inference speed.

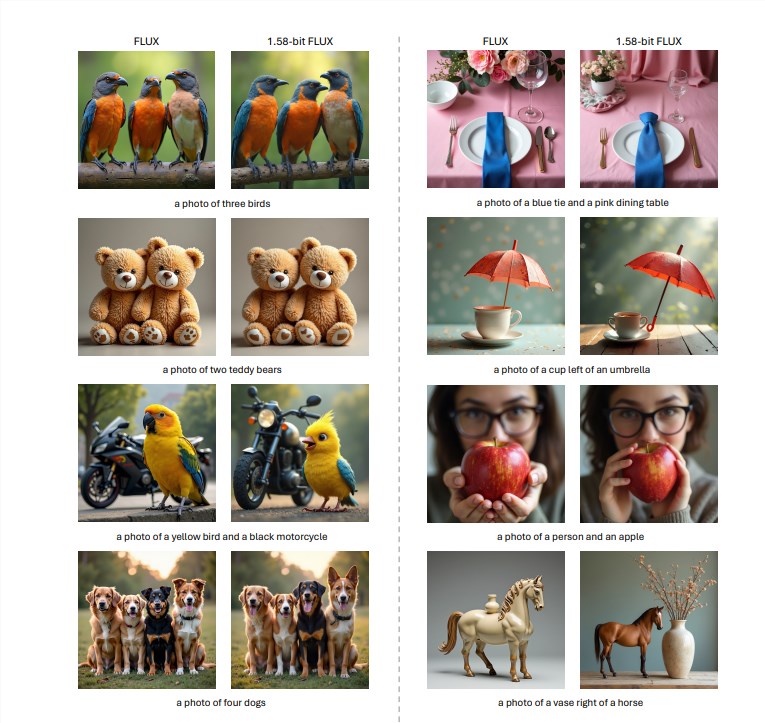

Evaluations against established benchmarks, including GenEval and T2I Compbench, demonstrated that the 1.58-bit FLUX model not only maintains generation quality comparable to the full-precision FLUX model but also enhances computational efficiency.

Performance Metrics

The researchers quantized an impressive 99.5% of the visual transformer parameters, amounting to 11.9 billion parameters in the FLUX model. Experimental results revealed that the 1.58-bit FLUX performs similarly to the original model on the T2I CompBench and GenEval datasets. Notably, the model exhibited more substantial improvements in inference speed on lower-performance GPUs, such as the L20 and A10.

Conclusion

The introduction of the 1.58-bit FLUX model represents a significant advancement in the deployment of T2I models on devices with limited memory and latency. Despite some constraints regarding speed improvements and high-resolution image rendering, the model's potential for enhancing efficiency and reducing resource consumption is promising for future research in AI.

Key Points

- Model storage space reduced by 7.7 times.

- Inference memory usage decreased by over 5.1 times.

- Performance maintained at levels comparable to the full-precision FLUX model in benchmarks.

- Quantization process does not require access to any image data.

- A custom kernel optimized for low-bit computation enhances inference efficiency.