AI's Learning Gap: Why Machines Can't Grow from Failure Like Humans

The Fundamental Flaw Holding Back Artificial Intelligence

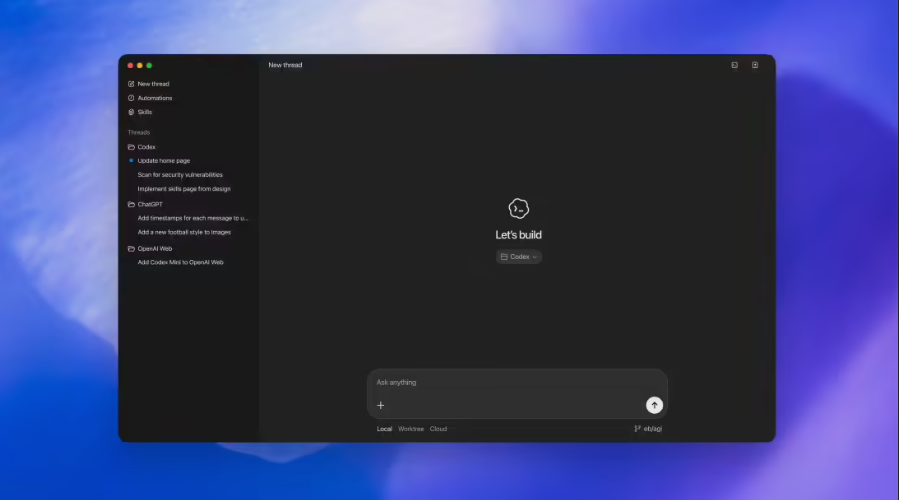

While artificial intelligence has made staggering advances in recent years, a former OpenAI insider warns that the field is missing something crucial: the ability to learn from failure. Jerry Tworek, who played key roles in developing OpenAI's o1 and o3 reasoning models, paints a sobering picture of today's AI limitations.

"When these systems get something wrong," Tworek explains, "they don't actually understand they've failed." Unlike humans, who adjust their approach after mistakes, current AI models simply hit dead ends, with no mechanism for updating their knowledge or strategies.

The Fragility of Machine Learning

The problem shows most clearly when AI encounters situations outside its training data. Tworek describes this as "reasoning collapse," where the model essentially gives up rather than finding creative solutions. It's a stark contrast to human cognition, where setbacks often lead to breakthroughs.

This fragility prompted Tworek's departure from OpenAI to pursue what he sees as the holy grail: AI that can autonomously recover from failures. "True intelligence," he argues, "should be resilient like life itself, always finding pathways forward."

Why This Matters for AGI

The inability to learn from mistakes isn't just an inconvenience; it represents what Tworek calls "the fundamental barrier" to achieving artificial general intelligence (AGI). Without self-correction abilities comparable to those of humans, even the most advanced systems remain confined to the kinds of problems they were trained on.

Tworek compares current AI training methods to teaching someone every possible scenario upfront, which is impossible given life's unpredictability. Humans succeed because we adapt; machines fail because they can't.

Key Points:

- Learning Disability: Current AI lacks mechanisms equivalent to human error correction and knowledge updating

- AGI Roadblock: The tendency toward "reasoning collapse" on unfamiliar problems may prevent true general intelligence

- New Approaches Needed: Experts like Tworek are leaving major labs to pioneer architectures capable of autonomous problem-solving