Aliyun Expands Qwen3-VL Models for Mobile AI Applications


Alibaba Cloud's AI research division has announced significant expansions to its Qwen3-VL visual language model family, introducing two new parameter sizes designed to bridge the gap between mobile accessibility and high-performance AI.

New Model Variants

The newly launched 2B (2 billion parameter) and 32B (32 billion parameter) models represent strategic additions to Alibaba's growing AI portfolio. These developments follow increasing market demand for:

- Edge-compatible lightweight models

- High-accuracy visual reasoning systems

- Scalable solutions across hardware platforms

Specialized Capabilities

Instruct Model Features:

- Rapid response times (<500ms latency)

- Stable execution for dialog systems

- Optimized for tool integration scenarios
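The article does not say how the sub-500ms figure was measured. As a rough illustration only, a latency budget like this could be checked with a small timing harness. The names `median_latency_ms`, `within_budget`, and `fake_model_call` below are hypothetical, and the stub stands in for a real inference request:

```python
import statistics
import time
from typing import Callable


def median_latency_ms(call: Callable[[], object], runs: int = 5) -> float:
    """Time `call` several times and return the median latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def within_budget(call: Callable[[], object],
                  budget_ms: float = 500.0,
                  runs: int = 5) -> bool:
    """Return True if the median latency stays under the budget."""
    return median_latency_ms(call, runs) < budget_ms


def fake_model_call() -> str:
    # Assumption: placeholder for a real request to an Instruct model.
    time.sleep(0.01)
    return "ok"


if __name__ == "__main__":
    print("within 500 ms budget:", within_budget(fake_model_call))
```

The median is used rather than the mean so that a single slow run (for example, a cold start) does not dominate the result.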

Thinking Model Advantages:

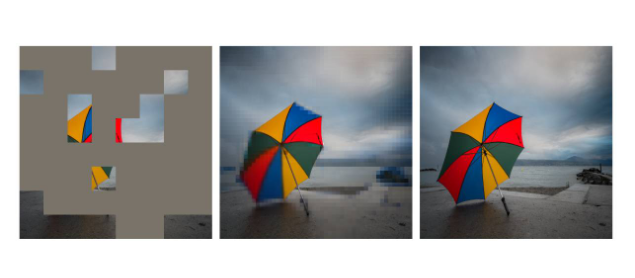

- Advanced long-chain reasoning capabilities

- Complex visual comprehension functions

- "Think while seeing" image analysis technology

The 32B variant demonstrates particular strength in benchmark comparisons, reportedly outperforming established models like GPT-5 mini and Claude Sonnet 4 across multiple evaluation metrics.

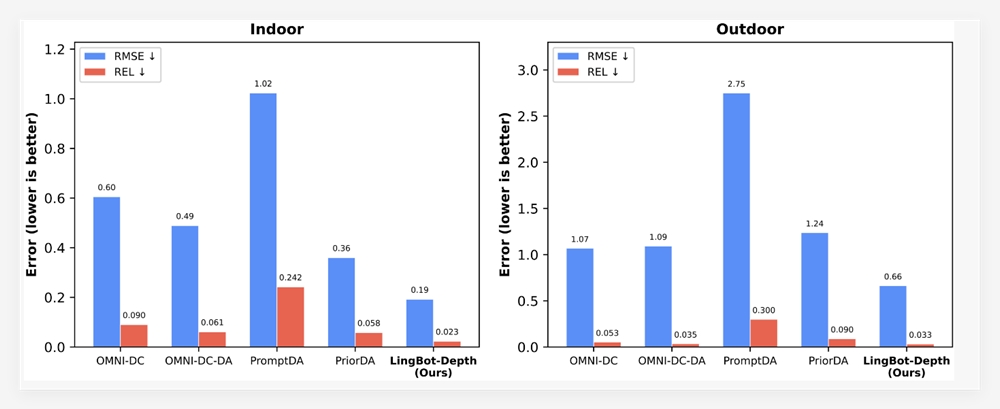

Performance Benchmarks

Independent testing reveals:

- Qwen3-VL-32B achieves results comparable to some 235B-parameter models

- Exceptional scores on the OSWorld evaluation platform

- The compact 2B version maintains usable accuracy on resource-limited devices
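To see why the 2B size matters for resource-limited devices, a back-of-the-envelope estimate of weight storage helps. The sketch below assumes nominal parameter counts (exactly 2e9 and 32e9) and common precisions. The `fits_on_device` rule of thumb and its `headroom` value are hypothetical, not figures from Alibaba:

```python
# Rough weight-memory estimate. It ignores activations, the KV cache, and
# runtime buffers, so real memory usage will be higher than these numbers.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Approximate weight storage in GiB for a given numeric precision."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


def fits_on_device(num_params: float, precision: str,
                   device_ram_gib: float, headroom: float = 0.5) -> bool:
    """Hypothetical rule: weights may occupy at most `headroom` of device RAM."""
    return weight_memory_gib(num_params, precision) <= device_ram_gib * headroom


if __name__ == "__main__":
    # Nominal sizes; the released checkpoints may differ slightly.
    for name, params in [("2B", 2e9), ("32B", 32e9)]:
        for precision in BYTES_PER_PARAM:
            gib = weight_memory_gib(params, precision)
            print(f"{name} {precision}: {gib:.1f} GiB")
```

Under these assumptions, the 2B weights take roughly 3.7 GiB at fp16 and under 1 GiB at 4-bit, while the 32B weights need about 15 GiB even at 4-bit, which is beyond the RAM of typical phones.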

The models are now accessible through popular platforms including ModelScope and Hugging Face, with Alibaba providing dedicated API endpoints for enterprise implementations.

Developer Implications

The introduction of these models addresses three critical industry needs:

- Mobile deployment feasibility

- Cost-effective inference solutions

- Specialized visual-language task handling

"These expansions demonstrate our commitment to making advanced AI accessible across the hardware spectrum," noted Dr. Li Zhang, Alibaba Cloud's Head of AI Research.

The company has also released optimization toolkits specifically designed for Android and iOS integration, potentially opening new avenues for on-device AI applications.

Key Points:

- 🌟 Dual expansion: New 2B (lightweight) and 32B (high-performance) variants added

- 📱 Mobile optimization: Smartphone-compatible implementations available

- 🏆 Competitive edge: Outperforms several market alternatives in benchmarks

- 🛠️ Developer ready: Available on ModelScope and Hugging Face platforms