Robots Can Now Grasp Glassware Thanks to Breakthrough Depth Perception Tech

Robots Finally Master the Art of Handling Glass

Ever watched a robot fumble with a wine glass? That frustrating limitation may soon be history. Ant Group's Lingbo Technology just unveiled LingBot-Depth, an open-source spatial perception model that gives machines far more precise depth perception, especially for tricky transparent and reflective objects.

Seeing Through the Invisible

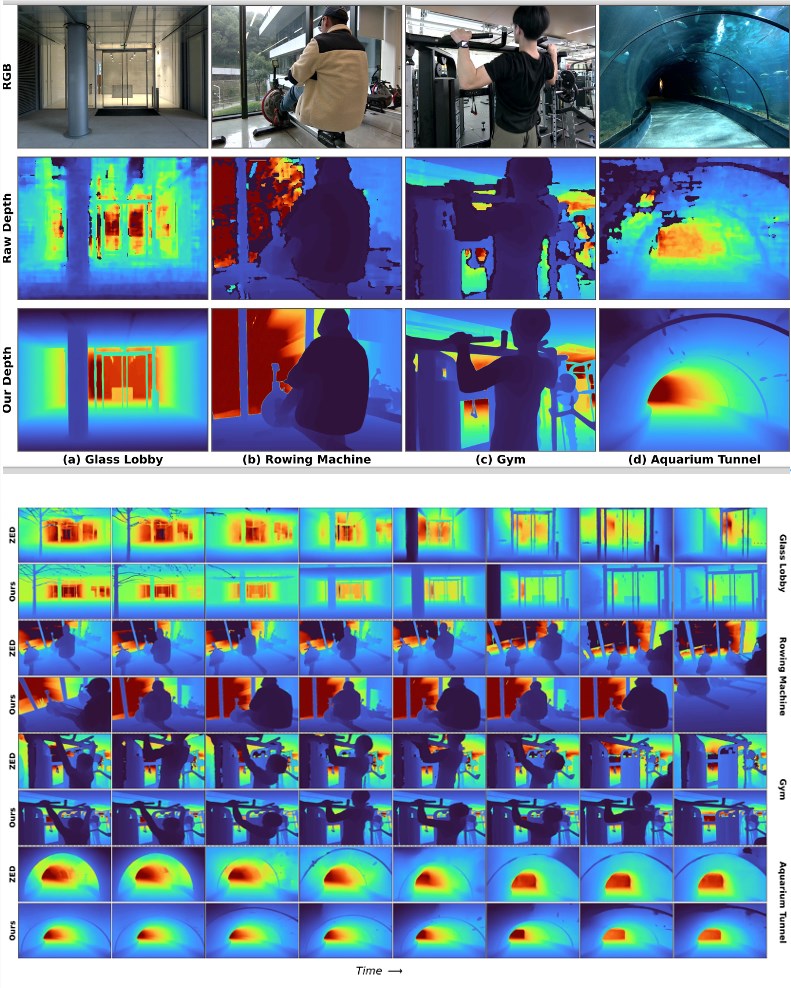

The breakthrough comes at an opportune time. As robots move from factories into homes and hospitals, their inability to reliably handle glassware, mirrors, and stainless steel equipment has been a persistent roadblock. Traditional depth cameras often fail when light passes through transparent surfaces or bounces off reflective ones, leaving gaps or noise in the depth map.

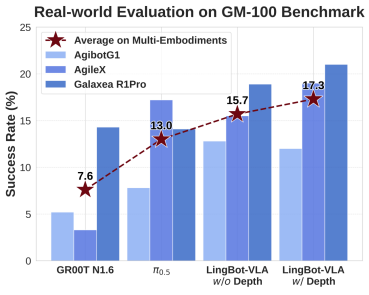

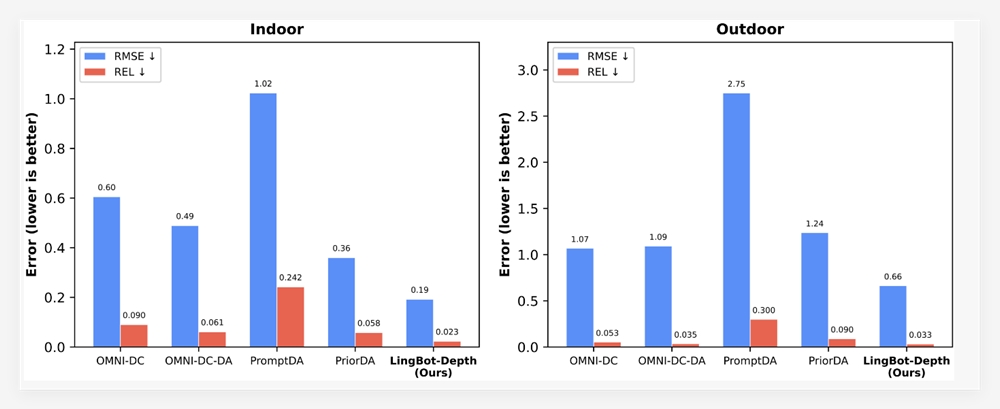

Caption: LingBot-Depth (far right) outperforms existing models in sparse depth completion tasks

"It's like giving robots X-ray vision," explains Dr. Wei Zhang, lead researcher on the project. "Where conventional systems see gaps or noise with glass objects, our model reconstructs the complete 3D shape by analyzing texture clues and contextual information."

The secret sauce? A novel approach called Masked Depth Modeling (MDM) that fills in missing depth data using cues from the color image. Paired with Orbbec's Gemini 330 stereo cameras, the system achieves what engineers previously thought impossible - accurate depth maps of transparent surfaces.
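To make the idea of "filling in missing depth from color cues" concrete, here is a minimal sketch. It is not the MDM model itself, which is a learned network. It is a simple hand-written stand-in (a color-weighted neighbor average) that shows the same intuition: pixels that look alike in the color image probably sit at a similar depth. The function name `fill_depth_with_color`, its parameters, and the toy scene are all assumptions made for illustration.

```python
import numpy as np

def fill_depth_with_color(depth, rgb, radius=2, sigma_color=0.1):
    """Fill missing depth (value 0) from valid neighbors, weighted by color similarity.

    Illustrative stand-in only; not LingBot-Depth's actual method.
    depth: (H, W) array, 0 marks a missing measurement.
    rgb:   (H, W, 3) float array with values in [0, 1].
    """
    h, w = depth.shape
    filled = depth.astype(np.float64).copy()
    valid = depth > 0
    for y, x in zip(*np.nonzero(~valid)):
        # Clip the search window to the image borders.
        y0, y1 = max(0, y - radius), min(h, y + radius + 1)
        x0, x1 = max(0, x - radius), min(w, x + radius + 1)
        patch_valid = valid[y0:y1, x0:x1]
        if not patch_valid.any():
            continue  # no valid neighbor in range; leave the hole as is
        # Neighbors with a similar color get a weight near 1, others near 0.
        color_diff = rgb[y0:y1, x0:x1] - rgb[y, x]
        weights = np.exp(-np.sum(color_diff ** 2, axis=-1) / (2 * sigma_color ** 2))
        weights = weights * patch_valid  # ignore neighbors that are holes too
        total = weights.sum()
        if total > 0:
            filled[y, x] = (weights * depth[y0:y1, x0:x1]).sum() / total
    return filled

# Toy scene: a red object at 1.0 m next to a blue background at 2.0 m.
rgb = np.zeros((5, 5, 3))
rgb[:, :2] = [1.0, 0.0, 0.0]   # red columns
rgb[:, 2:] = [0.0, 0.0, 1.0]   # blue columns
depth = np.where(rgb[..., 0] > 0.5, 1.0, 2.0)
depth[2, 1] = 0.0              # simulated sensor dropout on the red object
depth[2, 3] = 0.0              # simulated sensor dropout on the background

filled = fill_depth_with_color(depth, rgb)
print(filled[2, 1], filled[2, 3])
```

In this toy scene each hole takes its depth from neighbors of the same color, so the dropout on the red object is filled from the object rather than from the background behind it. A learned model goes much further, but the color-guides-depth principle is the same.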

Putting Precision to the Test

In head-to-head comparisons against industry leaders, LingBot-Depth:

- Reduced indoor scene errors by 70% versus standard solutions

- Cut sparse reconstruction errors nearly in half (47% improvement)

- Maintained clarity even with strong backlighting and complex curves
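For readers wondering what an error reduction of this kind means numerically, the sketch below works through the arithmetic. The article does not say which error metric was used, so the choice of mean absolute relative error here is an assumption, and the depth values are synthetic numbers picked to make the calculation easy to follow, not measured results.

```python
import numpy as np

def abs_rel(pred, gt):
    """Mean absolute relative depth error over pixels that have ground truth."""
    mask = gt > 0  # 0 marks pixels without ground truth
    return float(np.mean(np.abs(pred[mask] - gt[mask]) / gt[mask]))

def percent_reduction(baseline_error, new_error):
    """How much lower new_error is than baseline_error, as a percentage."""
    return 100.0 * (baseline_error - new_error) / baseline_error

# Synthetic example values in meters (not real benchmark data).
gt = np.array([1.0, 2.0, 4.0, 0.0])            # last pixel has no ground truth
baseline = np.array([1.1, 2.2, 4.4, 3.0])      # every valid pixel is 10% off
improved = np.array([1.03, 2.06, 4.12, 3.0])   # every valid pixel is 3% off

baseline_err = abs_rel(baseline, gt)
improved_err = abs_rel(improved, gt)
print(round(percent_reduction(baseline_err, improved_err), 1))  # about 70
```

Read this way, a 70% reduction means the remaining error is roughly 0.3 times the baseline, and a 47% reduction leaves roughly 0.53 times the baseline.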

Caption: Top - LingBot-Depth reconstructs glass surfaces; Bottom - Outperforming ZED Stereo Depth

The implications stretch far beyond just handling fragile items. Autonomous vehicles could better detect wet roads or ice patches. Industrial robots might safely manipulate shiny machine parts without costly sensors.

Opening the Floodgates

In an unusual move for corporate research, Lingbo Technology is open-sourcing not just the model but also:

- 2 million real-world depth samples

- 1 million simulated training samples

- Full documentation for integration

The data treasure trove represents six months of intensive field collection across homes, factories, and laboratories worldwide.

The company also announced plans for next-gen Orbbec cameras optimized specifically for LingBot-Depth processing - potentially bringing this advanced vision to consumer robotics sooner than expected.

Key Points:

- Solves robotics' "glass problem" through innovative depth modeling

- Cuts depth errors by up to 70% compared with existing solutions

- Massive dataset release accelerates industry adoption

- Coming soon to specialized stereo cameras

- Open-source approach could democratize advanced robotic vision