Tiny AI Brain Fits in Your Pocket: Liquid AI's Breakthrough Model Runs on Phones

Liquid AI Packs Serious Brainpower Into Smartphones

Imagine having an AI assistant that doesn't just chat but actually thinks through problems like a human - and fits comfortably in your pocket. That's exactly what Liquid AI has achieved with its groundbreaking new model.

Small Package, Big Brains

The LFM2.5-1.2B-Thinking model represents a major leap forward for edge computing. While other companies chase ever-larger language models requiring massive computing power, Liquid AI went the opposite direction - shrinking sophisticated reasoning capabilities down to smartphone size.

"We're not trying to recreate general conversation," explains the development team. "We focused laser-like on creating an AI that excels at logical reasoning and problem-solving while staying lean enough for mobile devices."

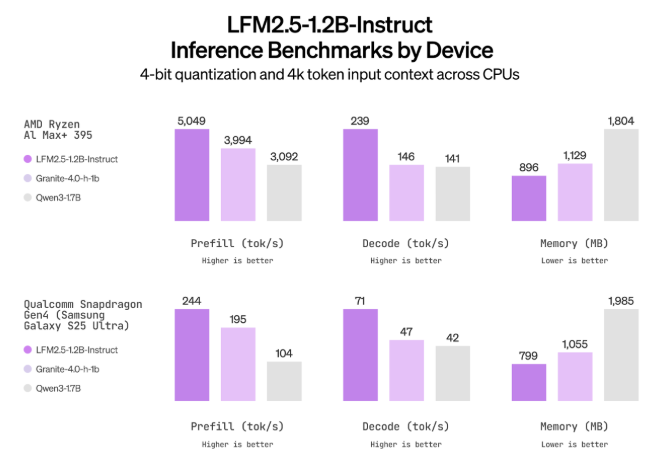

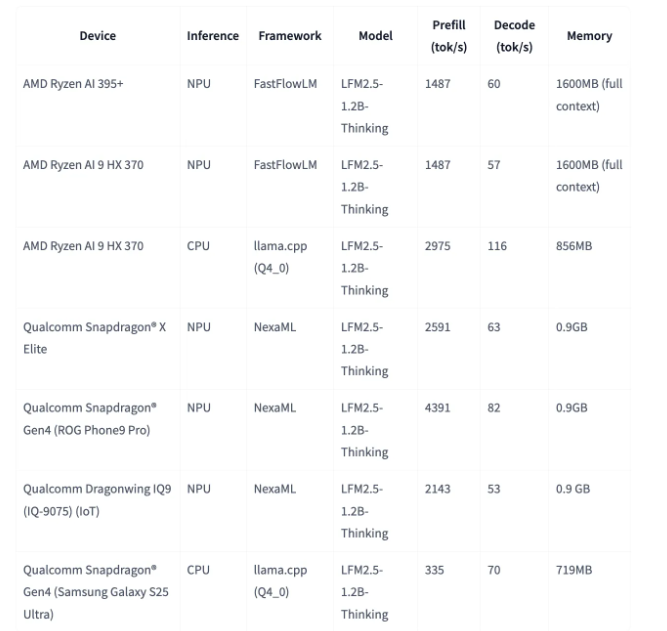

The numbers speak volumes:

- 900MB memory usage - smaller than most mobile games

- 239 tokens/second processing speed on AMD CPUs

- 82 tokens/second even on mobile NPUs
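To put those speeds in perspective, here is a minimal back-of-the-envelope sketch that turns the two quoted rates into waiting time. The 1,000-unit response length is a hypothetical figure chosen for illustration, not one reported by Liquid AI, and the function names are our own. The calculation also assumes a steady generation rate, which real devices will not hold exactly.

```python
# Throughput figures quoted in the article, in units generated per second.
RATES = {"AMD CPU": 239.0, "mobile NPU": 82.0}


def seconds_to_generate(length: int, rate: float) -> float:
    """Time needed to emit `length` units at a steady `rate` (units/second)."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return length / rate


def estimate(length: int) -> dict[str, float]:
    """Estimated generation time per device for a response of `length` units."""
    return {device: seconds_to_generate(length, rate) for device, rate in RATES.items()}


if __name__ == "__main__":
    # Hypothetical response length (thinking trace plus answer); an assumption.
    for device, seconds in estimate(1000).items():
        print(f"{device}: {seconds:.1f} s")
```

Under these assumptions, a response of that length would take roughly four seconds on the CPU and about twelve on the phone's NPU.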

Thinking Like Humans Do

What sets this model apart is how it works through problems. Rather than instantly spitting out answers, LFM2.5 generates internal "thinking traces" - essentially showing its work before presenting conclusions.

This approach mirrors human cognition:

- Breaking down complex problems into steps

- Verifying intermediate results

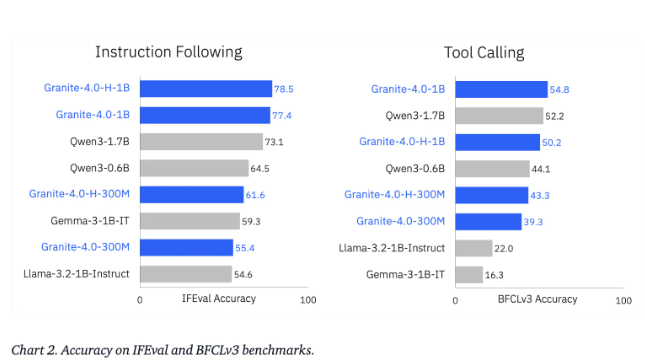

- Adjusting course when needed The result? Accuracy rates that leave competitors in the dust.

Solving the Stuck-in-a-Loop Problem

The team tackled one of AI's most frustrating quirks head-on: those moments when models get trapped repeating themselves endlessly.

Using innovative multi-stage reinforcement learning, they slashed:

- Loop occurrence from 15.74% → 0.36%

- Response lag by over 90%
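For a sense of scale, the loop figures above work out to a relative reduction of nearly 98%. The sketch below shows that arithmetic along with one simple way a "stuck in a loop" output might be flagged when measuring such a rate. The detector is purely illustrative: it is not Liquid AI's method (the fix described here is reinforcement learning during training), and the function names, `max_period`, and `min_repeats` thresholds are assumptions.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a rate dropped, e.g. 0.5 means it was halved."""
    return (before - after) / before


def has_repetition_loop(tokens: list[str], max_period: int = 8, min_repeats: int = 3) -> bool:
    """Return True if the output ends with one short chunk repeated back to back.

    Checks every chunk length from 1 to `max_period` and looks for at least
    `min_repeats` consecutive copies at the very end of `tokens`.
    """
    for period in range(1, max_period + 1):
        need = period * min_repeats
        if len(tokens) < need:
            break  # longer periods need even more tokens
        tail = tokens[-need:]
        if tail == tail[:period] * min_repeats:
            return True
    return False


if __name__ == "__main__":
    # Loop rates quoted in the article, in percent.
    print(f"Relative reduction: {relative_reduction(15.74, 0.36):.1%}")
    print(has_repetition_loop("so the answer is 4 is 4 is 4".split()))
    print(has_repetition_loop("so the answer is 4".split()))
```

A check like this only catches exact repeats at the end of an output, so a real evaluation would likely need something more forgiving.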

The implications are enormous - imagine reliable medical diagnostics or financial analysis happening offline on a nurse's tablet or banker's phone.

What This Means For You

The era of waiting for cloud servers to process complex requests may be ending soon:

- Travelers could get real-time itinerary adjustments without WiFi

- Students might solve advanced math problems offline

- Field researchers can analyze data anywhere

All powered by AI brains small enough to fit alongside your selfies and playlists.

The future isn't just smart - it's portable.