Liquid AI's Tiny Powerhouses Bring Big AI to Small Devices

Liquid AI's Compact AI Models Make Big Waves

In an era where bigger often means better in artificial intelligence, Liquid AI is taking a different approach. Their newly released LFM2.5 family proves that good things do come in small packages - especially when it comes to edge computing.

Small Models, Big Ambitions

The LFM2.5 series builds on the company's existing LFM2 architecture but brings significant upgrades under the hood. What makes these models special? They're specifically engineered to run efficiently on everyday devices rather than requiring massive cloud servers.

"We're seeing tremendous demand for capable AI that can operate locally," explains Dr. Sarah Chen, Liquid AI's lead researcher. "Whether it's privacy concerns or the need for real-time responsiveness, there are compelling reasons to bring intelligence directly to devices."

Technical Breakthroughs

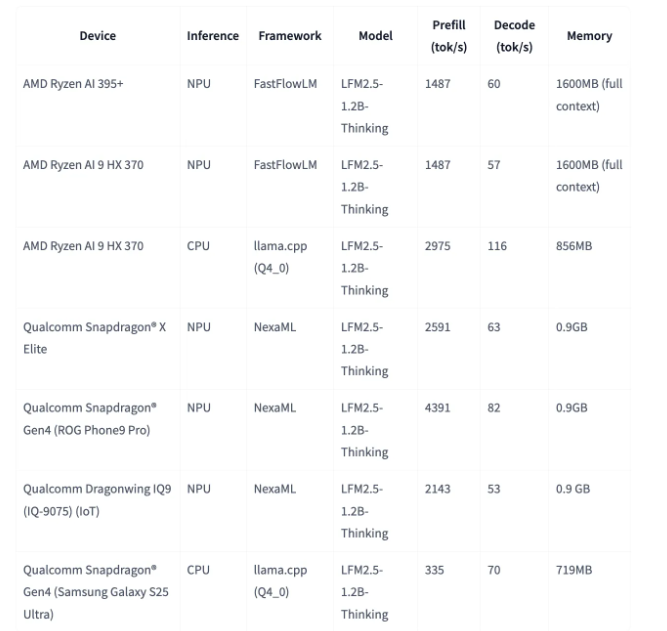

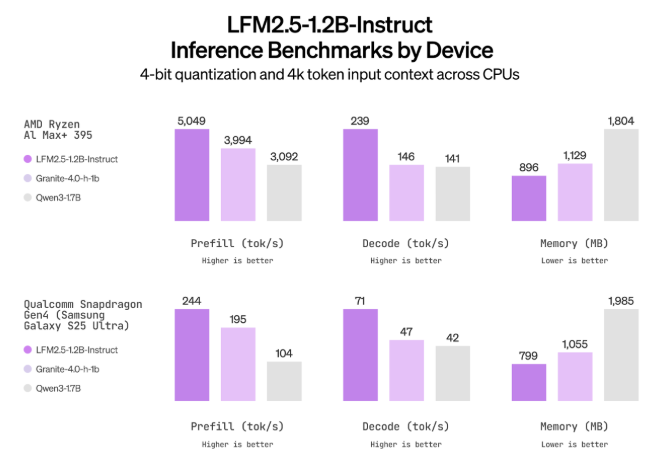

The numbers tell an impressive story:

- Training data nearly tripled from 10 trillion to 28 trillion tokens

- Base model size of 1.2 billion parameters

- Includes specialized variants for Japanese, vision-language, and audio-language tasks

The models were fine-tuned with supervised learning and multi-stage reinforcement learning. This focused approach helps them excel at specific challenges like mathematical reasoning and tool use.

Benchmark Dominance

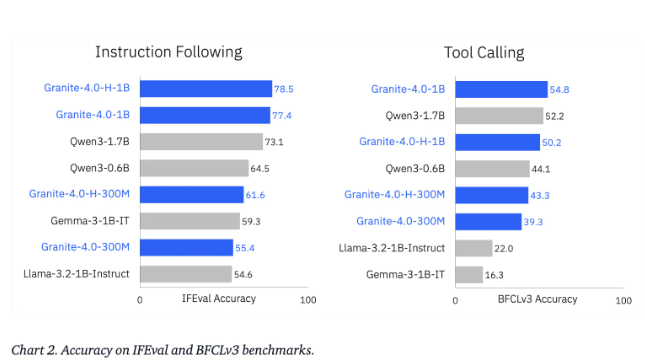

When put through their paces:

- The general-purpose LFM2.5-1.2B-Instruct scored 38.89 on GPQA and 44.35 on MMLU-Pro

- It outperformed comparable models such as Llama-3.2-1B Instruct by significant margins

- The Japanese-specific version shows particular strength on Japanese-language tasks

The vision-language model (LFM2.5-VL-1.6B) brings image understanding capabilities ideal for document analysis and interface reading - think smarter scanning apps or accessibility tools.

Meanwhile, the audio model (LFM2.5-Audio-1.5B) processes speech eight times faster than previous solutions, opening doors for real-time voice applications without cloud dependency.
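The sizes in the model names give a rough sense of why these models fit on everyday hardware. Here is a back-of-the-envelope sketch, assuming the suffix in each name (1.2B, 1.6B, 1.5B) is the parameter count and counting weights only. The precision table is illustrative rather than a list of formats Liquid AI ships, and real memory use will be higher once activations and runtime overhead are included.

```python
# Rough weights-only memory estimate for the models named in this article.
# Assumption: the size suffix in each model name is its parameter count.
MODELS = {
    "LFM2.5-1.2B-Instruct": 1.2e9,
    "LFM2.5-VL-1.6B": 1.6e9,
    "LFM2.5-Audio-1.5B": 1.5e9,
}

# Bytes per parameter at some common storage precisions (illustrative only).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def footprint_table() -> dict:
    """Map each model name to its estimated footprint per precision."""
    return {
        name: {p: round(weight_memory_gb(n, p), 2) for p in BYTES_PER_PARAM}
        for name, n in MODELS.items()
    }


if __name__ == "__main__":
    for name, row in footprint_table().items():
        print(name, row)
```

By this estimate the 1.2B model needs about 2.4 GB for weights at 16-bit precision and about 0.6 GB at 4-bit, which is within reach of many phones and laptops.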

Why This Matters

The tech industry is waking up to the limitations of cloud-only AI solutions:

- Privacy: Keeping sensitive data local

- Reliability: Functioning without internet connections

- Responsiveness: Eliminating network latency

- Cost: Reducing expensive cloud computing needs

With these open-source models now available on Hugging Face and showcased on Liquid's LEAP platform, developers worldwide can start experimenting with powerful edge AI solutions today.
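For developers who want to try this, here is a minimal sketch of loading the instruct model locally with the Hugging Face `transformers` library. The repository id is an assumption inferred from the model name in this article, and the helper names `build_messages` and `generate_reply` are hypothetical. Check Liquid AI's Hugging Face page for the exact id and the `transformers` version the model requires.

```python
# Sketch only: the repository id is an assumption based on the model name
# in this article, not a confirmed identifier.
ASSUMED_REPO_ID = "LiquidAI/LFM2.5-1.2B-Instruct"


def build_messages(user_text: str) -> list:
    """Wrap a user prompt in the message format that chat templates expect."""
    return [{"role": "user", "content": user_text}]


def generate_reply(
    user_text: str,
    repo_id: str = ASSUMED_REPO_ID,
    max_new_tokens: int = 128,
) -> str:
    """Download the weights (first call only) and generate a reply locally."""
    # Imported lazily so the helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(repo_id)
    model = AutoModelForCausalLM.from_pretrained(repo_id)

    # Render the conversation with the model's own chat template.
    inputs = tokenizer.apply_chat_template(
        build_messages(user_text),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, not the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate_reply("Summarize edge AI in one sentence.")` downloads the weights on first use and then runs entirely on the local machine, which is the privacy and latency advantage described above.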

Key Points:

- Compact powerhouses: The LFM2.5 series delivers impressive performance in small packages optimized for edge devices.

- Multimodal mastery: From text processing to image and audio understanding - all running efficiently locally.

- Open access: Available as open-source weights encouraging widespread adoption and innovation.