Tencent's Hunyuan 2.0 AI Model Takes a Big Leap Forward

Tencent Unveils Major Upgrade to Its Flagship AI Model

Tencent has officially launched Hunyuan 2.0, the next generation of its homegrown large language model, bringing substantial improvements across multiple capabilities. The tech giant simultaneously announced deeper integration of DeepSeek V3.2 into its ecosystem, with both models now available in Tencent's AI-native applications including Yuanbao and ima.

What's New Under the Hood

The upgraded model adopts a Mixture of Experts (MoE) architecture with 406 billion total parameters, of which 32 billion are activated for any given token. It also supports a context window of up to 256K tokens, allowing it to take in and retain significantly more information during conversations or analyses.
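To make the "total versus active" distinction concrete, here is a minimal sketch of top-k expert routing, the general idea behind MoE layers. It is a toy illustration, not Tencent's implementation: the expert count, the value of `k`, the scalar "experts", and all function names are assumptions made for the example. Only the 406B and 32B figures come from the announcement.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts' weights sum to 1.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_logits, k):
    """Evaluate only the selected experts and mix their outputs."""
    chosen = route_top_k(router_logits, k)
    output = sum(w * experts[i](x) for i, w in chosen)
    return output, [i for i, _ in chosen]

# Toy setup (hypothetical): 8 scalar "experts", 2 active per token.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
random.seed(0)
logits = [random.gauss(0, 1) for _ in experts]
y, used = moe_forward(2.0, experts, logits, k=2)

# Figures quoted in the article: 406B total parameters, 32B active.
TOTAL_PARAMS_B = 406
ACTIVE_PARAMS_B = 32
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B

print(f"experts used for this token: {used}")
print(f"share of parameters active per token: {active_fraction:.1%}")
```

With the quoted figures, roughly 8% of the model's parameters take part in processing any single token, which is why an MoE model can be far cheaper to run than a dense model of the same total size.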

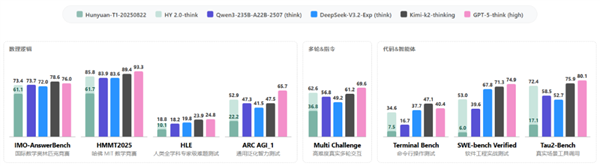

Compared to its predecessor (Hunyuan-T1-20250822), version 2.0 shows marked improvements thanks to enhanced pre-training data and refined reinforcement learning strategies. These upgrades translate to better performance in complex scenarios requiring:

- Mathematical and scientific reasoning

- Coding tasks

- Following detailed instructions

- Maintaining context in long conversations

Specialized Strengths Emerge

The model particularly shines in technical domains. Using high-quality training data and a technique called Large Rollout reinforcement learning, Hunyuan 2.0 has demonstrated exceptional performance on prestigious benchmarks including:

- IMO-AnswerBench (International Mathematical Olympiad-style problems)

- HMMT2025 (Harvard-MIT Mathematics Tournament)

- Humanity's Last Exam (HLE)

The improvements extend beyond raw reasoning ability. Tencent's engineers have focused on making the model more consistent and reliable through methods like importance sampling correction, which helps reduce the mismatch between how the model behaves during training and how it behaves at inference time.
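In its generic form, importance sampling correction reweights samples drawn under one distribution so they can be used to train or evaluate under another. The sketch below shows one common variant, a truncated per-token importance weight applied to a policy-gradient-style loss. The article does not describe Tencent's actual formulation, so the function names, the `cap` parameter, and the example numbers are all assumptions for illustration.

```python
import math

def truncated_importance_weights(train_logprobs, rollout_logprobs, cap=2.0):
    """Per-token ratio p_train / p_rollout, clipped from above at `cap`.

    train_logprobs:   log-probabilities of the sampled tokens under the
                      policy being updated.
    rollout_logprobs: log-probabilities of the same tokens under the policy
                      that generated the samples.
    """
    if len(train_logprobs) != len(rollout_logprobs):
        raise ValueError("log-prob sequences must have the same length")
    return [
        min(math.exp(lp_train - lp_rollout), cap)
        for lp_train, lp_rollout in zip(train_logprobs, rollout_logprobs)
    ]

def corrected_loss(train_logprobs, rollout_logprobs, advantages, cap=2.0):
    """Mean of -w * A * log p_train over tokens.

    In a real training loop the weight w would be treated as a constant
    (no gradient flows through it); here everything is a plain float.
    """
    weights = truncated_importance_weights(train_logprobs, rollout_logprobs, cap)
    terms = [-w * a * lp for w, a, lp in zip(weights, advantages, train_logprobs)]
    return sum(terms) / len(terms)

# Hypothetical numbers: the two policies agree on token 0 and diverge on 1 and 2.
train_lp = [-0.5, -1.2, -0.1]
rollout_lp = [-0.5, -2.5, -0.3]
advantages = [1.0, 1.0, -0.5]

weights = truncated_importance_weights(train_lp, rollout_lp)
loss = corrected_loss(train_lp, rollout_lp, advantages)
print("weights:", [round(w, 3) for w in weights])
print("loss:", round(loss, 4))
```

When the two policies agree, every weight is 1 and the loss reduces to the uncorrected form. The cap keeps a single badly mismatched token from dominating the update.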

Practical Applications Expand

For developers and businesses, the most practical development is the model's improved handling of:

- Complex coding environments (Agentic Coding)

- Sophisticated tool integration

- Multi-step problem solving (SWE-bench Verified, Tau2-Bench)

Tencent Cloud has already opened access to related APIs and platform services, allowing third parties to build on these capabilities.

Key Points:

- Architecture upgrade: MoE design with 406B total parameters (32B active)

- Longer context: Supports 256K-token context windows for longer conversations and complex tasks

- Performance leaps: Shows particular strength in math/science reasoning and coding

- Ecosystem integration: Available now in Tencent apps with APIs open for developers