Tencent Releases Hunyuan World Model 1.1 with Breakthrough 3D Tech

Tencent Unveils Advanced Hunyuan World Model 1.1

Tencent has officially released and open-sourced Hunyuan World Model 1.1 (WorldMirror), representing a major leap forward in 3D reconstruction technology. This upgraded version introduces substantial improvements in processing speed, input flexibility, and deployment efficiency.

Key Advancements

The new model turns professional 3D reconstruction into an accessible tool for general users. It can generate production-ready 3D scenes from videos or images within seconds, a task that traditionally required specialized equipment and hours of processing time.

Building on its predecessor (released July 2025), version 1.1 establishes itself as:

- The first open-source navigable world generation model compatible with traditional CG pipelines

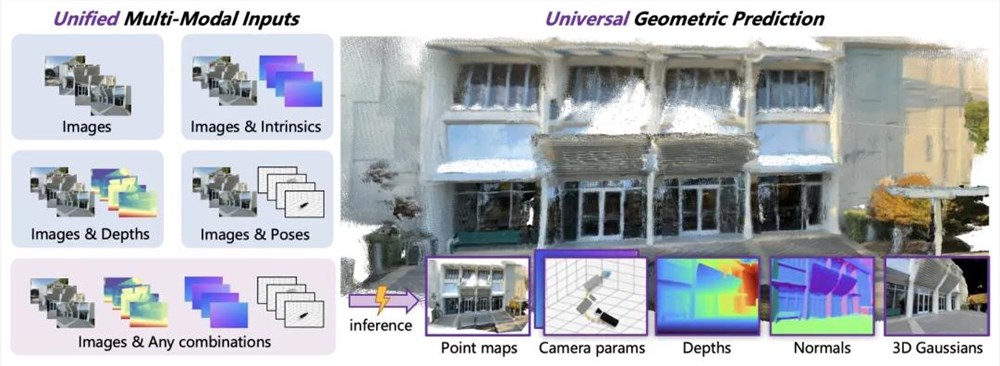

- Capable of end-to-end 3D reconstruction with multimodal prior injection

- Featuring unified output for multiple tasks

Technical Specifications

The model boasts three core capabilities:

- Flexible input processing: Handles various media formats including multi-view images and video streams

- General 3D visual prediction: Delivers comprehensive geometric outputs including point clouds and depth maps

- Single-GPU deployment: Runs inference in a matter of seconds on a single card of standard hardware

The implementation uses a multimodal prior guidance mechanism: camera poses, camera intrinsics (internal parameters), and depth maps can be supplied as additional inputs to help keep the reconstruction geometrically accurate.
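To make the relationship between these priors concrete, the sketch below shows the standard pinhole back-projection that ties a depth map, camera intrinsics, and a camera pose to a point cloud. It is not WorldMirror's code or API. The function name `unproject_depth`, the parameter names, and the camera-to-world matrix convention are assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def unproject_depth(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    cam_to_world: Sequence[Sequence[float]],
) -> List[Point]:
    """Lift a depth map to world-space points using a pinhole camera model.

    depth[v][u] is the z-distance at pixel column u, row v.
    fx, fy, cx, cy are the camera intrinsics (focal lengths, principal point).
    cam_to_world is a 4x4 row-major pose matrix (assumed convention).
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                # Non-positive depth marks an invalid pixel; skip it.
                continue
            # Back-project the pixel into camera coordinates.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            cam = (x, y, z, 1.0)
            # Apply the rigid camera-to-world transform (first three rows).
            world = tuple(
                sum(cam_to_world[r][c] * cam[c] for c in range(4))
                for r in range(3)
            )
            points.append(world)
    return points


if __name__ == "__main__":
    identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
    toy_depth = [[2.0, 2.0], [2.0, 0.0]]  # one invalid pixel
    print(unproject_depth(toy_depth, 1.0, 1.0, 0.5, 0.5, identity))
```

Because the three quantities are linked by this geometry, supplying any of them as a prior constrains the others, which is one plausible reason such inputs help geometric accuracy.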

Performance Metrics

Compared to conventional methods, the pure feedforward architecture provides:

- Complete inference in ~1 second for typical inputs (8-32 views)

- Real-time application readiness

- Significantly reduced processing time, because all 3D attributes are output in a single forward pass
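Since the quoted timing applies to inputs of roughly 8 to 32 views, a video usually has to be reduced to a handful of frames before reconstruction. The article does not describe how the model's own preprocessing selects frames, so the following is only a minimal sketch of one common approach, evenly spaced sampling. The helper name `sample_view_indices` is hypothetical.

```python
from typing import List


def sample_view_indices(num_frames: int, num_views: int) -> List[int]:
    """Pick up to num_views frame indices spread evenly across a clip."""
    if num_frames <= 0 or num_views <= 0:
        raise ValueError("num_frames and num_views must be positive")
    if num_views >= num_frames:
        # Not enough frames to subsample: use every frame.
        return list(range(num_frames))
    if num_views == 1:
        # A single view: take the middle frame.
        return [num_frames // 2]
    # Spacing that places the first and last samples on the clip boundaries.
    step = (num_frames - 1) / (num_views - 1)
    return [round(i * step) for i in range(num_views)]


if __name__ == "__main__":
    # For example, a 10-second clip at 30 fps reduced to 8 views.
    print(sample_view_indices(300, 8))
```

Even spacing is a simple default. A real pipeline might instead choose frames by camera motion or sharpness.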

The technical architecture combines:

- Multimodal prior prompts

- General geometric prediction framework

- Curriculum learning strategy

This combination maintains parsing accuracy even in complex real-world environments.

Availability & Applications

The model is now accessible through:

- GitHub for local deployment

- Hugging Face Space for online experimentation

Industry analysts predict broad impact across:

- Virtual reality development

- Game design pipelines

- Architectural visualization

The release represents a milestone in democratizing advanced 3D reconstruction technology.

Key Points:

- Generates professional 3D scenes from video/images in seconds

- Open-source implementation available immediately

- Compatible with standard CG workflows

- Processes multiple input types simultaneously

- Optimized for single-GPU deployment