Robots gain human-like vision with breakthrough depth perception model

Robots Finally See Through Glass Like Humans Do

Imagine a robot bartender confidently pouring drinks without fumbling glassware, or an industrial arm precisely handling shiny metal parts. This futuristic vision just got closer to reality thanks to Ant Lingbo Technology's groundbreaking spatial perception model.

Seeing the Invisible

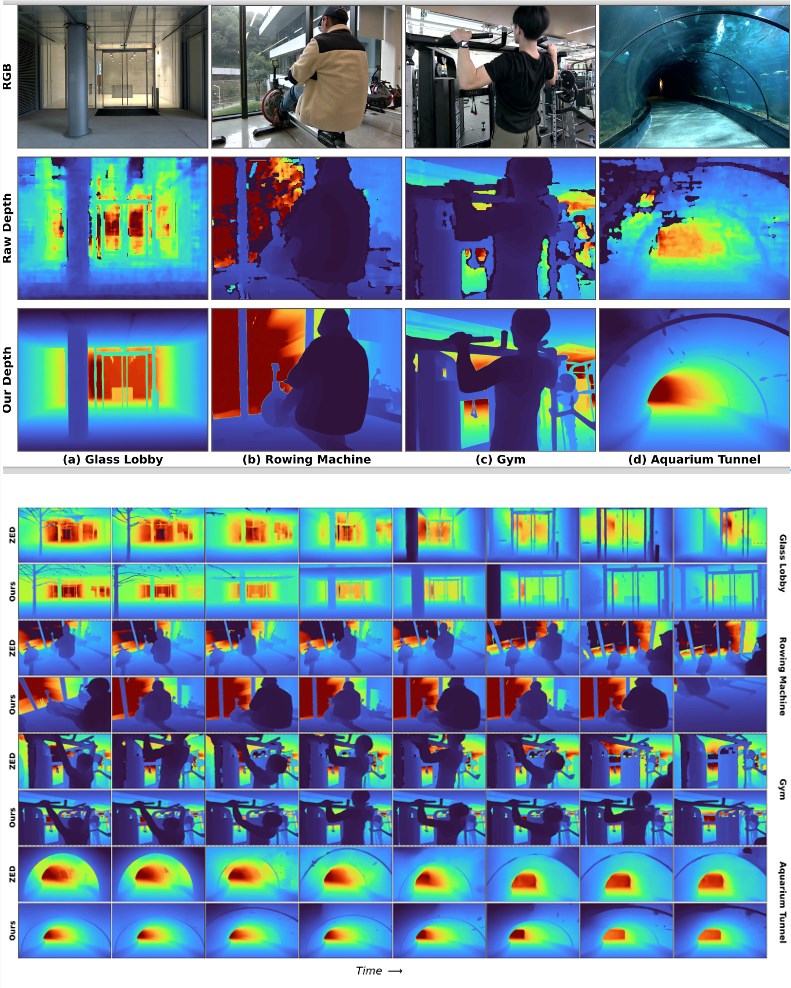

The newly open-sourced LingBot-Depth solves what engineers call the "glass ceiling" of robotics, quite literally. Traditional depth cameras struggle with transparent surfaces such as windows and reflective materials such as stainless steel, often registering them as empty space or distorted shapes.
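For context, a stereo depth camera estimates distance by triangulation: it matches each pixel between its left and right images, and the horizontal offset (disparity d, in pixels) gives depth Z = f × B / d, where f is the focal length in pixels and B is the distance between the two lenses. On glass or mirrors the matcher often cannot find a reliable correspondence, so that pixel gets no valid depth at all. The minimal sketch below shows the conversion; the function name, the focal length and baseline values, and the convention of marking failed matches with 0 are illustrative assumptions, not Gemini330 specifications.

```python
def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) into a depth map (metres).

    Assumption for this sketch: pixels where stereo matching failed
    carry a disparity of 0 (or less) and are reported as depth 0.0,
    a common "no data" convention.
    """
    depth = []
    for row in disparity:
        out_row = []
        for d in row:
            if d <= 0:
                out_row.append(0.0)  # no valid match, e.g. on a glass surface
            else:
                out_row.append(focal_px * baseline_m / d)  # Z = f * B / d
        depth.append(out_row)
    return depth


# Hypothetical camera: 600 px focal length, 5 cm baseline.
disp = [[30.0, 0.0],
        [15.0, 60.0]]
print(disparity_to_depth(disp, focal_px=600.0, baseline_m=0.05))
```

Larger disparity means a closer object, which is why depth is inversely proportional to d; the zero in the map is exactly the kind of hole a completion model has to fill.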

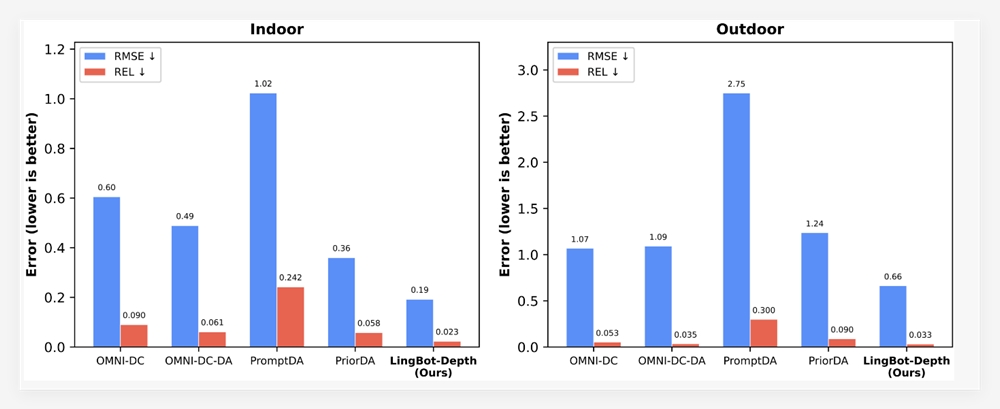

Caption: LingBot-Depth (far right) dramatically outperforms other models in completing missing depth information

"It's like giving robots X-ray vision," explains Dr. Wei Zhang, lead researcher on the project. "Where current systems see gaps or noise when looking at a wine glass, our model reconstructs the complete three-dimensional shape."

How It Works

The secret sauce is a technique called Masked Depth Modeling (MDM). When the stereo camera misses depth data, for example because of a mirror's reflection, LingBot-Depth fills in the blanks using cues from the color image and its understanding of the surrounding scene.
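MDM is a learned model, and the article does not describe its internals. To make the idea of "filling in the blanks from color clues" concrete, the sketch below swaps in a much simpler, classical technique: a color-guided weighted average in the spirit of joint bilateral filtering. Each missing depth pixel (marked 0.0) borrows depth from valid neighbors, trusting neighbors with a similar color more. The function name, window radius and `sigma_color` value are hypothetical choices for illustration only.

```python
import math


def color_guided_fill(depth, rgb, radius=1, sigma_color=20.0):
    """Fill missing depth pixels (value 0.0) from valid neighbours.

    Each neighbour's weight decays with its colour distance from the
    missing pixel, so depth is borrowed mainly from pixels that look
    like the same surface. A classical stand-in, not the learned MDM.
    """
    h, w = len(depth), len(depth[0])
    filled = [row[:] for row in depth]
    for y in range(h):
        for x in range(w):
            if depth[y][x] > 0:
                continue  # valid measurement, keep as-is
            num = den = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < h and 0 <= nx < w):
                        continue  # outside the image
                    if depth[ny][nx] <= 0:
                        continue  # neighbour is also a hole
                    # squared Euclidean distance in RGB space
                    dist2 = sum((a - b) ** 2 for a, b in zip(rgb[y][x], rgb[ny][nx]))
                    weight = math.exp(-dist2 / (2 * sigma_color ** 2))
                    num += weight * depth[ny][nx]
                    den += weight
            if den > 0:
                filled[y][x] = num / den  # holes with no valid neighbour stay 0.0
    return filled


# Toy 1x3 scene: the hole in the middle has the same grey as the left
# pixel (same surface) and differs from the black pixel on the right.
depth = [[1.0, 0.0, 3.0]]
rgb = [[(200, 200, 200), (200, 200, 200), (0, 0, 0)]]
print(color_guided_fill(depth, rgb))  # middle value ends up very close to 1.0
```

A learned model can draw on far wider scene context than this 3×3 window; the sketch only illustrates the basic intuition of letting color decide which neighboring measurements to trust.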

Paired with Orobote's Gemini330 stereo cameras, the system achieves:

- 70% fewer errors than competing models in indoor scenes

- 47% improvement on sparse mapping tasks

- Crystal-clear edges on complex curved surfaces

Caption: Top: LingBot-Depth's clean reconstruction. Bottom: it compares favorably against industry leader ZED.
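Figures such as "70% fewer errors" are usually relative reductions in an error metric: (baseline error − new error) / baseline error. The article does not name the metric, so the sketch below assumes root-mean-square error (RMSE) computed only over pixels that have valid ground truth; the depth values are made up purely to show the arithmetic.

```python
import math


def rmse(pred, gt):
    """Root-mean-square error over pixels whose ground truth is valid (> 0)."""
    errs = [(p - g) ** 2 for p, g in zip(pred, gt) if g > 0]
    return math.sqrt(sum(errs) / len(errs))


def relative_reduction(baseline_err, new_err):
    """Fractional error reduction, e.g. 0.7 means '70% fewer errors'."""
    return (baseline_err - new_err) / baseline_err


# Hypothetical flattened depth maps in metres (0.0 = no ground truth).
gt = [1.0, 2.0, 0.0, 4.0]
baseline = [1.5, 2.5, 9.9, 3.5]  # every valid pixel is off by 0.5 m
ours = [1.15, 1.85, 0.0, 4.15]   # every valid pixel is off by 0.15 m
r = relative_reduction(rmse(baseline, gt), rmse(ours, gt))
print(f"{r:.0%} lower RMSE")
```

The pixel without ground truth is skipped, so the baseline's wild 9.9 m guess there does not count against it; how such pixels are handled can noticeably change published percentages.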

Real-World Ready

The team didn't just test in labs. They collected 10 million real-world samples, from sunlit windows to crowded restaurant tabletops, and distilled them into 2 million high-quality training pairs. This massive dataset will soon be available to researchers worldwide.
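The article does not explain how the 10 million raw samples were reduced to 2 million pairs. One common curation step for sensor recordings is discarding near-duplicate frames captured while the camera barely moved. The sketch below illustrates only that general idea; treating samples as time-ordered depth frames, the change metric and the 5 cm threshold are all hypothetical and not the team's documented pipeline.

```python
def mean_abs_change(a, b):
    """Mean |a - b| over pixels valid (> 0) in both depth maps."""
    diffs = [abs(x - y) for x, y in zip(a, b) if x > 0 and y > 0]
    return sum(diffs) / len(diffs) if diffs else float("inf")


def drop_near_duplicates(frames, min_change=0.05):
    """Keep a frame only if it differs enough from the last kept frame.

    `frames` is a time-ordered list of flattened depth maps (metres,
    0.0 = missing). Hypothetical curation step for illustration.
    Frames sharing no valid pixels with the last kept frame are kept.
    """
    kept = []
    for frame in frames:
        if not kept or mean_abs_change(kept[-1], frame) >= min_change:
            kept.append(frame)
    return kept


frames = [
    [1.00, 2.00, 0.0],
    [1.01, 2.01, 0.0],  # nearly identical to the previous frame
    [1.50, 2.40, 3.0],  # camera moved
]
print(len(drop_near_duplicates(frames)))  # 2
```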

Industrial partners are already excited. "This could transform quality control in glass manufacturing," notes Lisa Chen from Precision Robotics Inc. "No more workarounds for shiny surfaces."

What's Next

Ant Lingbo plans to open-source more embodied intelligence models this week, while Orobote prepares new hardware leveraging these advances. The race is on to bring this human-like vision to everything from self-driving cars to smart home assistants.

Key Points:

- Breakthrough accuracy: Handles transparent/reflective objects better than humans in some tests

- Open-source advantage: Free for researchers and developers to use and build on

- Hardware compatible: Works with existing stereo cameras such as the Gemini330 series

- Coming soon: Massive training dataset will be publicly available