Qwen3-Next Model Achieves 10x Speed Boost with Fewer Active Parameters

Alibaba's Qwen3 Model Revolutionizes AI Efficiency

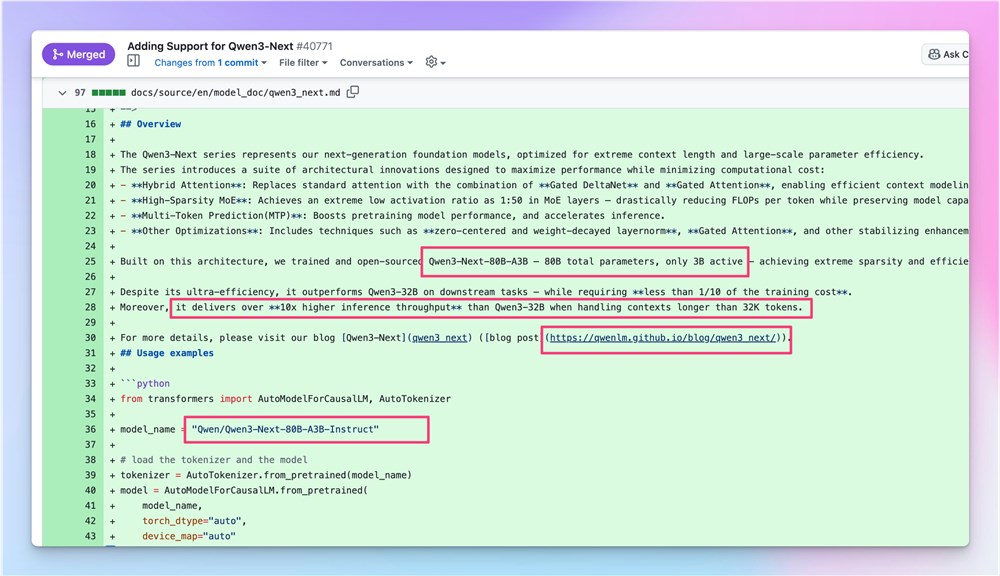

The AI research community is buzzing with excitement over Alibaba's latest breakthrough in large language models. The Qwen3-Next-80B-A3B-Instruct model takes an unusually sparse approach: it has 80 billion parameters in total but activates only about 3 billion of them for each token it processes.

Technical Breakthrough Explained

The key innovation lies in the model's Mixture of Experts (MoE) architecture. Instead of running every parameter on every input, a small learned router picks a handful of specialized expert sub-networks for each token, and only those experts are computed. The effect resembles a team of specialists in which only the relevant experts weigh in on each question, which sharply reduces the computation needed per token.
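To make the routing idea concrete, here is a minimal sketch of top-k expert routing for a single token in plain Python. It is not Qwen3-Next's implementation: the router weights, the toy experts (which simply scale their input), the dimensions, and the helper names `moe_forward` and `make_expert` are all hypothetical. A real MoE layer uses learned feed-forward experts, far more of them, and batched tensor operations.

```python
import math
import random
from typing import Callable, List

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], k: int) -> Vector:
    """Send one token vector x to its top-k experts and mix their outputs.

    router[i] is a (hypothetical) weight vector that scores expert i.
    Only the k best-scoring experts are called, so the expensive work
    grows with k rather than with len(experts).
    """
    # Cheap gating step: one dot-product score per expert.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # Indices of the k highest-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights, normalized over the selected experts only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # the only expert computations that happen
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


def make_expert(scale: float, calls: List[int], idx: int) -> Expert:
    """Toy stand-in for an expert network: scales its input and counts calls."""
    def expert(v: Vector) -> Vector:
        calls[idx] += 1
        return [scale * vi for vi in v]
    return expert


if __name__ == "__main__":
    random.seed(0)
    dim, n_experts, k = 4, 8, 2
    calls = [0] * n_experts
    experts = [make_expert(float(i + 1), calls, i) for i in range(n_experts)]
    router = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_experts)]
    y = moe_forward([1.0, 0.5, -0.5, 2.0], router, experts, k)
    print("experts run:", sum(calls), "of", n_experts)  # experts run: 2 of 8
    print("output:", [round(v, 3) for v in y])
```

Taking a softmax over only the selected scores is mathematically the same as taking a softmax over all experts and then renormalizing the top-k gate values, which is one common way MoE layers weight the experts they pick.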

Performance Gains

Early testing shows remarkable improvements:

- Roughly 10x higher inference throughput than the dense Qwen3-32B model, with the largest gains on long inputs

- Superior handling of long-context sequences (32K+ tokens)

- Maintains strong performance in code generation, mathematical reasoning, and multilingual tasks
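A back-of-envelope compute estimate shows why a speedup of this order is plausible. The sketch below uses the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass; parameter counts are rounded to the figures in the model names. This is an assumption-laden illustration, not a reproduction of the reported benchmark: it ignores attention cost, memory bandwidth, and serving details, all of which affect real throughput.

```python
def forward_flops_per_token(active_params: float) -> float:
    """Rough forward-pass cost: ~2 FLOPs (one multiply, one add) per active parameter.

    Ignores attention over the context, memory bandwidth, batching, and
    kernel efficiency, so it understates real cost and is not a throughput model.
    """
    return 2.0 * active_params


DENSE_PARAMS = 32e9  # Qwen3-32B (rounded): every parameter is used for every token
MOE_TOTAL = 80e9     # Qwen3-Next-80B-A3B: all experts are stored...
MOE_ACTIVE = 3e9     # ...but only about 3B parameters are used per token

dense = forward_flops_per_token(DENSE_PARAMS)
moe = forward_flops_per_token(MOE_ACTIVE)
print(f"active fraction: {MOE_ACTIVE / MOE_TOTAL:.2%}")  # active fraction: 3.75%
print(f"compute ratio: {dense / moe:.1f}x")              # compute ratio: 10.7x
```

The estimate lines up with an order-of-magnitude gain, but measured throughput also depends on the attention design and hardware, so the reported figure should not be read as following from this arithmetic alone.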

The model has already been integrated into Hugging Face's Transformers library, making this technology immediately accessible to developers worldwide.

Cost and Accessibility Benefits

The efficiency gains extend beyond just performance:

- Training cost reduced to less than one-tenth that of Qwen3-32B

- Lower per-token compute eases deployment on more modest hardware, although all 80 billion weights must still be held in memory

- Opens possibilities for smaller organizations to leverage cutting-edge AI
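Sparse activation cuts compute per token but not storage: the router may choose any expert for the next token, so every expert has to be loaded (or offloaded and fetched back, at a speed cost). The sketch below estimates weight memory for the 80B total at a few precisions. It is plain arithmetic rather than a measurement; the precision table is an assumption, real quantized checkpoints also carry scales and other metadata, and serving adds a KV cache on top.

```python
# Bytes needed to store one parameter at common precisions (assumed values).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(total_params: float, precision: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Excludes the KV cache, activations, and quantization metadata such as scales.
    """
    return total_params * BYTES_PER_PARAM[precision] / 1e9


for precision in ("bf16", "int8", "int4"):
    # Every expert must be resident, so use the 80B total, not the 3B active.
    print(f"{precision}: ~{weight_memory_gb(80e9, precision):.0f} GB")
# bf16: ~160 GB
# int8: ~80 GB
# int4: ~40 GB
```

Even at 4-bit precision the weights alone need on the order of 40 GB, which is why the compute savings matter most for servers and workstations rather than phones.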

Industry Impact

This development represents more than just incremental progress:

- Democratizes access to powerful AI models

- Reduces environmental impact through efficient computation

- Enables new applications previously limited by hardware constraints

The open-source release should encourage widespread adoption and continued innovation built upon this foundation.

Key Points:

- Revolutionary MoE architecture activates only about 3B of its 80B parameters per token

- Delivers roughly 10x higher throughput than Qwen3-32B

- Significant reduction in training and deployment costs

- Full open-source availability accelerates ecosystem development

- Potential to reshape edge computing and mobile AI applications