NVIDIA Makes AI Fine-Tuning Easier Than Ever

NVIDIA Democratizes AI Model Customization

Fine-tuning is no longer reserved for well-funded AI labs. NVIDIA's latest release puts language model fine-tuning within reach of individual developers and enthusiasts. Its guide walks users through the entire process using Unsloth, an open-source framework optimized for NVIDIA hardware.

Unsloth: Turbocharging Your GPU

This open-source framework is built for speed. Designed specifically for NVIDIA GPUs, Unsloth uses CUDA and the Tensor Core architecture to deliver substantial performance gains:

- 2.5x faster training than standard implementations

- Dramatically reduced memory consumption

- Professional-quality results achievable on RTX 4090 laptops

"What used to require multi-GPU servers can now run on your coffee shop laptop," explains one early tester.

Three Paths to Perfect Tuning

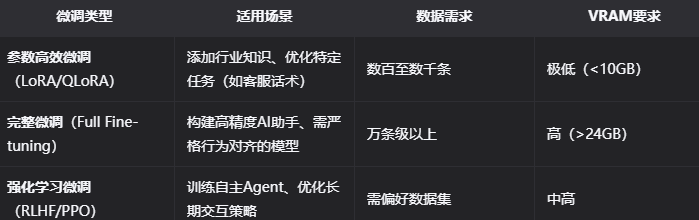

The guide doesn't take a one-size-fits-all approach. Instead, it carefully compares three mainstream methods:

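- Full Fine-Tuning - Updates every weight in the model, for maximum accuracy when you have ample resources

- LoRA - A balanced approach that trains small low-rank adapter matrices while the original weights stay frozen, preserving the model's existing knowledge

- QLoRA - LoRA applied on top of a 4-bit quantized base model, a memory-efficient option well suited to consumer hardware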
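To see why LoRA preserves what the original model already knows, here is a minimal sketch in plain Python. It is not Unsloth's implementation or anything taken from NVIDIA's guide: the `LoRALinear` class, the `lora_param_count` helper, and the constant initial values are all illustrative assumptions. The base weight matrix stays frozen, and a low-rank pair of matrices `A` and `B` is added on top. Because `B` starts at zero, the layer initially behaves exactly like the original model.

```python
# Illustrative LoRA-style layer in plain Python (no GPU, no libraries).
# All names are hypothetical and not part of Unsloth or NVIDIA tooling.

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_param_count(d_in, d_out, rank):
    """Trainable parameters in one adapter: A is (rank, d_in), B is (d_out, rank)."""
    return rank * (d_in + d_out)


class LoRALinear:
    def __init__(self, base_weight, rank, alpha=1.0):
        self.base_weight = base_weight  # frozen, shape (d_out, d_in)
        d_out, d_in = len(base_weight), len(base_weight[0])
        self.scale = alpha / rank
        # Real implementations usually draw A at random; a constant is used
        # here so the example is deterministic. B starts at zero, so the
        # adapter contributes nothing until training updates it.
        self.A = [[0.01] * d_in for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base_out = matvec(self.base_weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base_out, delta)]

    def trainable_params(self):
        d_out, d_in = len(self.base_weight), len(self.base_weight[0])
        return lora_param_count(d_in, d_out, len(self.A))


W = [[1.0, 2.0, 3.0, 4.0],
     [0.5, 0.0, -1.0, 2.0],
     [2.0, 1.0, 0.0, -0.5]]
layer = LoRALinear(W, rank=2, alpha=2.0)
x = [1.0, 1.0, 1.0, 1.0]

print(layer.forward(x) == matvec(W, x))    # adapter is a no-op before training
print(lora_param_count(4096, 4096, 16))    # adapter size for one 4096x4096 layer
print(4096 * 4096)                         # full weight count for the same layer
```

On a toy 3x4 matrix the adapter is not smaller than the layer itself. The saving only appears at realistic sizes, where the rank is much smaller than the layer dimensions.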

The beauty? You can start small with a 7B model on an RTX 3060 using QLoRA, then scale up as needed.
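A rough back-of-the-envelope estimate shows why QLoRA is the method that fits a 7B model on a consumer card. The sketch below is an assumption-laden approximation, not a figure from NVIDIA's guide. It assumes 16 bytes per trainable parameter (fp16 weight, fp16 gradient, fp32 master copy, and two fp32 Adam moments), 2 bytes per frozen fp16 parameter, and 0.5 bytes per frozen 4-bit parameter. It also assumes a hypothetical 40 million adapter parameters and a 12 GiB card, and it ignores activations, quantization metadata, and framework overhead, so real usage will be higher.

```python
# Rule-of-thumb memory estimate for weights, gradients and optimizer state.
# Every constant below is an assumption, not a measurement.

GIB = 1024 ** 3

BYTES_FP16 = 2.0        # frozen half-precision weight
BYTES_4BIT = 0.5        # frozen 4-bit quantized weight
BYTES_TRAINABLE = 16.0  # fp16 weight + fp16 grad + fp32 master + 2 fp32 Adam moments


def estimate_gib(method, base_params, adapter_params=0):
    """Approximate memory in GiB, excluding activations and overhead."""
    if method == "full":
        total = base_params * BYTES_TRAINABLE
    elif method == "lora":
        total = base_params * BYTES_FP16 + adapter_params * BYTES_TRAINABLE
    elif method == "qlora":
        total = base_params * BYTES_4BIT + adapter_params * BYTES_TRAINABLE
    else:
        raise ValueError(f"unknown method: {method}")
    return total / GIB


BASE_PARAMS = 7e9       # a 7B model
ADAPTER_PARAMS = 40e6   # hypothetical adapter size
VRAM_BUDGET_GIB = 12    # assumed memory on the card

for method in ("full", "lora", "qlora"):
    needed = estimate_gib(method, BASE_PARAMS, ADAPTER_PARAMS)
    verdict = "fits" if needed < VRAM_BUDGET_GIB else "does not fit"
    print(f"{method:>5}: {needed:6.1f} GiB ({verdict} in {VRAM_BUDGET_GIB} GiB)")
```

Under these assumptions, full fine-tuning needs more than 100 GiB, fp16 LoRA slightly exceeds the 12 GiB budget, and QLoRA comes in well under it. That ordering matches the progression described above.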

Ready-to-Run Resources Lower Barriers Further

NVIDIA understands that theory means little without practical tools. That's why they've included:

- Pre-configured Docker images

- Hands-on Colab examples

- Step-by-step optimization tips

The message is clear: AI customization shouldn't be gatekept by tech giants anymore.

Key Points:

- Unsloth delivers a 2.5x speed boost on RTX GPUs

- Complete tutorials make professional tuning accessible

- Three methods adapt to different needs and budgets

- Consumer hardware can now handle fine-tuning work that once required server setups