MiniMax Opens Up Its M2.5 Model - A Game Changer for Affordable AI Agents

MiniMax Breaks New Ground With Open-Source M2.5 Model

The AI landscape just got more interesting with MiniMax's latest release. Their M2.5 model isn't just another incremental update: it pairs near-frontier coding performance with a price far below that of comparable models.

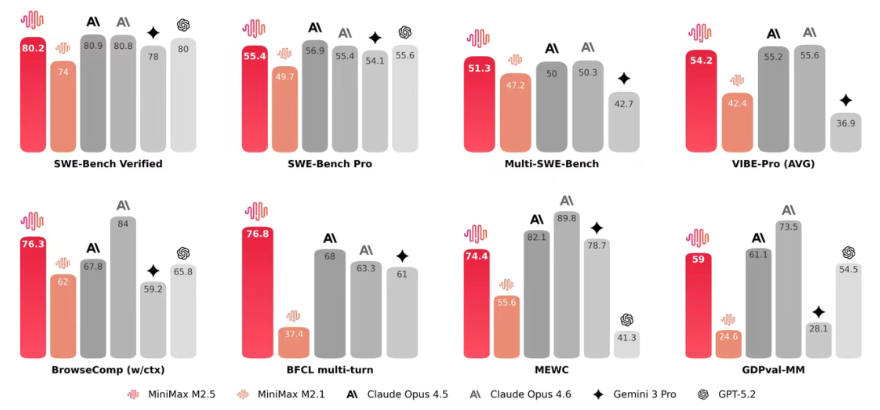

Performance That Turns Heads

Benchmark results tell an impressive story. The M2.5 scores 80.2% on SWE-Bench Verified, edging out GPT-5.2 and coming close to Claude Opus 4.5's performance. But here's the kicker: it does this at just one-tenth the cost of comparable models.

"We're seeing architectural-level planning capabilities emerge," explains Dr. Li Wei, MiniMax's lead researcher. "The model handles everything from initial concept to final deployment across multiple platforms."

In practical terms:

- Reduces search iterations by 20%

- Excels at expert-level research tasks

- Integrates specialized knowledge for finance and legal applications

- Processes requests 37% faster than previous versions

Under the Hood: What Powers This Leap Forward?

The secret sauce lies in three key innovations:

- Forge Framework: Accelerates training by an astonishing 40x

- CISPO Algorithm: Keeps large-scale training stable while tackling the tricky problem of assigning credit across long contexts

- Smart Reward Design: Balances performance with response speed

The result? Within MiniMax itself, M2.5 now completes 30% of daily tasks and generates 80% of newly committed code.
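The article does not say how the reward is actually constructed, so the following is only a minimal sketch of how a reward could trade task quality against response speed. The function name `shaped_reward`, the linear latency penalty, and the `latency_budget_s` and `latency_weight` parameters are all assumptions made for illustration, not MiniMax's design.

```python
def shaped_reward(task_score: float, latency_s: float,
                  latency_budget_s: float = 60.0,
                  latency_weight: float = 0.2) -> float:
    """Combine a task score in [0, 1] with a penalty for slow responses.

    Hypothetical illustration: responses within the latency budget are not
    penalized; slower ones lose reward in proportion to the overshoot,
    capped at `latency_weight`.
    """
    if not 0.0 <= task_score <= 1.0:
        raise ValueError("task_score must be in [0, 1]")
    # Fraction by which the response exceeded the budget (0 if it was on time).
    overshoot = max(0.0, latency_s - latency_budget_s) / latency_budget_s
    penalty = latency_weight * min(1.0, overshoot)
    return task_score - penalty


if __name__ == "__main__":
    # Same task quality, different speeds: the faster answer earns more.
    print(shaped_reward(task_score=1.0, latency_s=30.0))
    print(shaped_reward(task_score=1.0, latency_s=90.0))
```

Capping the penalty keeps a correct but slow answer ahead of a fast but wrong one, which is one plausible reading of "balancing" the two goals.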

Deployment Made Simple (Finally)

MiniMax understands that one size doesn't fit all when it comes to AI implementation:

- No-code users: Jump right in with the web interface (complete with thousands of pre-built "Experts")

- Developers: Choose between free API access and premium options on ModelScope

- Enterprise needs: Local deployment supports everything from high-volume production to Mac-based development

The pricing structure deserves special mention: with options costing just 5-10% of comparable solutions, cost is no longer the main barrier to entry.
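To make the 5-10% figure concrete, here is a small back-of-the-envelope sketch. The baseline spend is a made-up number, and `estimated_cost_range` is a hypothetical helper; no actual prices are given in this article.

```python
def estimated_cost_range(baseline_cost: float,
                         low_pct: float = 5.0,
                         high_pct: float = 10.0) -> tuple[float, float]:
    """Return the (low, high) spend implied by a 5-10% cost ratio."""
    if baseline_cost < 0:
        raise ValueError("baseline_cost must be non-negative")
    return (baseline_cost * low_pct / 100.0,
            baseline_cost * high_pct / 100.0)


if __name__ == "__main__":
    # Hypothetical baseline: 1,000 currency units per month on a comparable model.
    low, high = estimated_cost_range(1000.0)
    print(f"Estimated M2.5 spend: {low:.2f} to {high:.2f}")
```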

Specialized Tools Get Special Treatment

The M2.5 shines when working with external tools:

- Native support for parallel tool calls

- OpenAI SDK compatibility when the model is served through vLLM or SGLang, both of which expose OpenAI-compatible API endpoints

- Comprehensive guides for optimizing tool interactions

The team even provides recommended parameter configurations out of the box, though they encourage experimentation based on specific use cases.
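For readers wondering what parallel tool calls look like on the client side, here is a minimal sketch. It assumes the reply follows the OpenAI Chat Completions tool-calling message shape, which is what an OpenAI-compatible server returns. The sample message is hard-coded rather than fetched from a model, and the `get_weather` and `get_time` tools are hypothetical stubs.

```python
import json
from concurrent.futures import ThreadPoolExecutor


# Hypothetical local tools; real ones would call external services.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


def get_time(timezone: str) -> str:
    return f"12:00 in {timezone}"


TOOLS = {"get_weather": get_weather, "get_time": get_time}


def run_tool_call(call: dict) -> dict:
    """Execute one tool call and wrap the result as a `tool` message."""
    fn = TOOLS[call["function"]["name"]]
    # In the Chat Completions format, arguments arrive as a JSON string.
    args = json.loads(call["function"]["arguments"])
    return {"role": "tool", "tool_call_id": call["id"], "content": fn(**args)}


def run_parallel_tool_calls(assistant_message: dict) -> list[dict]:
    """Run every tool call in the message concurrently, preserving order."""
    calls = assistant_message.get("tool_calls") or []
    if not calls:
        return []
    with ThreadPoolExecutor(max_workers=len(calls)) as pool:
        return list(pool.map(run_tool_call, calls))


# Hard-coded stand-in for a model reply that requests two tools at once.
SAMPLE_MESSAGE = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather",
                      "arguments": '{"city": "Shanghai"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_time",
                      "arguments": '{"timezone": "Asia/Shanghai"}'}},
    ],
}

if __name__ == "__main__":
    for message in run_parallel_tool_calls(SAMPLE_MESSAGE):
        print(message)
```

In a real exchange, the returned `tool` messages would be appended to the conversation after the assistant message and sent back to the model so it can compose its final answer.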

Key Points:

- Cost Revolution: High performance at a fraction of traditional costs

- Flexible Access: From no-code to full local deployment

- Programming Prowess: Handles over 10 languages with architect-level thinking

- Search Superiority: Fewer iterations needed for expert results

- Continuous Evolution: Rapid iteration cycle promises more improvements ahead