Ling-flash-2.0 Launches with Record Inference Speed

Silicon-Based Flow Unveils Ling-flash-2.0 with Breakthrough Performance

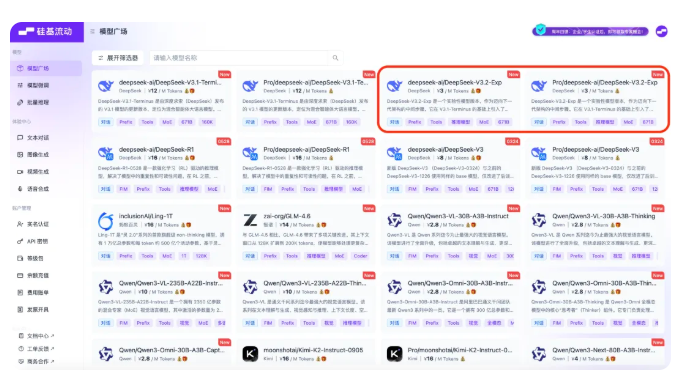

Silicon-Based Flow's large model service platform has officially launched Ling-flash-2.0, the latest open-source model from Ant Group's Bailing team. This marks the 130th model available on the platform, offering developers unprecedented capabilities in natural language processing.

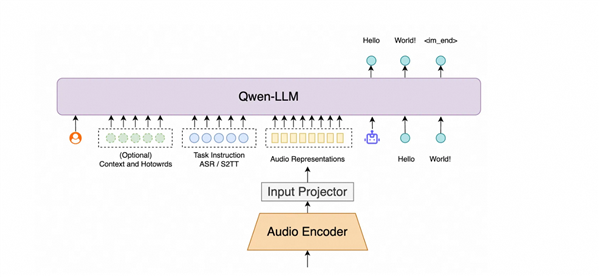

Model Architecture and Training

The MoE (Mixture of Experts) architecture powers Ling-flash-2.0, featuring:

- 10 billion total parameters

- Only 610 million parameters activated during use (480 million non-embedded)

- Trained on over 20TB of high-quality data

Through multi-stage training including pre-training, supervised fine-tuning, and reinforcement learning, the model achieves performance comparable to dense models with over 6 billion activated parameters.

Performance and Applications

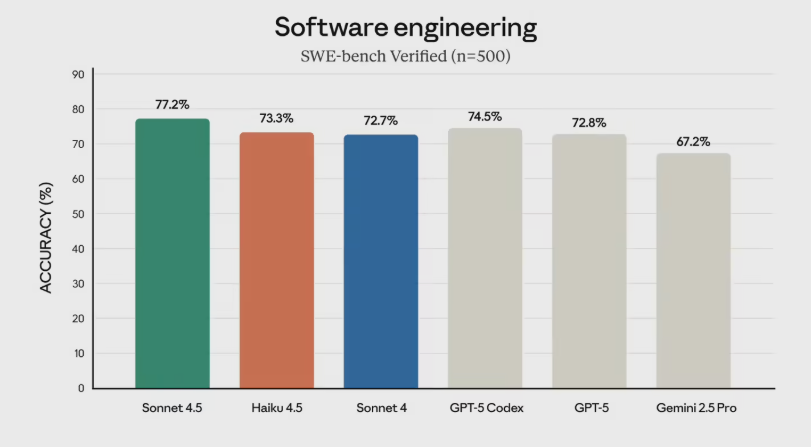

Ling-flash-2.0 excels in:

- Complex reasoning tasks

- Code generation

- Front-end development

The model supports an impressive 128K context length, significantly enhancing text processing capabilities. Pricing remains competitive at:

- 1 yuan per million tokens for input

- 4 yuan per million tokens for output

New users receive welcome credits:

- 14 yuan on domestic sites

- 1 USD on international platforms

Speed and Efficiency Advantages

The carefully optimized architecture delivers:

- Output speeds exceeding 200 tokens per second on H20 hardware

- Three times faster than comparable 36B dense models This breakthrough combines the performance advantages of dense architectures with MoE efficiency.

The Silicon Flow platform continues to expand its offerings across language, image, audio, and video models, enabling developers to: A) Compare multiple models B) Combine different AI capabilities C) Access efficient APIs for generative AI applications

Developers can experience Ling-flash-2.0 at: In China: https://cloud.siliconflow.cn/models Internationally: https://cloud.siliconflow.com/models

Key Points:

- 🚀 MoE architecture: Combines 10B total parameters with efficient activation of just 610M parameters

- ⚡️ Record speed: Processes over 200 tokens/second - triple the speed of comparable dense models 3. 💡 Advanced capabilities: Excels in complex reasoning and creative tasks with 128K context support