ByteDance Open-Sources Seed-X: A Compact 7B Translation Model


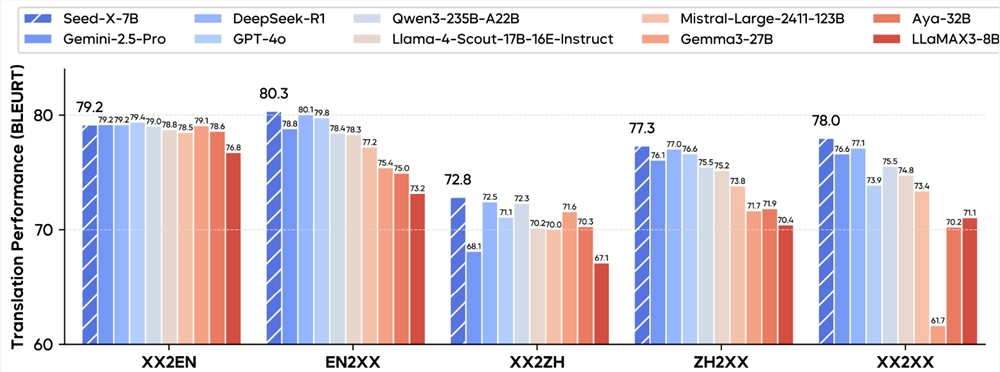

ByteDance's Seed team has open-sourced Seed-X, a compact multilingual translation model with 7 billion parameters (7B). The model supports bidirectional translation across 28 languages, including English, Chinese, Japanese, Korean, and major European languages, and the team reports performance comparable to industry-leading large models.
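Read as any-to-any support, where every supported language can be both source and target, 28 languages give 28 × 27 = 756 translation directions. The sketch below assumes that reading. The article does not say whether every pair is handled directly or only pairs involving English, and `NUM_LANGUAGES` and `count_directions` are illustrative names, not part of any Seed-X release.

```python
from itertools import permutations

NUM_LANGUAGES = 28  # language count reported for Seed-X


def count_directions(n: int) -> int:
    """Ordered (source, target) pairs among n languages, excluding same-language pairs."""
    return n * (n - 1)


if __name__ == "__main__":
    # Cross-check the closed form by enumerating ordered pairs of placeholder language IDs.
    enumerated = sum(1 for _ in permutations(range(NUM_LANGUAGES), 2))
    assert enumerated == count_directions(NUM_LANGUAGES)
    print(count_directions(NUM_LANGUAGES))  # 756
```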

Lightweight Powerhouse

Seed-X delivers strong translation quality with a streamlined architecture. According to the team's evaluations, it performs well across diverse domains, including:

- Internet and technology content

- Business communications

- E-commerce and finance

- Legal and medical texts

- Literature and entertainment

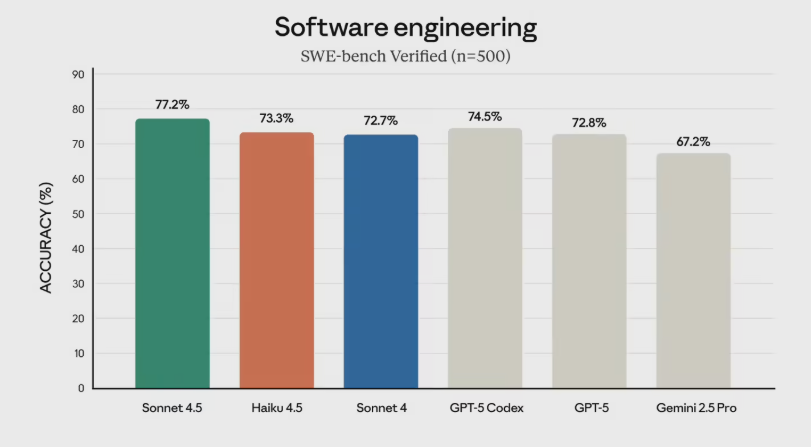

The team reports that Seed-X matches or exceeds much larger models such as Gemini-2.5, Claude-3.5, and GPT-4 on specific translation tasks.

Optimized for Efficiency

Built on the Mistral architecture, Seed-X was designed specifically for translation. The development team chose to:

- Exclude STEM, coding, and reasoning-related training data

- Focus exclusively on translation accuracy and efficiency

- Optimize for deployment in resource-constrained environments

According to human evaluations, the result is a model that performs close to DeepSeek R1 and Gemini 2.5 Pro while being significantly more efficient to run.
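A back-of-the-envelope calculation shows why a 7B model suits resource-constrained deployment. This sketch uses the article's nominal 7B figure and counts weights only. It ignores activations, the KV cache, and framework overhead, so real memory use will be higher. The precision list is a generic assumption, not a statement about which formats Seed-X ships in.

```python
PARAMS = 7e9  # nominal parameter count from the article

# Bytes per parameter for common storage precisions (generic, not Seed-X-specific).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16/fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Weights-only footprint in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision:>10}: ~{weight_memory_gb(PARAMS, nbytes):.1f} GB")
```

At bf16 the weights alone need about 14 GB, which fits on a single high-memory GPU. At 4-bit they need about 3.5 GB, within reach of many consumer cards.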

Innovative Training Approach

The Seed team used training strategies designed to minimize manual intervention:

- Built an LLM-centric data processing pipeline

- Automated the generation and filtering of high-quality training data

- Focused on maximizing multilingual generalization

The model is released on Hugging Face under the permissive MIT license, lowering the barrier to adoption for developers.
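A minimal sketch of calling the released model through the Hugging Face `transformers` library. Several details are assumptions to check against the model card on the collection page. The model ID `ByteDance-Seed/Seed-X-Instruct-7B` is assumed. The prompt format, an English instruction followed by the source text and a target-language tag such as `<zh>`, is also assumed. `LANG_TAGS`, `build_prompt`, and `translate` are hypothetical helpers. `translate` needs `transformers`, `torch`, and enough memory for a 7B model. `build_prompt` runs on its own.

```python
# Hypothetical language-tag table; confirm the exact tags on the model card.
LANG_TAGS = {"English": "en", "Chinese": "zh", "Japanese": "ja", "Korean": "ko"}


def build_prompt(text: str, src: str, tgt: str) -> str:
    """Build an instruction-style translation prompt (assumed format)."""
    if src not in LANG_TAGS or tgt not in LANG_TAGS:
        raise ValueError(f"unsupported language pair: {src} -> {tgt}")
    return f"Translate the following {src} sentence into {tgt}:\n{text} <{LANG_TAGS[tgt]}>"


def translate(text: str, src: str, tgt: str,
              model_id: str = "ByteDance-Seed/Seed-X-Instruct-7B") -> str:
    """Translate one sentence with transformers; model_id is an assumed default."""
    # Imported lazily so build_prompt works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto")
    inputs = tokenizer(build_prompt(text, src, tgt), return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=256)
    # Decode only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(build_prompt("May the force be with you", "English", "Chinese"))
```

Keeping prompt construction separate from model loading makes it easy to adjust the template if the model card specifies a different format.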

ByteDance's Growing AI Portfolio

Seed-X represents ByteDance's latest contribution to the open-source AI community, joining previous releases including:

- Multimodal model BAGEL

- Code generation model Seed-Coder

- Speech synthesis system Seed-TTS

The release reflects ByteDance's push to advance AI translation technology and offers practical tools for:

- Automated translation systems

- Cross-language content creation

- International application development

Project Homepage: https://huggingface.co/collections/ByteDance-Seed/seed-x

Key Points:

- Compact size: at 7B parameters, it is practical to deploy

- Broad language support: 28 languages with bidirectional translation

- Focused training: Specialized exclusively for translation tasks

- Open access: MIT license encourages widespread adoption

- Performance parity: reportedly matches leading models on specific translation tasks