Elastic Shows How the A2A Protocol Lets AI Agents Jam Like Musicians

Elastic's Breakthrough: AI Agents That Collaborate Like Humans

Imagine a newsroom where AI reporters, editors, and researchers work together as smoothly as a jazz ensemble. That's the vision Elastic laid out yesterday around the Agent2Agent (A2A) protocol, an open standard originally introduced by Google that could fundamentally change how multiple AI systems interact.

How It Works: Simplicity Meets Power

The magic lies in A2A's elegant design. Instead of complex integrations, agents communicate through lightweight JSON-RPC 2.0 messages sent over HTTP. No shared memory required. No tool synchronization headaches. Just clean, structured data passing between specialized AI performers.
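To make the message format concrete, here is a minimal Python sketch of the kind of JSON-RPC 2.0 request one agent might send another. It assumes the `message/send` method and the `role`/`parts`/`messageId` field names from recent versions of the A2A specification (earlier drafts used different names, such as `tasks/send`). `build_send_message_request` is a hypothetical helper, and the sketch only builds the payload rather than sending it over HTTP.

```python
import json
import uuid


def build_send_message_request(text: str, request_id: int) -> str:
    """Build a JSON-RPC 2.0 request asking a remote agent to handle a text message.

    The method name and field layout are assumptions modeled on the A2A
    spec's `message/send`; check the spec version your SDK targets.
    """
    payload = {
        "jsonrpc": "2.0",          # version marker required by JSON-RPC 2.0
        "id": request_id,          # lets the caller match the response to this request
        "method": "message/send",  # assumed A2A method name (older drafts used "tasks/send")
        "params": {
            "message": {
                "role": "user",                  # the sending side of the exchange
                "messageId": str(uuid.uuid4()),  # unique id for this message
                "parts": [{"kind": "text", "text": text}],
            }
        },
    }
    return json.dumps(payload)


if __name__ == "__main__":
    print(build_send_message_request("Draft 300 words on today's city council vote.", 1))
```

Because the payload is plain JSON, any agent that speaks HTTP can consume it, regardless of the framework it was built with.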

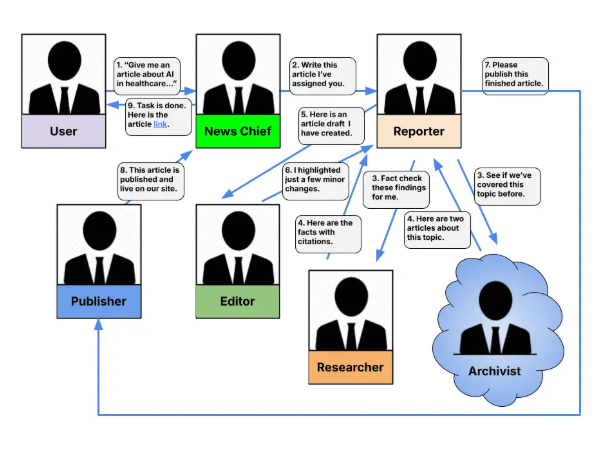

Elastic demonstrates this with its open-source "digital newsroom" example, which uses six distinct agents:

- The news director sets the agenda

- Reporters gather information

- Researchers verify facts

- The archive agent pulls historical context

- Editors refine the content

- The publisher handles distribution

Together, they complete entire news cycles autonomously through A2A's messaging framework.
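The hand-offs above can be sketched in-process. This is a hypothetical simplification, not Elastic's demo code: `AgentCard`, `Registry`, and `run_news_cycle` are illustrative names, each lambda stands in for a remote agent, and the news director's job is reduced to looking up agents by advertised skill (loosely mirroring how A2A agents publish Agent Cards that describe their skills) and passing the draft along.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentCard:
    """Minimal stand-in for an A2A Agent Card: a name plus advertised skills."""
    name: str
    skills: list[str]
    handle: Callable[[str], str]  # hypothetical in-process handler; a real agent sits behind HTTP


@dataclass
class Registry:
    cards: list[AgentCard] = field(default_factory=list)

    def register(self, card: AgentCard) -> None:
        self.cards.append(card)

    def find(self, skill: str) -> AgentCard:
        # Discovery: return the first agent that advertises the requested skill.
        for card in self.cards:
            if skill in card.skills:
                return card
        raise LookupError(f"no agent advertises skill {skill!r}")


def run_news_cycle(registry: Registry, topic: str) -> str:
    """Act as the news director: route the story through each role in order."""
    draft = topic
    for skill in ["report", "research", "archive", "edit", "publish"]:
        agent = registry.find(skill)
        draft = agent.handle(draft)  # each hop stands in for one A2A message exchange
    return draft


registry = Registry()
registry.register(AgentCard("reporter", ["report"], lambda t: f"draft({t})"))
registry.register(AgentCard("researcher", ["research"], lambda t: f"verified({t})"))
registry.register(AgentCard("archive", ["archive"], lambda t: f"context({t})"))
registry.register(AgentCard("editor", ["edit"], lambda t: f"edited({t})"))
registry.register(AgentCard("publisher", ["publish"], lambda t: f"published({t})"))

print(run_news_cycle(registry, "city budget"))
# → published(edited(context(verified(draft(city budget)))))
```

Because agents are found by skill rather than hard-coded, swapping in a different editor only means registering a new card.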

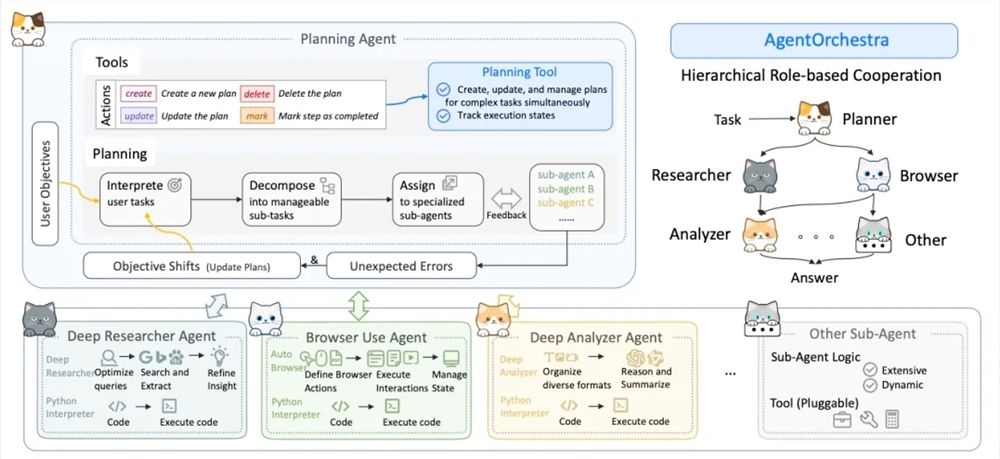

Four Pillars of Effective Collaboration

The protocol stands on these core principles:

- Smart Messaging: Structured Task & Artifact objects that support real-time streaming updates

- Self-Coordination: Automatic agent discovery and dynamic dependency management

- Deep Specialization: Each agent masters one domain while scaling horizontally

- Transparent Workflow: Distributed state broadcasting eliminates central bottlenecks
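The "Smart Messaging" pillar is easiest to see as a task lifecycle. The sketch below is a simplified assumption, not the protocol's actual schema: the state names (`submitted`, `working`, `completed`) and the `status-update`/`artifact-update` event kinds are modeled on the A2A specification, while `Task`, `Artifact`, and `run_task` are hypothetical. A Python generator plays the role of the streaming channel, so a client sees progress and partial artifacts before the task finishes.

```python
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Artifact:
    """An output the agent produces, such as one paragraph of an article (simplified)."""
    name: str
    text: str


@dataclass
class Task:
    """Simplified A2A-style task: an id, a lifecycle state, and collected artifacts."""
    task_id: str
    state: str = "submitted"
    artifacts: list[Artifact] = field(default_factory=list)


def run_task(task: Task, paragraphs: list[str]) -> Iterator[dict]:
    """Yield status and artifact events as work progresses, as a streaming client would see them."""
    task.state = "working"
    yield {"taskId": task.task_id, "kind": "status-update", "state": task.state}
    for i, para in enumerate(paragraphs):
        artifact = Artifact(name=f"paragraph-{i}", text=para)
        task.artifacts.append(artifact)
        yield {"taskId": task.task_id, "kind": "artifact-update", "artifact": artifact.name}
    task.state = "completed"
    yield {"taskId": task.task_id, "kind": "status-update", "state": task.state, "final": True}


for event in run_task(Task("t-1"), ["Lede.", "Body.", "Kicker."]):
    print(event)
```

An editor agent consuming this stream could start reviewing the lede while the reporter is still producing the rest of the story.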

"It's like watching a well-rehearsed orchestra," explains Elastic's CTO Michelle Zhou. "Each musician knows their part, but they're constantly listening and adapting to others."

Playing Nice With Existing Systems

The protocol complements rather than replaces current standards:

- For individual agent capabilities: Use MCP (Model Context Protocol)

- For team coordination: Bring in A2A

The combination creates powerful hybrid architectures: imagine a reporter agent using MCP to access image databases while submitting stories via A2A to editing bots.
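The reporter example can be sketched as two separate functions to show where each protocol sits. Everything here is hypothetical: `TOOLS` is a plain dictionary standing in for tools an MCP server would expose (no MCP SDK is used), and `to_a2a_message` reuses an assumed `message/send` JSON-RPC shape from the A2A specification. The point is the boundary: tool calls stay inside one agent, while the finished story crosses to another agent as a message.

```python
import json
import uuid
from typing import Callable

# Hypothetical tool table standing in for tools an MCP server would expose to one agent.
TOOLS: dict[str, Callable[[str], list[str]]] = {
    "search_images": lambda query: [f"{query.replace(' ', '_')}_{n}.jpg" for n in range(2)],
}


def reporter_write_story(topic: str) -> dict:
    """Reporter uses a tool for its own capability (the MCP side of the split)."""
    images = TOOLS["search_images"](topic)
    return {"headline": topic.title(), "images": images}


def to_a2a_message(story: dict, request_id: int) -> str:
    """Package the story as a JSON-RPC request to the editor agent (the A2A side)."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "message/send",  # assumed A2A method name
        "params": {
            "message": {
                "role": "user",  # assumption: the sending agent acts as the client here
                "messageId": str(uuid.uuid4()),
                "parts": [{"kind": "data", "data": story}],  # structured payload, not free text
            }
        },
    })


story = reporter_write_story("harbor festival")
print(to_a2a_message(story, 42))
```

Keeping the two layers apart means the editor never needs access to the image database; it only sees the story the reporter chose to send.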

Already Making Waves

The tech community is embracing A2A rapidly:

- Integrated into Google ADK within weeks of announcement

- Native support added to LangGraph and CrewAI platforms

- Developer sandbox available via Docker Compose

The "AI newsroom" demo lets anyone experience automated journalism firsthand, from topic selection to published article in under 15 minutes.

Key Points:

- 🎼 A2A enables multi-agent collaboration without shared memory requirements

- 📰 Open-source demo shows six specialized agents completing news production cycles

- 🔌 Plays well with existing protocols like MCP for comprehensive solutions

- 🚀 Already adopted by major platforms including Google ADK