Patronus AI Unveils Percival: Rapid Fault Detection for AI Agents

As enterprises rely more heavily on autonomous AI systems, monitoring these complex agent networks becomes harder. San Francisco-based Patronus AI has responded with Percival, a monitoring platform that automatically identifies fault patterns in AI agent chains and suggests repairs, all in about a minute.

"Percival represents the first intelligent agent capable of tracking agent trajectories, pinpointing complex faults, and systematically generating repair recommendations," explained Anand Kannappan, CEO and co-founder of Patronus AI, during an exclusive interview.

Addressing the 'Black Box' Problem in AI Agents

Unlike traditional machine learning models, AI agents execute multi-stage processes autonomously. That same capability makes them hard to debug: a minor early-stage error can snowball into major deviations across hundreds of subsequent steps. Multi-agent collaboration compounds the problem.

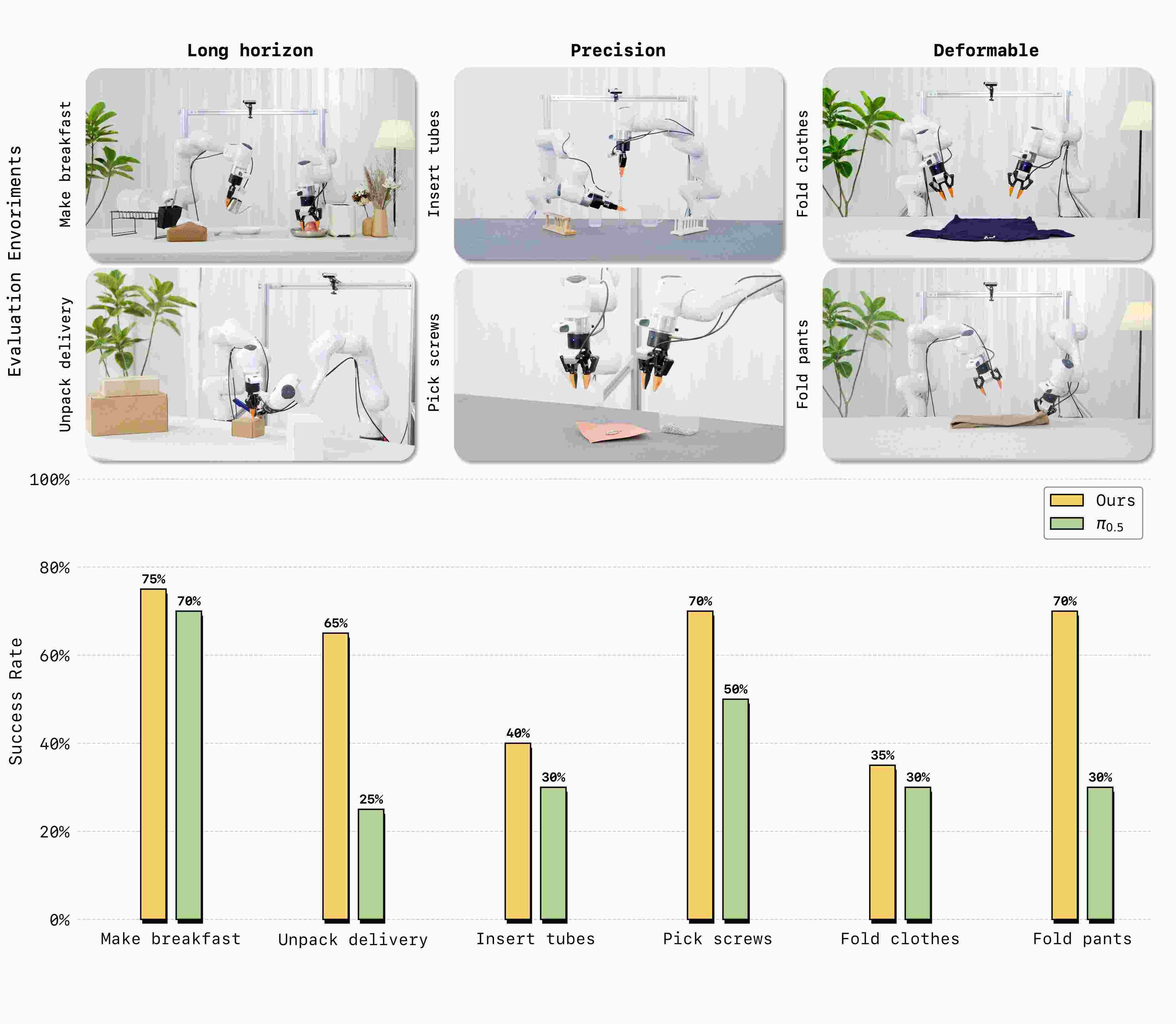

Percival tackles this by detecting over 20 common faults across four categories:

- Reasoning errors

- Execution failures

- Planning misalignments

- Domain-specific issues

The system actively monitors entire agent trajectories with contextual memory, understanding how errors propagate through specific workflows.
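The idea of following a fault through a trajectory can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Percival's API or method: the `FaultCategory` enum mirrors the four categories listed above, while `Step`, `first_fault`, `downstream_steps`, and the sample trajectory are hypothetical names and data invented for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FaultCategory(Enum):
    # The four categories named in the article; labels are illustrative.
    REASONING = "reasoning error"
    EXECUTION = "execution failure"
    PLANNING = "planning misalignment"
    DOMAIN = "domain-specific issue"


@dataclass
class Step:
    # Hypothetical record of one step in an agent trajectory.
    index: int
    description: str
    fault: Optional[FaultCategory] = None  # None means no fault was flagged


def first_fault(trajectory: list[Step]) -> Optional[Step]:
    """Return the earliest flagged step, a candidate root of later deviations."""
    for step in trajectory:
        if step.fault is not None:
            return step
    return None


def downstream_steps(trajectory: list[Step], root: Step) -> list[Step]:
    """Return the steps that ran after the root fault and may have inherited it."""
    return [s for s in trajectory if s.index > root.index]


# Made-up four-step trajectory with two flagged steps.
trajectory = [
    Step(0, "parse user request"),
    Step(1, "choose migration plan", FaultCategory.PLANNING),
    Step(2, "call schema tool"),
    Step(3, "write report", FaultCategory.EXECUTION),
]

root = first_fault(trajectory)
affected = downstream_steps(trajectory, root)
print(root.index, root.fault.value, [s.index for s in affected])
```

In this toy run the planning fault at step 1 is the earliest flagged step, so steps 2 and 3 are the ones worth re-examining first. A real monitor would also need to judge whether a later fault is independent or a consequence of the earlier one, which this sketch does not attempt.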

Image source note: Image generated by AI, licensed by Midjourney

Slashing Debugging Time from Hours to Minutes

Early adopters report dramatic efficiency gains. Where diagnosing complex agent processes once took about an hour, Percival delivers analysis in 1 to 1.5 minutes, a reduction of roughly 97% that significantly lightens engineering workloads.
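The reduction figure follows from simple arithmetic on the reported times. A minimal check, assuming a 60-minute baseline for "about an hour" (the helper name `percent_reduction` is ours, for illustration only):

```python
def percent_reduction(before_minutes: float, after_minutes: float) -> float:
    """Percentage of time saved when going from before_minutes to after_minutes."""
    return (before_minutes - after_minutes) / before_minutes * 100


# Slower end of the reported range: 60 minutes down to 1.5 minutes.
print(round(percent_reduction(60, 1.5), 1))  # 97.5
# Faster end of the reported range: 60 minutes down to 1 minute.
print(round(percent_reduction(60, 1.0), 1))  # 98.3
```

The quoted 97% therefore corresponds to the slower end of the 1 to 1.5 minute range.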

To benchmark performance, Patronus introduced the TRAIL benchmark (Trace Reasoning and Agentic Issue Localization). Results showed that even top-tier models score just 11% on this evaluation, underscoring the need for specialized monitoring tools.

Enterprise Adoption and Ecosystem Integration

Several industry players have already implemented Percival:

- Emergence AI uses it to ensure controllability in large-scale autonomous systems

- Nova employs the platform for SAP migration projects involving hundred-step agent chains

The technology integrates with major frameworks including Hugging Face smolagents, LangChain, Pydantic AI, and the OpenAI Agents SDK.

The Growing Imperative for AI Oversight

With enterprises generating billions of lines of AI-written code daily, Kannappan observes: "Systems grow more autonomous while human supervision struggles to keep pace." As agent complexity escalates, solutions like Percival may become essential safety nets rather than optional upgrades.

Key Points

- Percival reduces fault diagnosis time in AI agents from ~60 minutes to 1-1.5 minutes

- Identifies 20+ error types across reasoning, execution, planning and domain-specific categories

- Features contextual memory to track error evolution through multi-step processes

- Integrated with major development frameworks including LangChain and the OpenAI Agents SDK

- TRAIL benchmark shows even top-tier models score just 11% on fault detection