Robots Get Smarter: Antlingbot's New AI Helps Machines Think Like Humans

Robots Learn to Think Before They Act

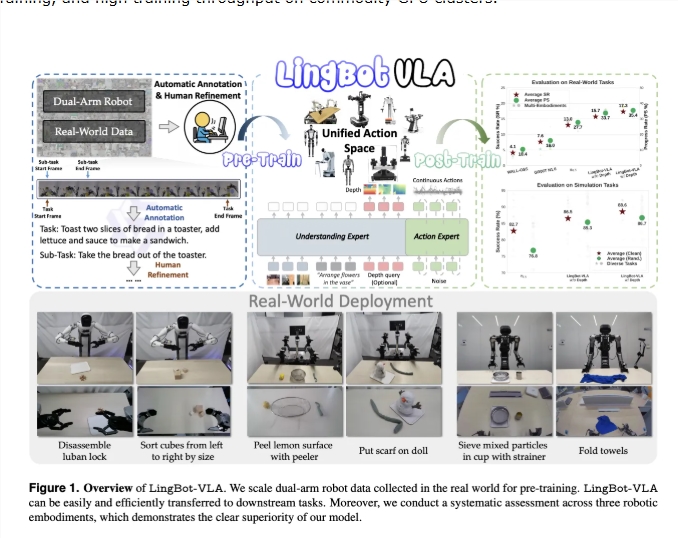

Imagine a robot that doesn't just follow pre-programmed instructions but thinks through the consequences of its actions, much as a human would. That's the promise of LingBot-VA, the latest innovation from Antlingbot Technology, now available as open-source software.

How It Works

The system uses what its developers call an "autoregressive video-action world modeling framework." In plain terms, the model predicts the future one step at a time, with each prediction building on the last, which lets a robot visualize the outcome of a move before making it. By combining a large-scale video generation model with robot control, LingBot-VA simulates possible actions together with their likely consequences.

Caption: Robots using LingBot-VA demonstrate superior performance in delicate tasks compared to previous systems

Putting It to the Test

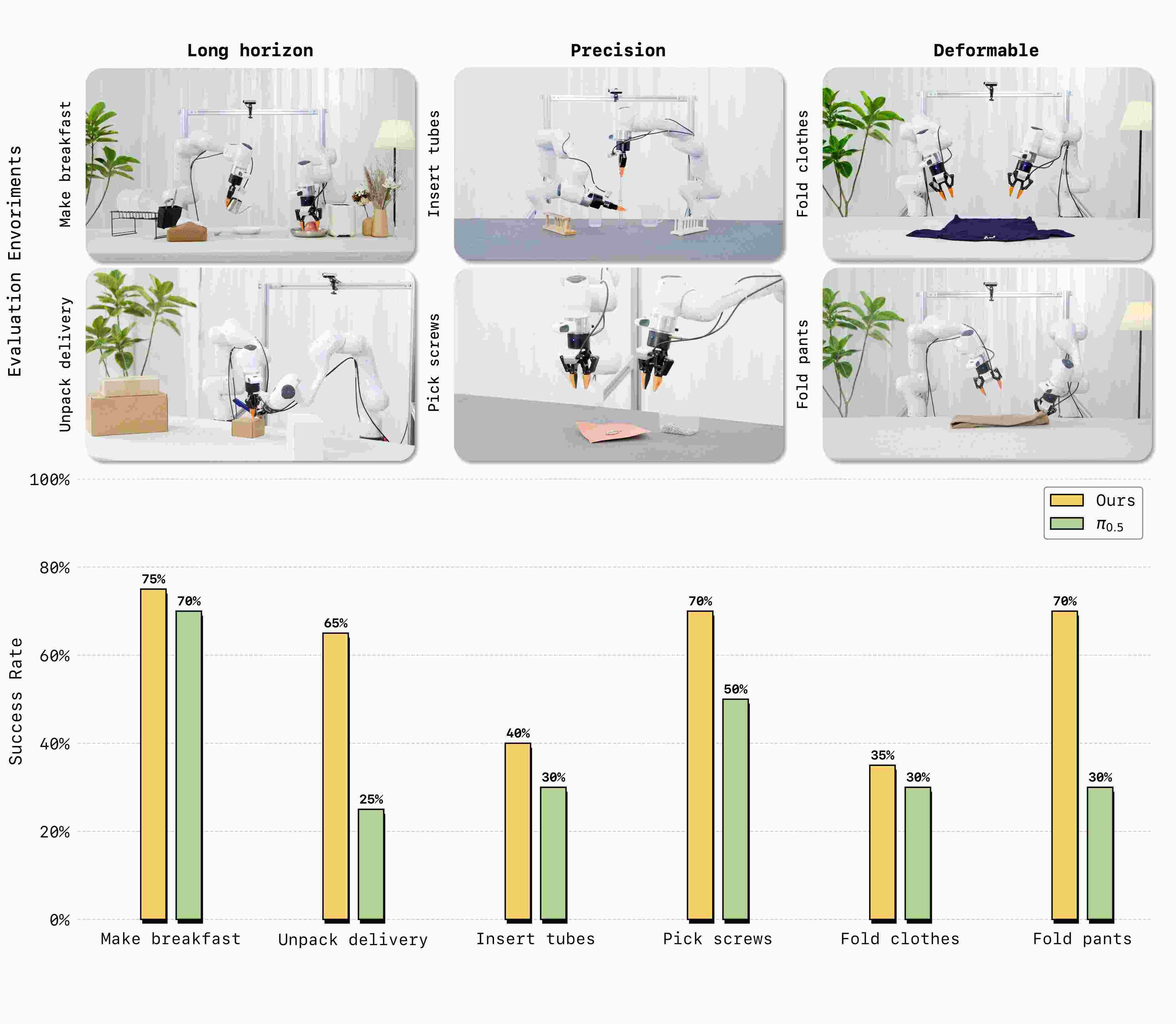

The real proof comes from practical demonstrations. Across six challenging scenarios, from making breakfast to folding delicate clothing, robots equipped with LingBot-VA needed just 30 to 50 real-world examples to adapt. Their average success rate was 20% higher than that of current industry-standard systems.

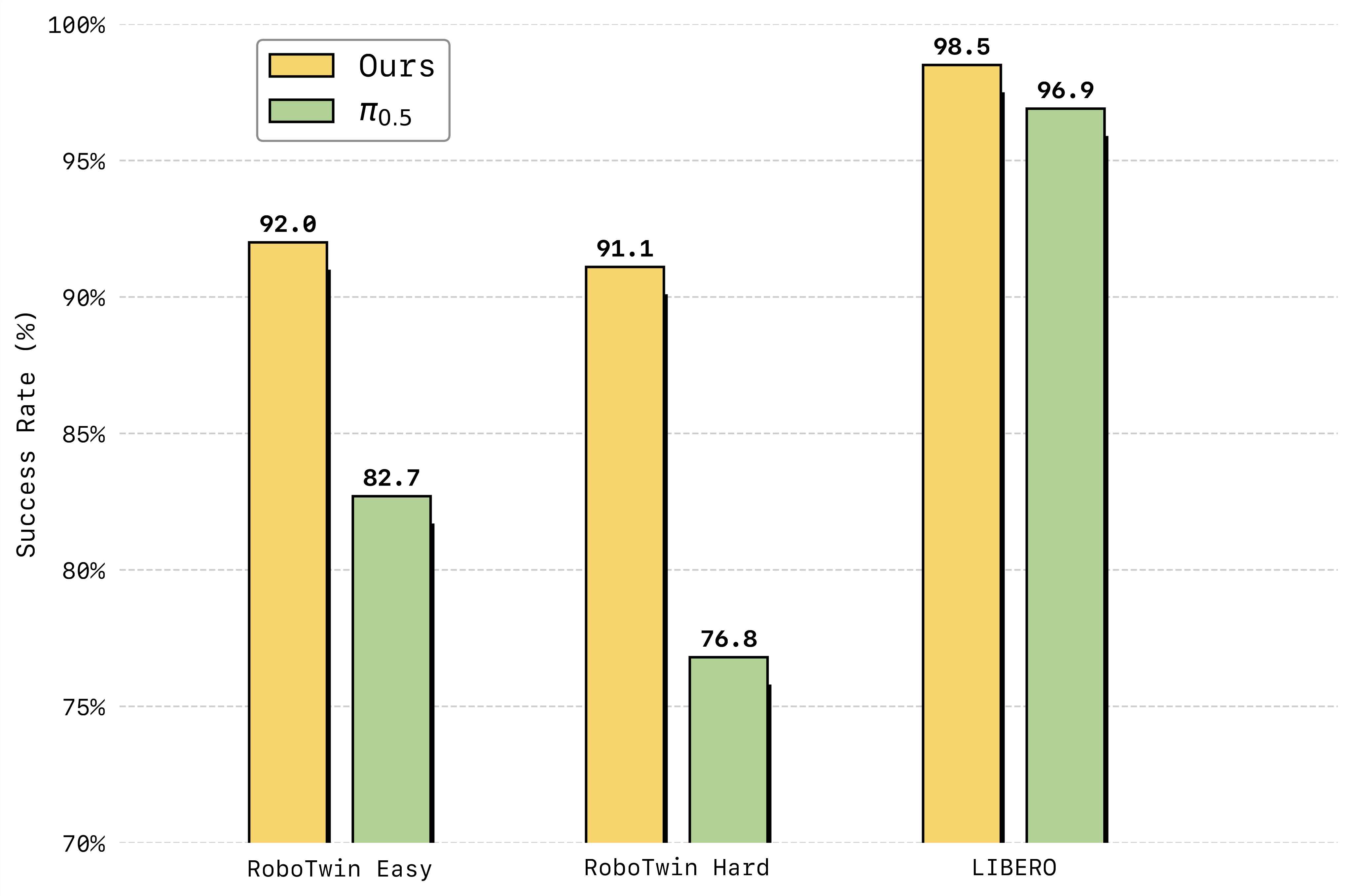

In simulated environments, the results were even more impressive:

- 98.5% success rate on long-horizon task benchmarks

- Over 90% success rate on complex dual-arm manipulation tasks

Caption: Simulation tests show LingBot-VA setting new performance records

Under the Hood

The secret sauce lies in LingBot-VA's Mixture-of-Transformers architecture, which blends video processing and action control in a single model. A closed-loop design feeds real-world observations back into the model at each step, keeping its simulations grounded in physical reality.
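For readers who want a concrete picture, here is a toy Python sketch of the general idea of imagining outcomes before acting and then correcting with real-world feedback. It is not LingBot-VA's code: the 1-D "world", the `world_model` and `real_world` functions, the 80% slip factor, and the greedy planner are all hypothetical stand-ins. The planner rolls its model forward one step at a time (the autoregressive part), executes only the first planned action, then re-plans from what it actually observes (the closed-loop part).

```python
# Toy illustration of "imagine before acting" with closed-loop feedback.
# Hypothetical throughout: a 1-D position stands in for video frames, and a
# one-line function stands in for a large learned world model.

CANDIDATE_ACTIONS = [-1.0, -0.5, 0.0, 0.5, 1.0]
HORIZON = 3  # number of steps the planner imagines ahead


def world_model(state: float, action: float) -> float:
    """Predicted next state: the model assumes the robot moves exactly `action`."""
    return state + action


def real_world(state: float, action: float) -> float:
    """Actual next state: the robot slips and covers only 80% of each move."""
    return state + 0.8 * action


def plan(state: float, goal: float, horizon: int = HORIZON) -> list[float]:
    """Imagine an action sequence by rolling the world model forward step by step."""
    actions = []
    imagined = state
    for _ in range(horizon):
        # Choose the action whose predicted outcome lands closest to the goal.
        best = min(CANDIDATE_ACTIONS, key=lambda a: abs(goal - world_model(imagined, a)))
        actions.append(best)
        imagined = world_model(imagined, best)  # each prediction feeds the next one
    return actions


def run_open_loop(start: float, goal: float) -> float:
    """Execute the whole imagined plan without ever checking the real world."""
    state = start
    for action in plan(start, goal):
        state = real_world(state, action)
    return state


def run_closed_loop(start: float, goal: float, steps: int) -> float:
    """Execute one action, observe the real result, then re-plan from there."""
    state = start
    for _ in range(steps):
        action = plan(state, goal)[0]
        state = real_world(state, action)  # the real observation replaces the prediction
    return state


print(run_open_loop(0.0, 2.0))       # stops short: slip errors accumulate unseen
print(run_closed_loop(0.0, 2.0, 6))  # re-planning from real observations reaches the goal
```

Because the imagined model ignores slip, the open-loop run stops short of the goal, while the closed-loop run recovers by re-planning from each real observation.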

To keep computing demands manageable, the engineers developed:

- Asynchronous inference pipelines for smoother operation

- Memory caching systems for faster response times

The result? Robots that combine deep understanding with lightning-fast reactions.
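The two efficiency ideas above can be illustrated with a similarly hypothetical asyncio sketch: the model starts computing the next action while the robot is still carrying out the current one, and a cache (in transformer models this is typically a key-value cache) means only the newest frame needs encoding at each step. `CachedModel`, `execute`, the sleep durations, and the placeholder arithmetic are invented for illustration and do not reflect LingBot-VA's actual pipeline.

```python
import asyncio


class CachedModel:
    """Hypothetical model that caches its work on past frames instead of redoing it."""

    def __init__(self) -> None:
        self.cache: list[float] = []  # encodings of every frame seen so far
        self.encode_calls = 0         # counts how much encoding work was done

    def _encode(self, frame: float) -> float:
        self.encode_calls += 1
        return frame * 2.0            # placeholder for an expensive encoder

    async def next_action(self, frame: float) -> float:
        # Only the newest frame is encoded; older encodings are reused from the cache.
        self.cache.append(self._encode(frame))
        await asyncio.sleep(0.01)     # simulated inference time
        return sum(self.cache) / len(self.cache)  # placeholder "action"


async def execute(action: float) -> None:
    await asyncio.sleep(0.01)         # simulated time for the robot to carry out the action


async def control_loop(frames: list[float]) -> tuple[list[float], int]:
    model = CachedModel()
    actions: list[float] = []
    pending = asyncio.create_task(model.next_action(frames[0]))
    for frame in frames[1:]:
        action = await pending
        actions.append(action)
        # Start computing the next action while the current one is being executed.
        pending = asyncio.create_task(model.next_action(frame))
        await execute(action)
    final = await pending
    actions.append(final)
    await execute(final)
    return actions, model.encode_calls


actions, calls = asyncio.run(control_loop([1.0, 2.0, 3.0]))
print(actions, calls)  # [2.0, 3.0, 4.0] 3
```

With three frames, the cache keeps the total at three encoding calls; re-encoding every past frame at each step would take six. The trade-off of overlapping thinking and acting is that each action is planned from an observation taken before the previous action has finished.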

What This Means for Robotics

This release marks another step forward in Antlingbot's mission to create more capable machines. The company's previous open-source projects focused on simulation environments and spatial awareness; with LingBot-VA, it is tackling higher-level cognition.

The company sees this as part of broader efforts to develop artificial general intelligence (AGI) that can handle real-world challenges. With all model weights and code freely available, researchers worldwide can build upon this foundation.

Key Points:

- Human-like decision making: Robots can now simulate outcomes before acting

- Proven performance: 20% higher average success rate than existing systems in real-world tests

- Open access: Model weights and code are freely available for public use

- Future applications: Could lead to more capable service and industrial robots