Yuchu's New AI Model Gives Robots Common Sense

Robots Get Smarter with New Open-Source AI Brain

Imagine a robot that doesn't just follow commands blindly but understands how objects behave in physical space: where to grip a cup so it doesn't slip, how much force to use when opening a door. That's what Yuchu's new UnifoLM-VLA-0 model aims to bring to humanoid robots.

From Screen Smarts to Street Smarts

The big leap here? This isn't another chatbot pretending to understand the world through text alone. UnifoLM-VLA-0 actually grasps physical reality:

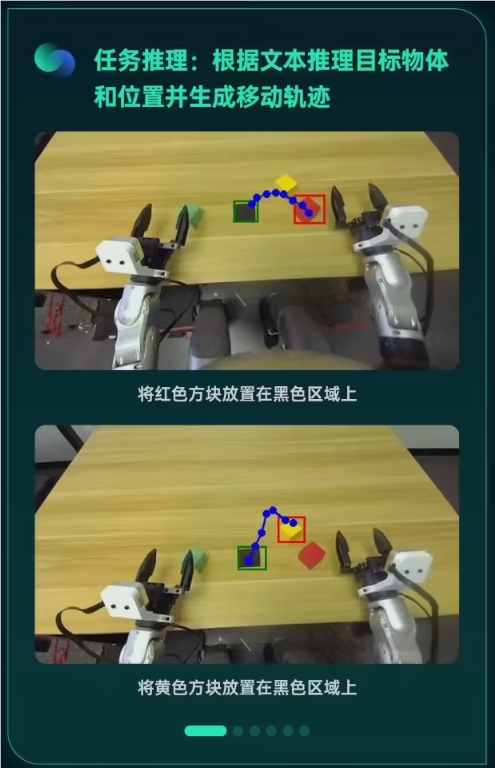

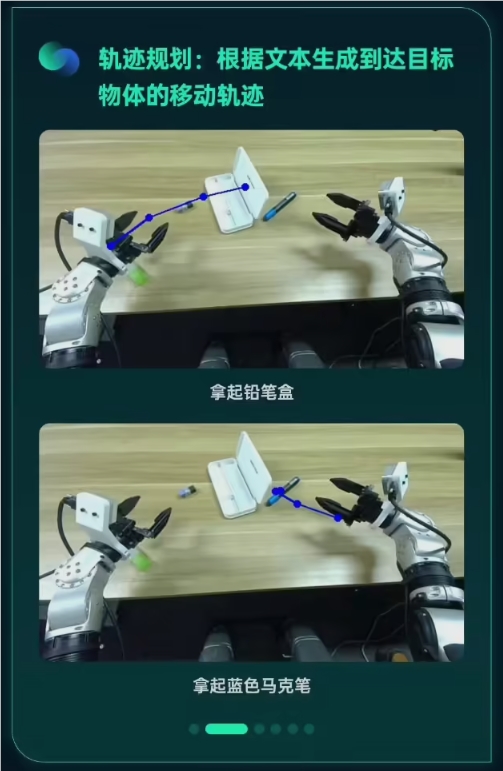

- Spatial intuition: It aligns text instructions with 3D environments, as humans do instinctively

- Action planning: Predicts sequences of movements while accounting for real-world physics

- Adaptability: Maintains stability even when bumped or interrupted mid-task

Built Smart, Not Hard

Yuchu didn't start from scratch. The team built on Alibaba's Qwen2.5-VL vision-language model and extended it for robot control:

- Trained on just 340 hours of real robot data, surprisingly little for such capabilities

- Significantly outperforms its base model on spatial-reasoning tests

- Nips at the heels of Google's Gemini-Robotics in certain scenarios

The secret sauce? A meticulously cleaned dataset focusing on physical interactions rather than abstract knowledge.

Real-World Robot Proof

The rubber meets the road on Yuchu's G1 humanoid platform, where UnifoLM-VLA-0 handles:

- Precise object manipulation (no more fumbling coffee cups!)

- Complex multi-step tasks without reprogramming

- Unexpected disturbances without catastrophic failures

Key Points:

- Open access: Full model now available on GitHub for developers worldwide

- Physical intelligence: Represents a shift from pure cognition to embodied understanding

- Commercial potential: Could accelerate practical applications for service robots

- Community benefit: Open-source approach invites global collaboration on robot brains