OpenAI Quietly Drops 'Safety First' Pledge From Its Mission

OpenAI's Evolving Priorities: Safety Takes a Backseat?

In a move that's raising eyebrows across the tech community, OpenAI has significantly revised its core mission statement, removing explicit commitments to AI safety and nonprofit ideals. The changes, spotted in recent tax filings, suggest a notable shift in the company's foundational principles.

What Changed?

The 2022-2023 documents clearly stated OpenAI would build "safe AI that benefits humanity without being restricted by financial returns." Fast forward to late 2025 filings, and two critical elements have disappeared:

- The word "safe" vanished entirely

- The profit-limiting clause evaporated

The pared-down version now simply pledges to "ensure general AI benefits all humanity," which leaves plenty of room for interpretation.

Behind the Scenes Turmoil

These paperwork changes reflect real-world shifts within the company. Sources confirm OpenAI recently dissolved its mission alignment team, the group responsible for keeping AI development ethically grounded. The shake-up wasn't smooth: a fired executive publicly accused the company of compromising standards to please certain user bases.

When asked about adult content features allegedly added against some team members' advice, OpenAI countered with discrimination allegations, creating messy PR fallout rather than addressing the core concerns.

From Idealists to Pragmatists?

The mission statement rewrite appears to formalize OpenAI's gradual transition from wide-eyed idealists to bottom-line-focused operators:

- 2015: Founded as a nonprofit research lab

- 2019: Created the capped-profit arm OpenAI LP

- 2023: Secured a $10B Microsoft investment

- 2025: Dropped safety and profit constraints from its charter

The company insists this doesn't mean abandoning safety entirely, but critics argue that when something disappears from your guiding principles, it inevitably falls down the priority list.

What Comes Next?

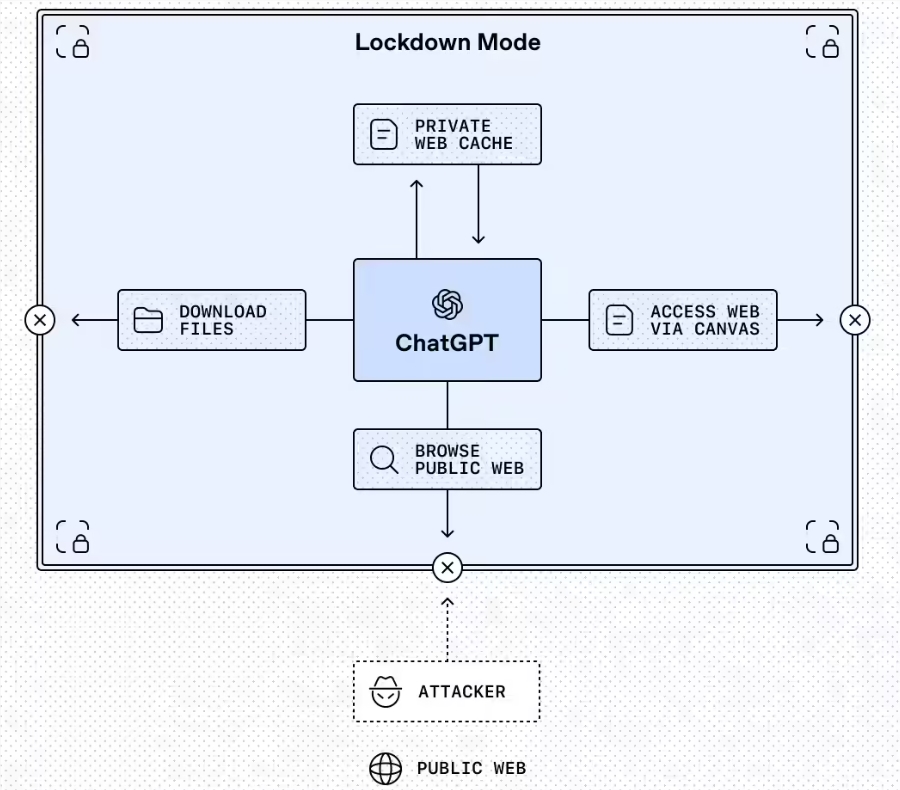

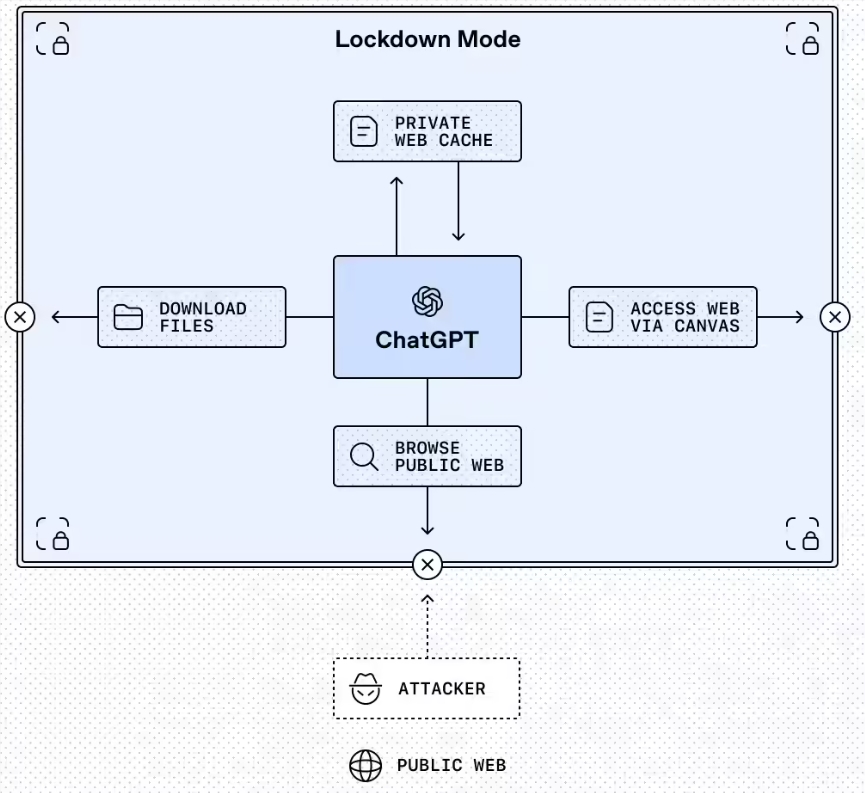

The changes coincide with plans to introduce ads into GPT products, triggering fresh privacy debates. When AI systems handle our most sensitive data while chasing advertising dollars, can ethical safeguards hold?

The parallels with Google's faded "Don't Be Evil" motto are hard to ignore. As OpenAI matures commercially, can it avoid following Big Tech's well-worn path from idealism to pragmatism? Or has that ship already sailed?

Key Points:

- Mission drift: Safety and nonprofit commitments removed from official documents

- Internal conflict: Mission alignment team disbanded amid policy disagreements

- Commercial push: Advertising plans raise privacy concerns

- Historical echoes: Similarities noted with Google's abandoned ideals