OpenAI Bolsters ChatGPT Security Against Sneaky Prompt Attacks

OpenAI Tightens ChatGPT's Security Belt

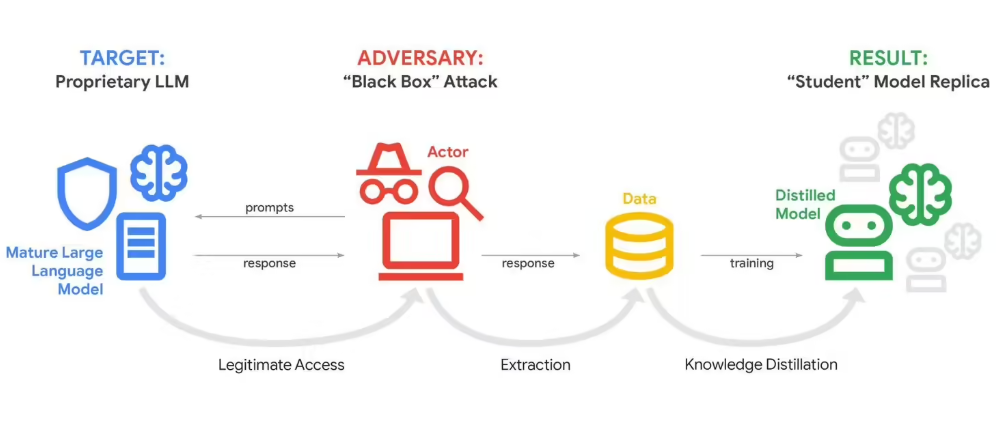

In response to rising concerns about AI vulnerabilities, OpenAI has introduced two significant security upgrades for ChatGPT. Both target prompt injection attacks, in which instructions planted by a third party (for example, hidden in a web page or document the model reads) trick the AI into performing unauthorized actions or revealing sensitive data.

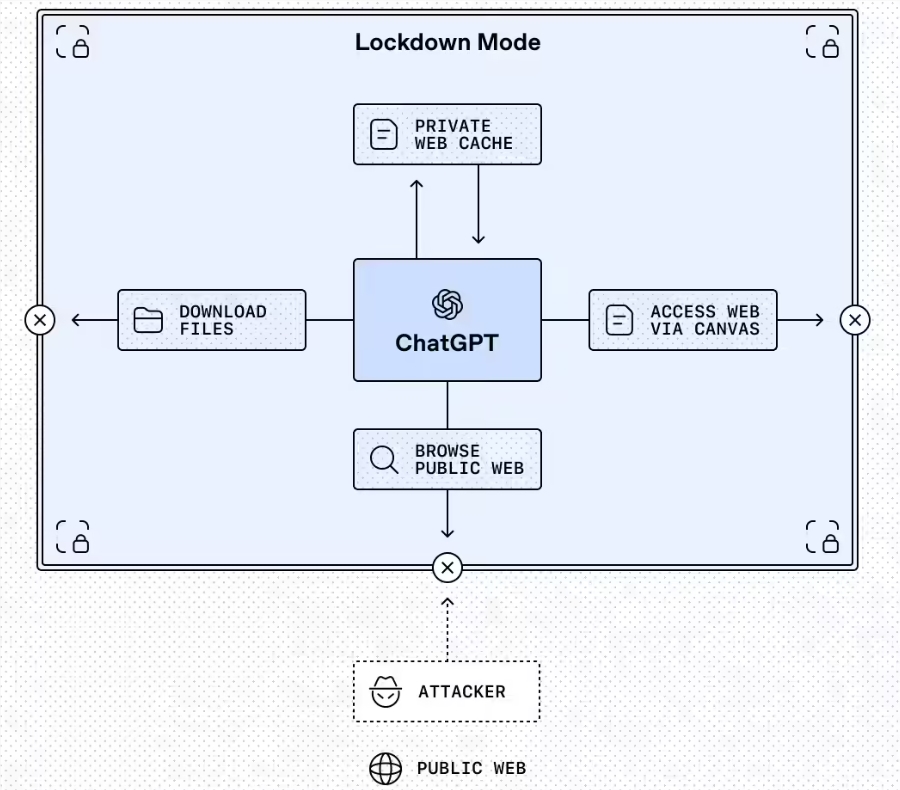

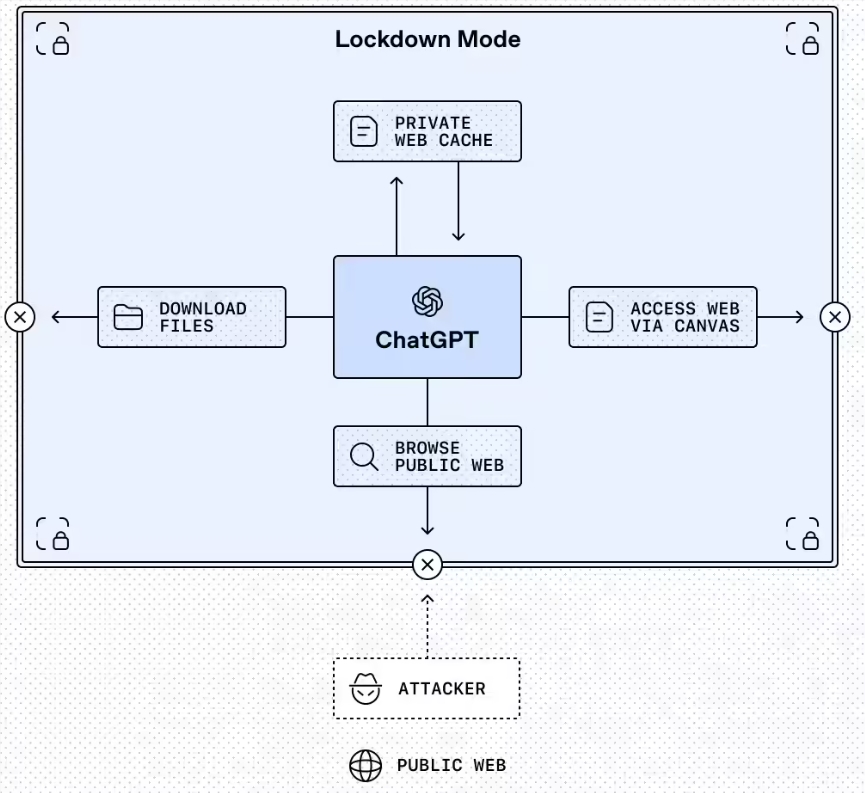

Lockdown Mode: Fort Knox for ChatGPT

The standout feature is Lockdown Mode, an optional setting currently available for enterprise, education, healthcare, and teacher versions. Think of it as putting ChatGPT in a protective bubble:

- Web browsing gets restricted to cached content only

- Features without robust security guarantees get automatically disabled

- Administrators gain granular control over permitted external applications

Lockdown Mode operates deterministically: it applies fixed, predictable rules about what to block rather than relying on probabilistic filtering. "We're giving organizations tools to make informed security trade-offs," explained an OpenAI spokesperson.
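To make the difference between deterministic rules and probabilistic filtering concrete, here is a minimal Python sketch of a rule-based policy check. It is purely illustrative and is not OpenAI's implementation: `ToolRequest`, `LockdownPolicy`, the tool names, and the specific rules are all hypothetical, loosely modeled on the three restrictions listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolRequest:
    tool: str           # hypothetical tool identifier, e.g. "browser" or an app name
    live_network: bool  # True if the request would fetch fresh content from the internet


@dataclass(frozen=True)
class LockdownPolicy:
    allowed_apps: frozenset[str]    # hypothetical admin-managed allowlist of external apps
    disabled_tools: frozenset[str]  # hypothetical tools lacking strong security guarantees

    def allows(self, req: ToolRequest) -> bool:
        """Apply fixed rules only: the same request always gets the same answer."""
        if req.tool in self.disabled_tools:
            return False
        if req.tool == "browser":
            # Browsing is permitted only when served from cached content.
            return not req.live_network
        # Any other external app must be explicitly allowlisted by an admin.
        return req.tool in self.allowed_apps


policy = LockdownPolicy(
    allowed_apps=frozenset({"calendar"}),
    disabled_tools=frozenset({"code_agent_network"}),
)
print(policy.allows(ToolRequest("browser", live_network=True)))   # False
print(policy.allows(ToolRequest("browser", live_network=False)))  # True
print(policy.allows(ToolRequest("calendar", live_network=True)))  # True
print(policy.allows(ToolRequest("crm", live_network=True)))       # False
```

Because each decision depends only on fixed rules and the request's own fields, no wording in an injected prompt can change the outcome. A probabilistic filter, by contrast, scores each request and could let a carefully crafted injection through if it happened to fall below the blocking threshold.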

Although Lockdown Mode is currently aimed at high-security environments, OpenAI plans to bring it to consumer versions within the coming months.

Seeing Red: The 'Elevated Risk' Label System

The second major change introduces standardized warning labels across ChatGPT and related products like Codex. Functions carrying higher risks now sport bright "Elevated Risk" tags accompanied by:

- Clear explanations of potential dangers

- Suggested mitigation strategies

- Appropriate use case guidance

The labeling mainly affects network-related capabilities: features that make ChatGPT more useful but still carry unresolved security questions.

Why This Matters Now

The updates arrive as businesses increasingly integrate AI into sensitive workflows. Recent incidents have shown how seemingly innocent prompts can bypass safeguards when AI systems interact with external tools.

OpenAI's approach stands out by:

- Providing actual technical constraints (Lockdown Mode)

- Improving transparency (risk labeling)

- Maintaining flexibility (optional implementations)

The Compliance API Logs Platform complements these features by enabling detailed usage audits, a capability that is crucial for regulated industries.

Key Points:

- Lockdown Mode restricts risky external interactions deterministically

- "Elevated Risk" labels standardize warnings across OpenAI products

- Both measures build on existing sandbox and URL protection systems

- Enterprise-tier versions get first access, with a consumer rollout planned