OpenAI Bolsters ChatGPT Security with New Safeguards Against Hacking Attempts

OpenAI Tightens ChatGPT's Digital Defenses

In response to growing cybersecurity threats targeting AI systems, OpenAI has implemented two robust security features for ChatGPT. These updates specifically address prompt injection vulnerabilities - a type of attack where malicious actors trick the AI into performing unauthorized actions or revealing sensitive information.

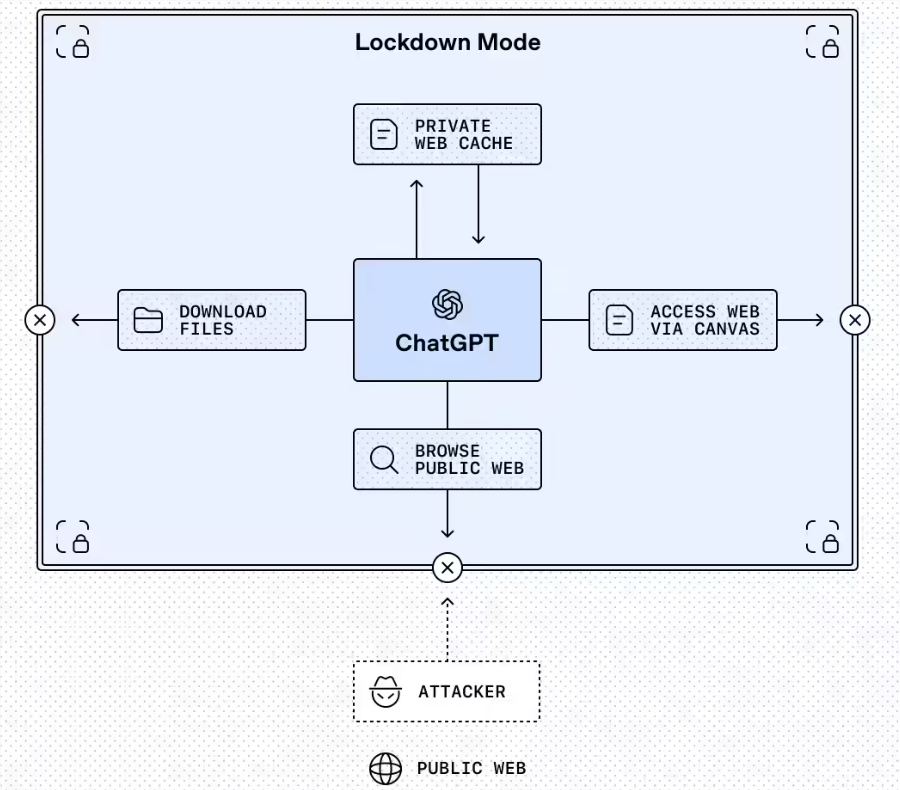

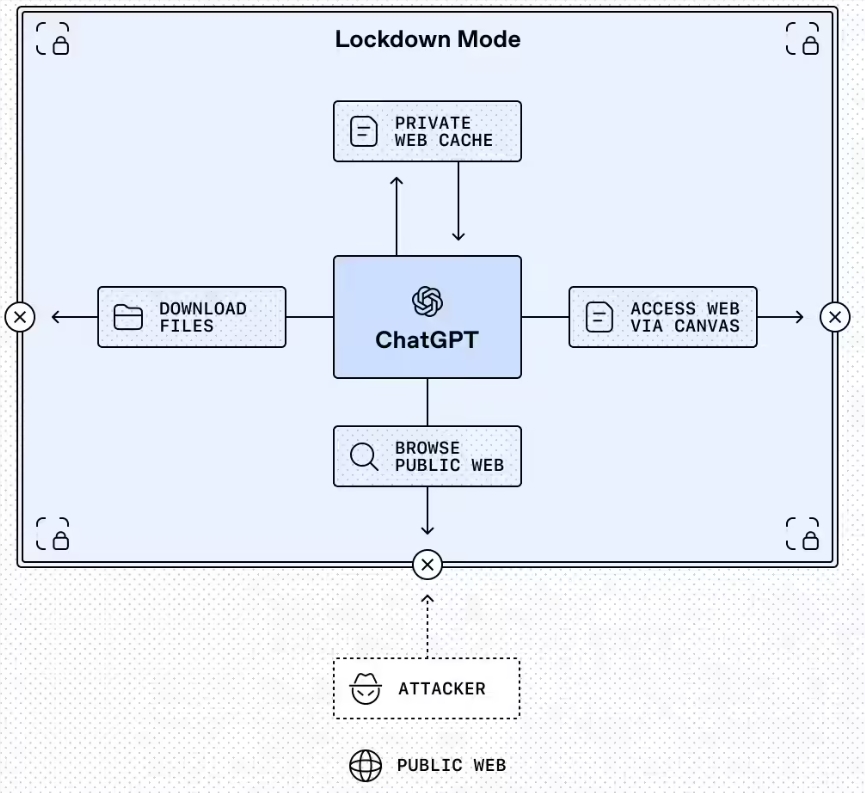

Lockdown Mode: A Digital Fort Knox

The first enhancement introduces Lockdown Mode, an optional setting designed for organizations handling sensitive data. Think of it as putting ChatGPT in a protective bubble - it significantly restricts how the AI interacts with external systems to minimize potential data leaks.

When activated, this mode:

- Limits web browsing to cached content only

- Automatically disables features lacking strong security guarantees

- Allows administrators to precisely control which external applications can interact with ChatGPT

Currently available for Enterprise, Education, Healthcare, and Teacher versions, Lockdown Mode will soon extend to consumer accounts. OpenAI has also developed a Compliance API Logs Platform to help organizations track and audit how their teams use these features.

Clear Warning Labels for Risky Features

The second update introduces standardized "Elevated Risk" labels across ChatGPT, ChatGPT Atlas, and Codex. These warnings appear whenever users enable functions that carry higher security risks, particularly those involving network access.

"Some capabilities that make AI more useful also introduce risks the industry hasn't fully solved," explained an OpenAI spokesperson. "These labels help users make informed decisions about when to use certain features."

The warnings don't just flag potential dangers - they also provide:

- Clear explanations of what changes when activating a feature

- Specific risk scenarios to watch for

- Practical suggestions for mitigating vulnerabilities

- Guidance on appropriate use cases

Why These Updates Matter Now

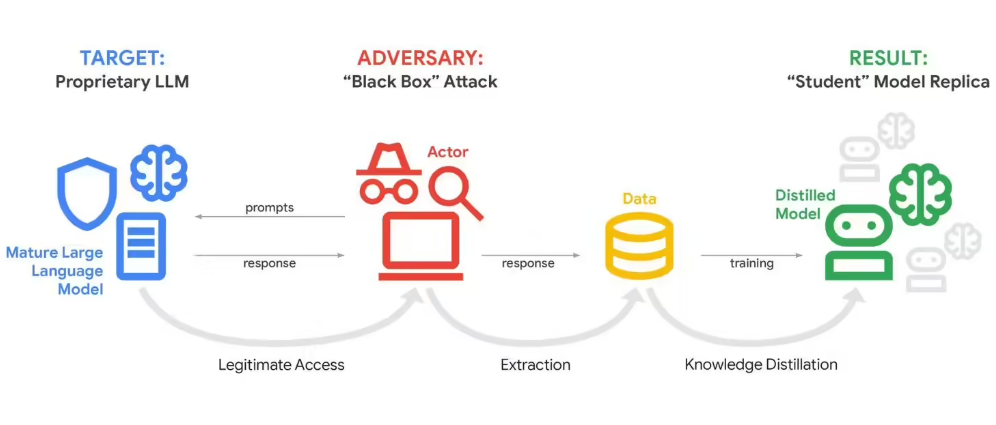

As AI systems increasingly connect to other software and websites, they become potential gateways for cyberattacks. Prompt injection attacks have emerged as a particularly sneaky threat - imagine someone slipping hidden instructions into a webpage that trick ChatGPT into revealing confidential data or performing unauthorized actions.

These new protections build upon OpenAI's existing security measures like sandboxing and URL data leakage prevention. They represent a shift toward giving users more transparency and control over their AI interactions.

The rollout comes as businesses increasingly adopt AI tools while grappling with how to balance functionality with data protection requirements. For organizations in regulated industries like healthcare or finance, these updates could make the difference between embracing AI innovation and keeping it at arm's length.

Key Points:

- OpenAI adds Lockdown Mode for high-security ChatGPT use cases

- New "Elevated Risk" labels warn users about potentially vulnerable functions

- Updates target prompt injection attacks that manipulate AI behavior

- Changes build on existing protections while increasing user control

- Enterprise versions get first access, with consumer rollout coming soon