OpenAI Bolsters ChatGPT Security Against Sneaky Prompt Attacks

OpenAI Tightens ChatGPT's Digital Seatbelt

ChatGPT just got smarter about spotting digital pickpockets. OpenAI announced significant security upgrades designed to thwart prompt injection attacks - clever manipulations that can trick AI assistants into revealing secrets or performing unwanted actions.

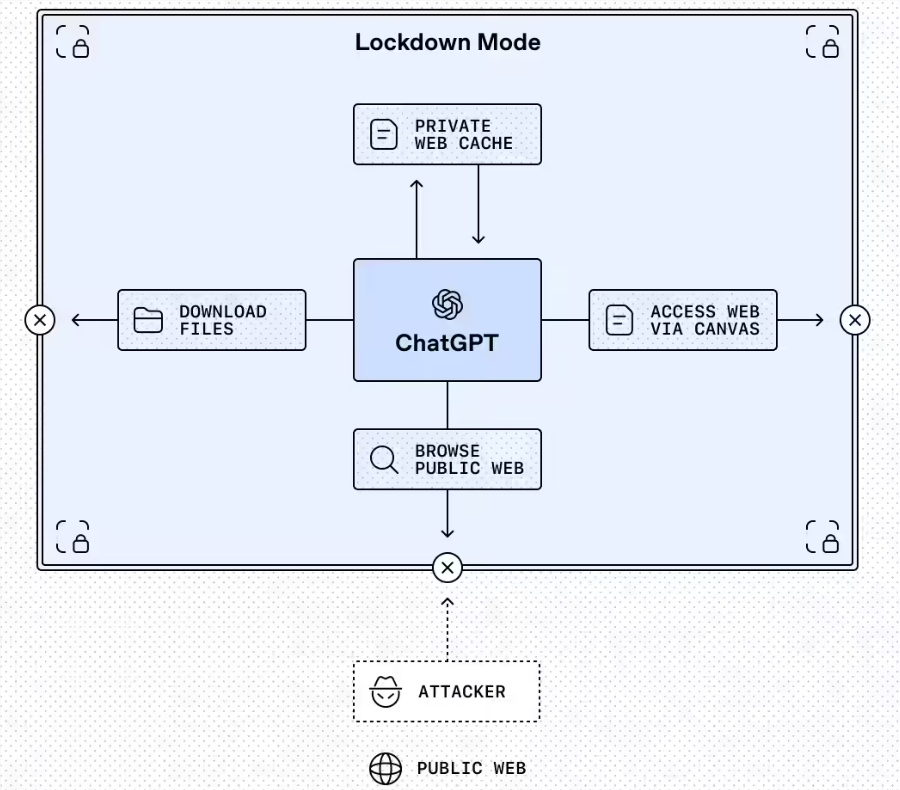

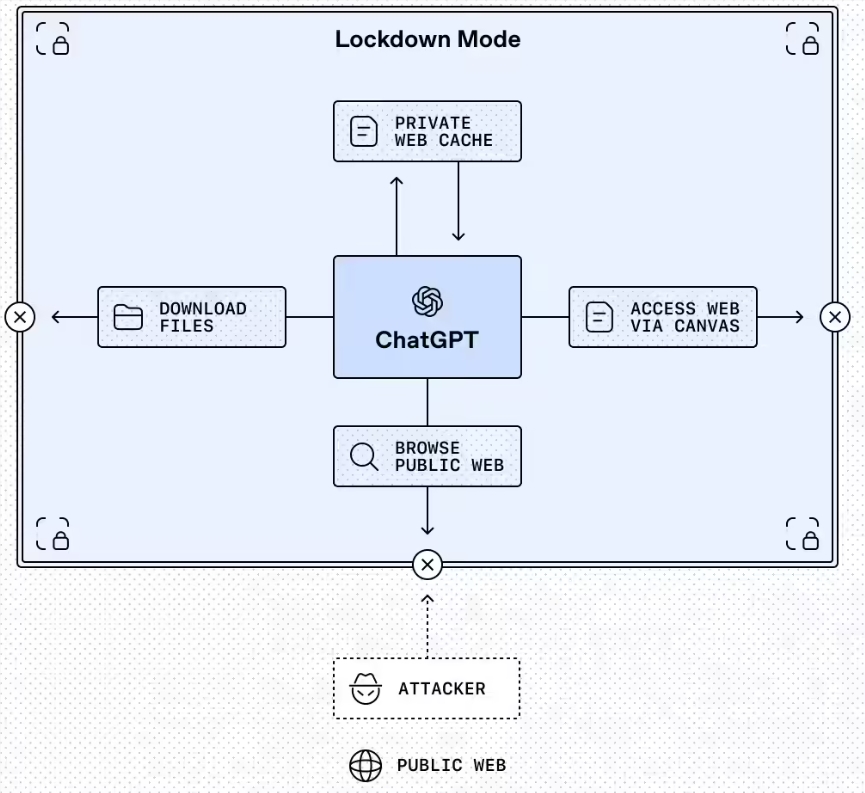

Lockdown Mode: Digital Fort Knox

The standout feature is Lockdown Mode, essentially turning ChatGPT into a digital fortress when handling sensitive information. Picture this: web browsing gets restricted to cached content only, and any feature without ironclad security guarantees gets temporarily benched.

"We're giving organizations dealing with healthcare records, student data, or corporate secrets an extra layer of protection," explained an OpenAI spokesperson. Enterprise and education users can now activate this mode through administrator dashboards, with consumer access coming soon.

Seeing Red Flags Clearly

The second upgrade introduces standardized "Elevated Risk" warning labels - think of them as bright orange cones around digital construction zones. These tags appear whenever developers enable higher-risk functions like unrestricted web access.

"Transparency matters," noted the spokesperson. "If Codex needs to fetch live web data during programming tasks, we want developers making informed choices about when that risk makes sense."

Why This Matters Now

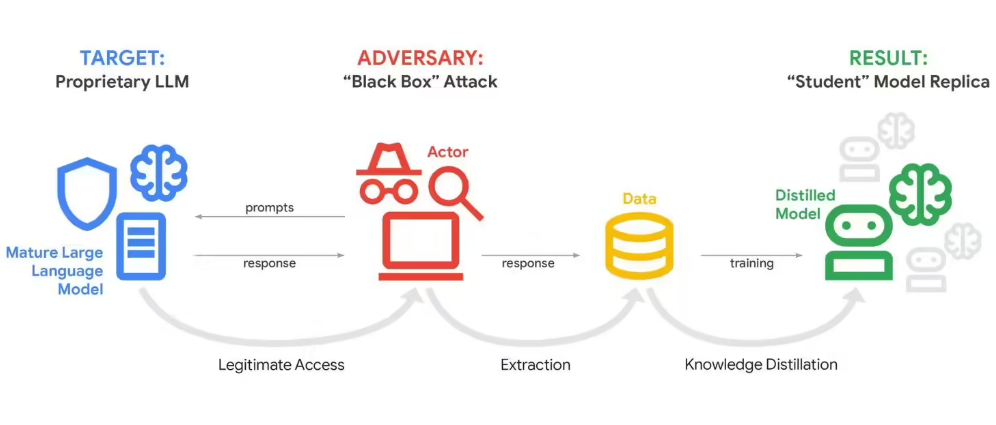

As AI assistants increasingly connect to other apps and websites, they inherit new vulnerabilities. Hackers have gotten creative with prompt injections - hiding malicious instructions in seemingly normal requests that bypass traditional security checks.

The upgrades complement existing safeguards like sandboxing and URL filtering. Compliance tools also help organizations track how protected modes get used - crucial for regulated industries facing audit requirements.

Key Points:

- Lockdown Mode restricts external interactions for sensitive ChatGPT deployments

- Warning labels now clearly mark higher-risk functions

- Protections currently focus on enterprise and education users

- Consumer version expected within months

- Builds on existing sandbox and filtering systems