OpenAI Bolsters ChatGPT Security Against Sneaky Prompt Attacks

OpenAI Tightens ChatGPT's Defenses Against Manipulation

ChatGPT just got tougher against digital tricksters. OpenAI announced significant security upgrades designed to thwart prompt injection attacks - a growing concern as AI systems integrate more deeply with websites and external apps.

Locking Down Sensitive Interactions

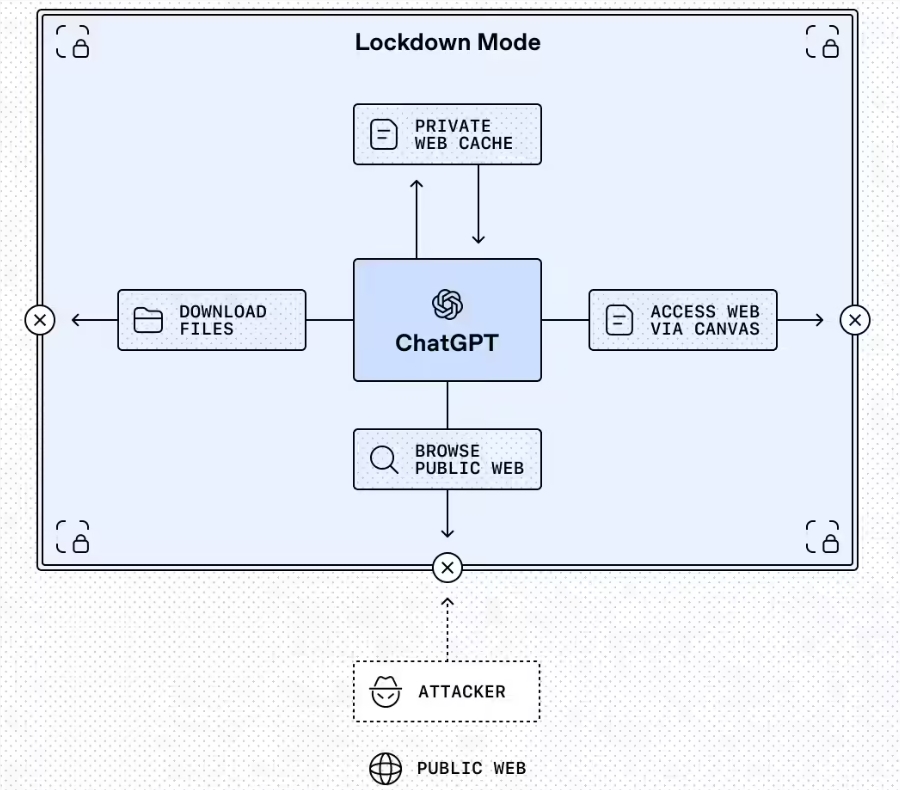

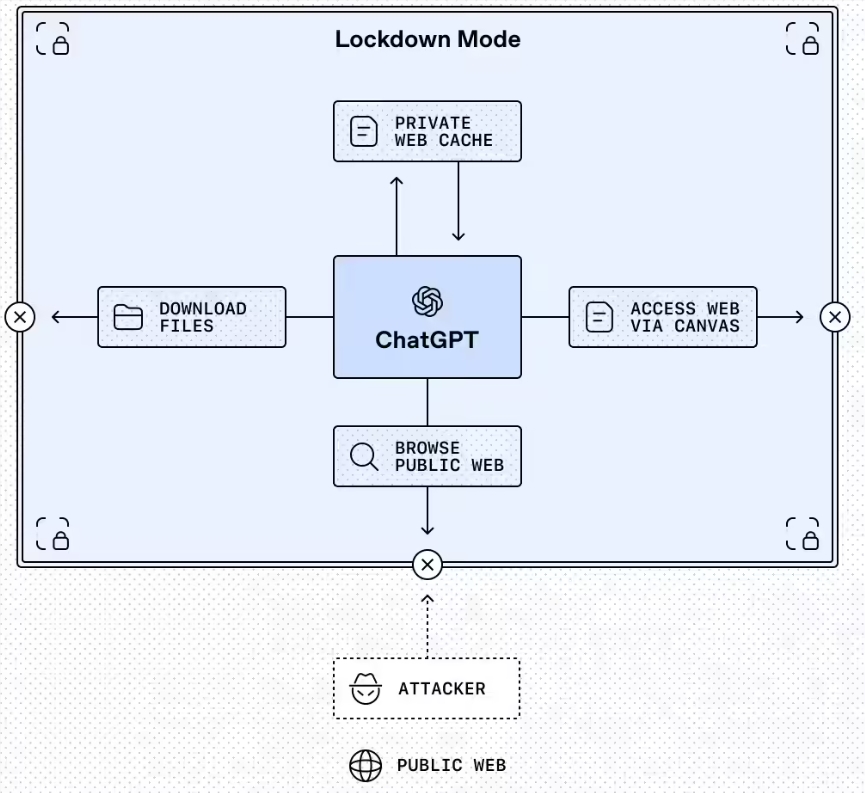

The standout feature is Lockdown Mode, an optional setting currently available for enterprise, education, healthcare, and teacher versions. Think of it as ChatGPT's version of going off-grid when handling sensitive data.

"This isn't your everyday security toggle," explains OpenAI's announcement. "Lockdown Mode fundamentally changes how ChatGPT interacts with the outside world."

The mode works by:

- Restricting web browsing to cached content only

- Disabling features without robust security guarantees

- Giving administrators granular control over permitted applications

The company plans to extend Lockdown Mode to consumer versions in coming months, along with new Compliance API Logs to help organizations track usage.

Clear Warning Labels for Risky Features

The second major change introduces standardized "Elevated Risk" labels across ChatGPT, ChatGPT Atlas, and Codex. These warnings appear whenever users activate functions that could potentially compromise security.

"Some capabilities enhance usefulness but carry risks the industry hasn't fully solved," OpenAI acknowledges. The labels provide:

- Clear explanations of potential dangers

- Suggested mitigation strategies

- Guidance on appropriate use cases

The warnings are particularly crucial when developers enable network access or other functions that might expose private data.

Why These Changes Matter Now

The updates arrive as businesses increasingly connect AI systems to their internal tools and customer-facing applications. While this integration unlocks powerful capabilities, it also creates new vulnerabilities.

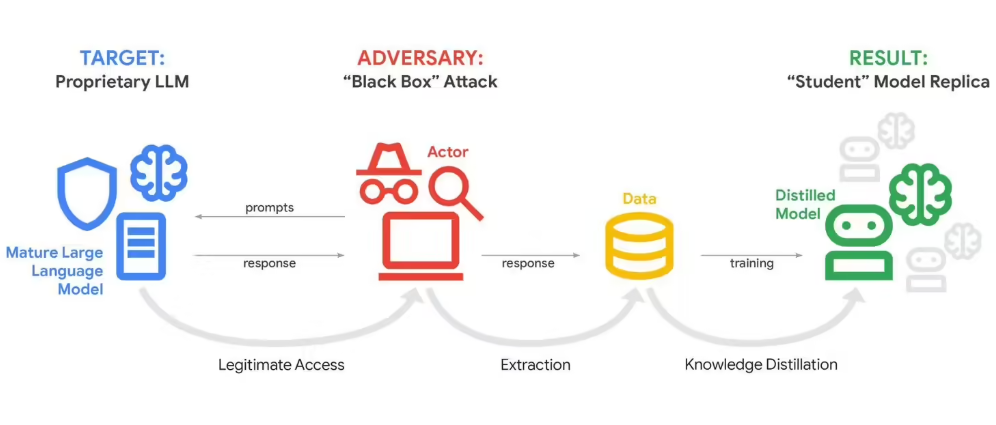

Prompt injection attacks can manipulate AI behavior through carefully crafted inputs - potentially tricking chatbots into revealing confidential information or performing unauthorized actions. Recent incidents across the industry have highlighted these risks.

OpenAI emphasizes these protections complement existing safeguards like sandboxing and URL filtering rather than replacing them.

The company recommends administrators review their security needs carefully before enabling Lockdown Mode, noting its restrictions may impact functionality for general use cases.

Key Points:

- New Lockdown Mode severely limits external interactions for high-security scenarios

- Standardized risk labels help users understand potential dangers before activating features

- Protections target prompt injection attacks that manipulate AI behavior

- Updates currently available for enterprise and institutional versions, coming soon to consumers

- Measures build upon existing sandboxing and data leakage protections