MiniMax M2.5 Goes Open-Source: A Game Changer for Affordable AI Agents

In a move that could democratize access to advanced AI, MiniMax has released its M2.5 model as open-source software on ModelScope. It is the third release in the company's rapid-fire M2 series, which went from its first version to M2.5 in just 108 days, and it brings professional-grade AI capabilities within reach of individual developers and small businesses.

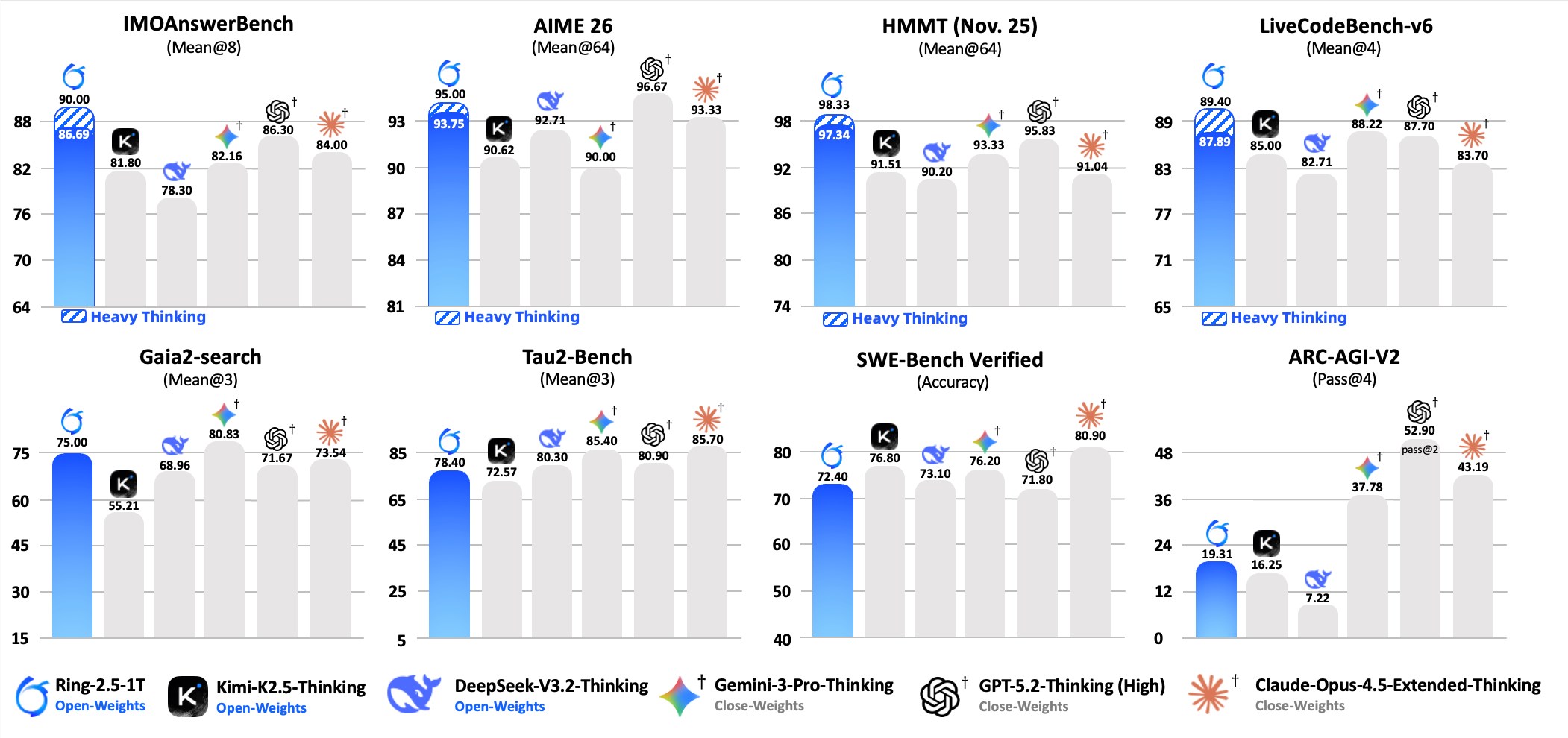

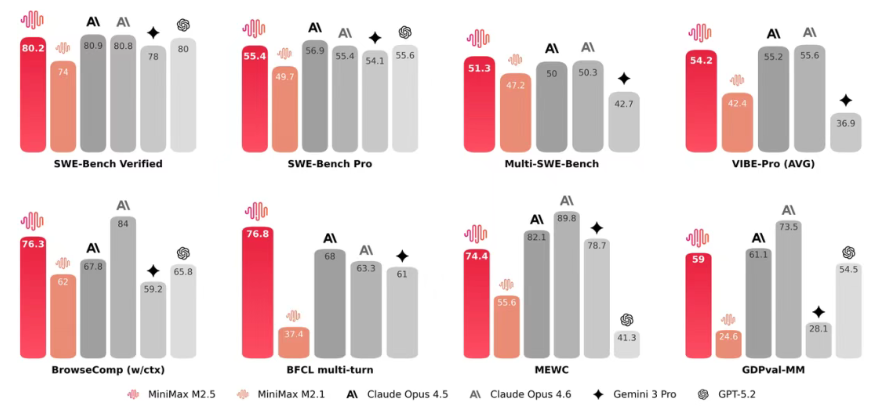

Performance That Turns Heads

The numbers speak volumes: M2.5 scores 80.2% on SWE-Bench Verified, edging past GPT-5.2 and nipping at the heels of Claude Opus 4.5. On multilingual programming tasks, its 51.3% on Multi-SWE-Bench is the highest score reported so far.

What does this mean in practice? Developers report that the model shows unusual sophistication in architectural planning, carrying a project from initial design through final implementation across multiple platforms.

"We've been testing various models for our workflow," shares Marco Lin, CTO at DevFlow Solutions. "M2.5's framework generalization surprised us - it adapted to our legacy systems faster than specialized tools."

Speed Meets Affordability

The real kicker? MiniMax claims M2.5 matches Claude Opus 4.6's response times while running at just one-tenth the cost of comparable models.

Three technological breakthroughs power this efficiency:

- The Forge native Agent RL framework delivers 40x training acceleration

- The CISPO algorithm stabilizes large-scale training

- An innovative reward design balances performance with speed
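To make the CISPO bullet more concrete, here is a minimal sketch based on the publicly described idea behind CISPO: clip the importance-sampling weight and treat it as a constant, rather than dropping clipped tokens from the update as PPO-style clipping does. This is an illustration under stated assumptions, not MiniMax's training code; the function names and the `eps_low` / `eps_high` values are hypothetical.

```python
import math


def cispo_token_weights(logp_new, logp_old, advantages, eps_low=1.0, eps_high=0.2):
    """Coefficient multiplying grad(log pi_new) for each token, CISPO-style.

    The importance ratio is clipped and held constant (stop-gradient), so
    every token keeps a bounded contribution to the update.
    eps_low and eps_high are illustrative values, not published settings.
    """
    weights = []
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
        weights.append(clipped * adv)
    return weights


def ppo_token_weights(logp_new, logp_old, advantages, eps=0.2):
    """Same coefficient under PPO-clip: tokens outside the trust region get 0."""
    weights = []
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped_out = (adv > 0 and ratio > 1.0 + eps) or (adv < 0 and ratio < 1.0 - eps)
        weights.append(0.0 if clipped_out else ratio * adv)
    return weights


# Two tokens with positive advantage; the second has drifted far from the old policy.
logp_new = [-1.0, -0.2]
logp_old = [-1.0, -1.0]
advantages = [1.0, 1.0]

cispo = cispo_token_weights(logp_new, logp_old, advantages)
ppo = ppo_token_weights(logp_new, logp_old, advantages)
```

In this toy case the second token's ratio exceeds the upper bound, so PPO-style clipping removes it from the gradient while the CISPO-style weight keeps it at a capped magnitude.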

The result? Inside MiniMax's own operations, M2.5 now completes 30% of daily tasks and writes 80% of newly committed code, according to the company.

Deployment Options Galore

MiniMax understands one size doesn't fit all:

- No-code users: Jump right in with the web interface featuring over 10,000 pre-built "Experts"

- Developers: Choose between free API calls or official ModelScope integration

- Enterprise needs: Local deployment supports SGLang (high-concurrency), vLLM (mid-range), Transformers (quick tests), and MLX (Mac development)
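The local deployment choices above can be sketched as a small lookup. The backend roles come from the list; the command templates are assumptions based on each tool's usual command-line entry point, the `launch_hint` helper is hypothetical, and the model path is left as a placeholder because the article does not give one.

```python
# Backend roles come from the article; the command templates are assumptions
# based on each tool's usual CLI entry point, not M2.5-specific instructions.
BACKENDS = {
    "high_concurrency": ("SGLang", "python -m sglang.launch_server --model-path {model}"),
    "mid_range": ("vLLM", "vllm serve {model}"),
    "quick_test": ("Transformers", None),  # typically loaded from Python, no server
    "mac_dev": ("MLX", None),              # left unspecified in this sketch
}


def launch_hint(use_case, model="<model-path>"):
    """Return (backend name, command string or None) for a deployment use case."""
    if use_case not in BACKENDS:
        raise ValueError(f"unknown use case: {use_case!r}")
    name, template = BACKENDS[use_case]
    return name, (template.format(model=model) if template else None)


backend, command = launch_hint("high_concurrency")
```

Real deployments of a model this size will likely need extra flags (parallelism, memory limits), so check each backend's documentation before relying on these templates.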

The company also offers two cost-conscious API tiers, Lightning and Standard, priced at roughly 1/10 to 1/20 of competitor rates.
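To see what a 1/10 to 1/20 price ratio means for a monthly bill, here is a small arithmetic sketch. The baseline price and token volume are hypothetical numbers chosen for illustration; they are not actual rates for MiniMax or any competitor.

```python
def projected_cost(baseline_usd_per_mtok, tokens, divisor=1):
    """Cost in USD for a token volume at the baseline price divided by `divisor`."""
    return baseline_usd_per_mtok * tokens / 1_000_000 / divisor


# Hypothetical inputs: a $10 per million tokens baseline and 50M tokens per month.
BASELINE_USD_PER_MTOK = 10
MONTHLY_TOKENS = 50_000_000

baseline_bill = projected_cost(BASELINE_USD_PER_MTOK, MONTHLY_TOKENS)
bill_at_one_tenth = projected_cost(BASELINE_USD_PER_MTOK, MONTHLY_TOKENS, divisor=10)
bill_at_one_twentieth = projected_cost(BASELINE_USD_PER_MTOK, MONTHLY_TOKENS, divisor=20)
```

Under these assumed inputs, a $500 monthly bill would fall to $50 at one-tenth of the rate and $25 at one-twentieth.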

Optimizing Your Experience

The model shines brightest when properly configured:

- Default settings work well (temperature=1.0, top_p=0.95)

- Native support for parallel tool calls saves precious development time

- Comprehensive guides help integrate tool results back into workflows
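The configuration points above can be sketched in a few lines. This example assumes an OpenAI-compatible chat-completions message shape, which the article does not confirm; the model name, the `build_request` and `run_tool_calls` helpers, and the two toy tools are all hypothetical. It builds a request with the quoted sampling defaults, then runs every tool call from a single assistant turn and returns one result message per call.

```python
import json

# Sampling defaults quoted in the article.
DEFAULT_SAMPLING = {"temperature": 1.0, "top_p": 0.95}


def build_request(messages, tools, model="MiniMax-M2.5"):
    """Build a chat-completions style payload (assumed OpenAI-compatible shape)."""
    return {"model": model, "messages": messages, "tools": tools, **DEFAULT_SAMPLING}


def run_tool_calls(assistant_message, registry):
    """Execute every tool call in one assistant turn and return tool messages.

    Parallel tool calls arrive as a list; each result goes back as its own
    message tagged with the matching tool_call_id.
    """
    results = []
    for call in assistant_message.get("tool_calls", []):
        fn = registry[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results


# Hypothetical tools and a hand-written assistant turn, for illustration only.
registry = {
    "get_weather": lambda city: {"city": city, "temp_c": 21},
    "get_time": lambda tz: {"tz": tz, "time": "12:00"},
}
assistant_turn = {
    "role": "assistant",
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_time", "arguments": '{"tz": "UTC"}'}},
    ],
}
tool_messages = run_tool_calls(assistant_turn, registry)
request = build_request([{"role": "user", "content": "Weather and time?"}], tools=[])
```

In a real loop, the assistant turn and the returned tool messages would be appended to the conversation before the next request, so the model can read all results at once.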

As AI becomes increasingly essential yet expensive to implement, MiniMax's open-source approach with M2.5 could mark a turning point, putting sophisticated AI assistance within reach without sophisticated budgets.

Key Points:

- Open-source access on ModelScope lowers barriers to entry

- Benchmark-beating performance in programming and search tasks

- Cost-effective operation at just 10% of comparable models

- Flexible deployment from no-code web apps to local implementations

- Rapid iteration cycle shows MiniMax's commitment to continuous improvement