Meta's New AI Tool Peers Inside Chatbot Brains to Fix Reasoning Flaws

Meta's Breakthrough: Seeing Inside AI Reasoning

In a significant step forward for AI transparency, Meta's research team has developed what might be called an "X-ray machine" for chatbot reasoning. Their newly released CoT-Verifier tool, built on the Llama 3.1 8B Instruct model, gives developers visibility into how large language models work through a chain of thought (CoT) and, more importantly, where their logic breaks down.

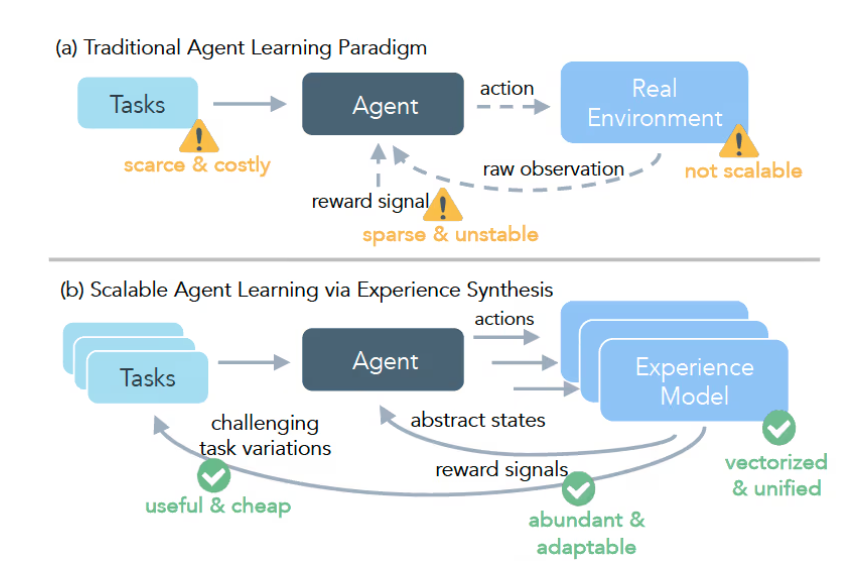

Why Current Methods Fall Short

Until now, checking an AI's reasoning typically meant one of two things:

- Looking only at final outputs (black-box)

- Analyzing activation signals without tracing how they connect (gray-box)

"It's like trying to diagnose a car problem just by listening to the engine," explains lead researcher Mark Chen. "You might hear something's wrong, but you can't see which piston is misfiring."

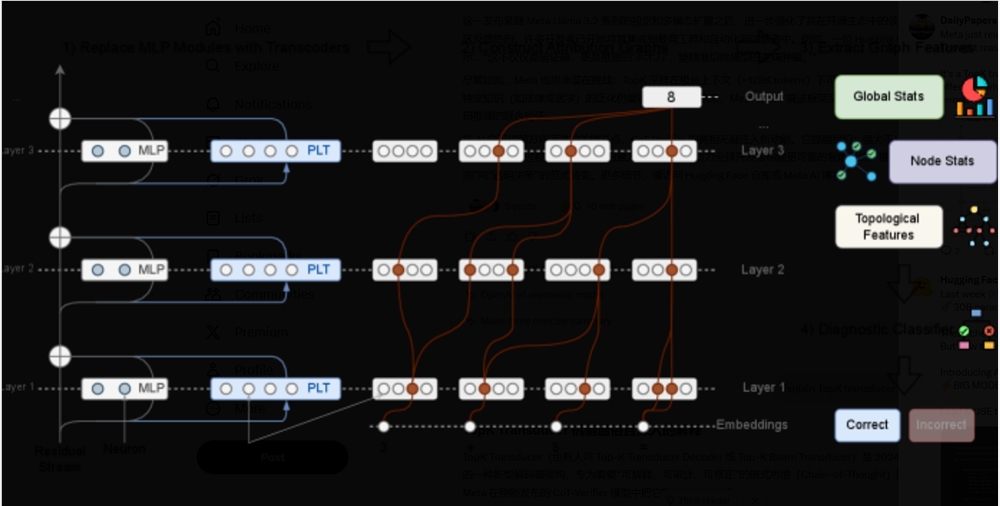

The Meta team discovered that correct and incorrect reasoning steps leave dramatically different "fingerprints" in what they call attribution graphs, which are essentially maps of how the model processes information internally. Correct reasoning creates clean, efficient patterns, while errors produce chaotic detours.
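To make the idea of a structural "fingerprint" concrete, here is a minimal sketch in Python. It assumes an attribution graph can be represented as a list of weighted, directed edges; the `Edge` type, the node names, and the chosen statistics (density, mean absolute weight) are illustrative assumptions, not the features Meta's tool actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    """One directed edge in a toy attribution graph (hypothetical format)."""
    src: str
    dst: str
    weight: float  # attribution strength on an assumed, arbitrary scale


def graph_features(edges):
    """Return simple structural statistics for a directed, weighted graph."""
    nodes = {e.src for e in edges} | {e.dst for e in edges}
    n, m = len(nodes), len(edges)
    # Density: fraction of all possible directed edges that are present.
    density = m / (n * (n - 1)) if n > 1 else 0.0
    mean_abs_weight = sum(abs(e.weight) for e in edges) / m if m else 0.0
    return {
        "nodes": n,
        "edges": m,
        "density": density,
        "mean_abs_weight": mean_abs_weight,
    }


# A "clean" step: one strong path from input to output.
clean = [Edge("input", "f1", 0.9), Edge("f1", "output", 0.8)]

# A "messy" step: many weak, tangled edges, including a cycle between features.
messy = [
    Edge("input", "f1", 0.3), Edge("input", "f2", 0.2),
    Edge("f1", "f2", 0.1), Edge("f2", "f1", 0.1),
    Edge("f1", "output", 0.2), Edge("f2", "output", 0.1),
]

clean_stats = graph_features(clean)
messy_stats = graph_features(messy)
```

In this toy example the messy graph is denser and carries weaker edges on average than the clean one, which is the kind of contrast a downstream classifier could pick up on.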

How It Works: The Science Behind the Breakthrough

The system works by training classifiers to recognize these structural patterns:

- Pattern Recognition: The tool identifies hallmark features of flawed reasoning paths

- Error Prediction: It flags likely mistakes before they affect outputs

- Targeted Correction: Developers can then adjust specific components
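The classification step can be sketched roughly as follows. This is not Meta's implementation: the two-number feature vectors, the toy labels, and the hand-written logistic regression are all assumptions chosen to keep the example self-contained, and the function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit a logistic regression with plain stochastic gradient descent."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log loss with respect to the logit
            w = [wj - lr * err * xj for wj, xj in zip(w, xi)]
            b -= lr * err
    return w, b


def predict_error_prob(w, b, x):
    """Estimated probability that a reasoning step is incorrect."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Toy data (assumed): each row is [graph density, mean absolute edge weight]
# for one reasoning step; label 1 marks a step known to be incorrect.
X = [
    [0.33, 0.85], [0.30, 0.80], [0.35, 0.90],  # correct steps
    [0.50, 0.17], [0.55, 0.20], [0.60, 0.15],  # incorrect steps
]
y = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(X, y)

# Flag any step whose estimated error probability exceeds 0.5.
flagged = [predict_error_prob(w, b, x) > 0.5 for x in X]
```

A real verifier would need far richer features and much more labeled data; the point here is only that once structural features are extracted, flagging a suspicious step reduces to an ordinary supervised classification problem.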

Early tests show particular promise for complex tasks requiring multi-step logic, where traditional methods often miss subtle errors cascading through later stages.

What This Means for AI Development

The implications extend far beyond simple error detection:

- New Training Methods: Models could potentially learn from their own reasoning mistakes

- Domain-Specific Improvements: Different error patterns emerge in math vs language tasks

- Foundation for Smarter AI: Understanding failure modes helps build more robust systems

The team emphasizes this isn't just about fixing today's chatbots. "We're laying groundwork," says Chen. "Future systems that explain their thinking could transform everything from medical diagnosis to legal analysis."

The CoT-Verifier is now available on Hugging Face as Meta continues refining its capabilities.

Key Points:

- White-box visibility: Inspects how an LLM reasons internally rather than relying only on outputs or raw activations

- Structural analysis: Identifies distinct patterns between correct/incorrect logic paths

- Beyond detection: Enables targeted corrections to flawed reasoning components

- Open access: Available now on Hugging Face