Meituan's New AI Model Excels at Complex Problem-Solving

Meituan's AI Breakthrough: Smarter Thinking for Complex Tasks

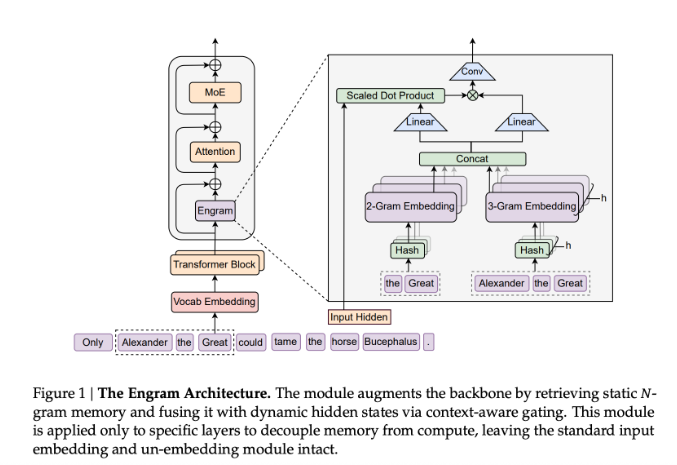

Meituan's research team has released LongCat-Flash-Thinking-2601, its newest open-source reasoning model. Rather than following a single chain of reasoning from start to finish, the model introduces a "rethinking mode" that splits analysis into two phases: a parallel thinking phase, followed by a summarization phase that pulls the results together.
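
To make the two-phase idea concrete, here is a minimal Python sketch of a "think in parallel, then summarize" loop. It is not Meituan's implementation: the `rethink` function, the toy `careful` and `hasty` thinkers, and the `majority_vote` summarizer are all hypothetical stand-ins, and a simple vote is swapped in for whatever summarization the model actually performs.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

Thinker = Callable[[str], str]
Summarizer = Callable[[str, List[str]], str]


def rethink(question: str, thinkers: List[Thinker], summarize: Summarizer) -> str:
    """Run every thinker on the question in parallel, then merge the answers."""
    # Phase 1 (parallel thinking): each thinker works independently.
    with ThreadPoolExecutor(max_workers=len(thinkers)) as pool:
        futures = [pool.submit(thinker, question) for thinker in thinkers]
        candidates = [future.result() for future in futures]
    # Phase 2 (summarization): reduce the candidates to one final answer.
    return summarize(question, candidates)


def majority_vote(question: str, candidates: List[str]) -> str:
    """Hypothetical summarizer: return the most common candidate answer."""
    return Counter(candidates).most_common(1)[0][0]


# Toy thinkers standing in for independent reasoning passes of a model.
def careful(question: str) -> str:
    return "42"


def hasty(question: str) -> str:
    return "41"


answer = rethink("What is 6 * 7?", [careful, careful, hasty], majority_vote)
print(answer)
```

In a real system each thinker would be a separate model call, and the summarizer would likely be another model pass that reads all the candidates rather than a vote.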

Why This Matters

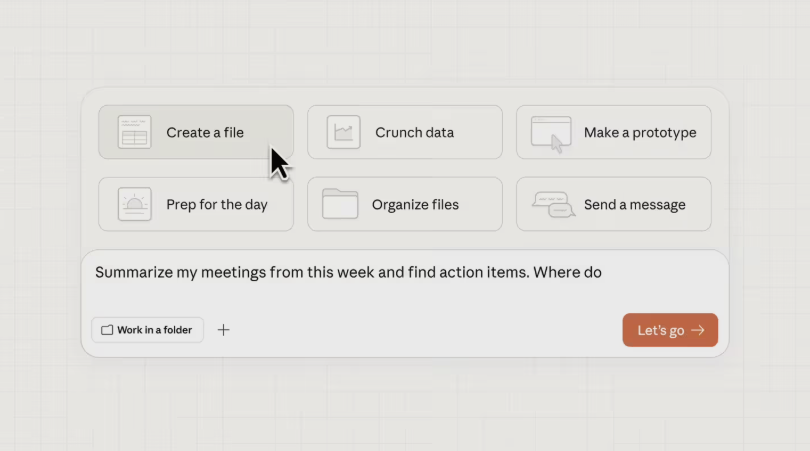

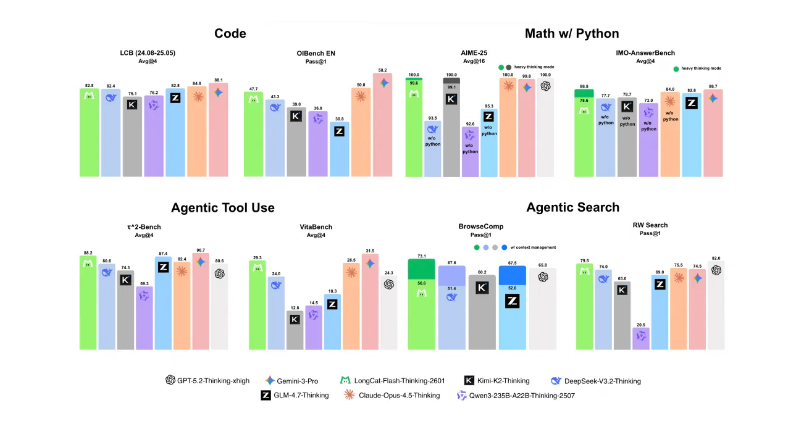

The model's benchmark results back up the design. It scores 82.8 on programming evaluations and a perfect 100 on mathematical reasoning tests. But what really sets it apart is how it handles tools. Imagine an assistant that not only understands your request but also works out which digital tools to call to get the job done.

Built for the Real World

"We didn't just train this model in ideal conditions," explains the development team. They subjected the AI to what they call "environment expansion" - throwing everything from API failures to missing data at it during training. The result? An unusually resilient system that keeps working when others might crash.

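As a rough illustration of training against unreliable tools, the Python sketch below wraps a tool so that it sometimes raises an error or silently drops a field. It is an assumption-laden toy, not Meituan's training setup: `make_noisy`, `ToolError`, `lookup_order`, `call_with_retry`, and the failure rates are all hypothetical names and values chosen for the example. The retry helper shows the kind of behavior such noise is meant to encourage: a caller that tolerates failures instead of crashing.

```python
import random
from typing import Any, Callable, Dict, Optional


class ToolError(Exception):
    """Simulated API failure (hypothetical error type for this sketch)."""


def make_noisy(tool: Callable[..., Dict[str, Any]], rng: random.Random,
               fail_rate: float = 0.2, drop_rate: float = 0.2):
    """Wrap a tool so calls may fail outright or return incomplete data."""
    def noisy(*args: Any, **kwargs: Any) -> Dict[str, Any]:
        if rng.random() < fail_rate:
            raise ToolError("simulated API failure")
        result = dict(tool(*args, **kwargs))
        if result and rng.random() < drop_rate:
            # Simulate missing data by removing one field at random.
            result.pop(rng.choice(sorted(result)))
        return result
    return noisy


def lookup_order(order_id: str) -> Dict[str, Any]:
    """Hypothetical tool that would normally call a real API."""
    return {"order_id": order_id, "status": "delivered", "eta_min": 0}


def call_with_retry(tool: Callable[..., Dict[str, Any]], *args: Any,
                    attempts: int = 3) -> Optional[Dict[str, Any]]:
    """Retry a flaky tool a few times; return None if every attempt fails."""
    for _ in range(attempts):
        try:
            return tool(*args)
        except ToolError:
            continue
    return None


flaky_lookup = make_noisy(lookup_order, random.Random(0))
print(call_with_retry(flaky_lookup, "A123"))
```
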
For developers, the open-source approach makes this particularly exciting. Complete access to weights and inference code means teams can build upon Meituan's work rather than starting from scratch. The model is already available on GitHub, Hugging Face, and ModelScope, with live demos at longcat.ai.

Key Points:

- Human-like thinking: Introduces innovative two-phase "rethinking mode"

- Top-tier performance: Scores 100/100 on mathematical reasoning tests

- Real-world ready: Trained with intentional noise and failures for robustness

- Developer-friendly: Fully open-sourced with weights and code available