Falcon H1R7B: The Compact AI Model Outperforming Larger Rivals

Falcon H1R7B Proves Size Isn't Everything in AI

Abu Dhabi's Technology Innovation Institute (TII) has turned heads with its latest release, Falcon H1R7B. This compact open-source language model has just 7 billion parameters but delivers reasoning performance that gives much larger models a run for their money.

Smart Training Behind the Power

TII's engineers trained the model in two phases:

Phase One: Building on their existing Falcon-H1-7B foundation, they focused intensive training on mathematics, programming, and scientific reasoning through "Cold Start Supervised Fine-Tuning" (SFT).

Phase Two: They then applied reinforcement learning with Group Relative Policy Optimization (GRPO), using reward signals to sharpen the model's logical reasoning and diversify its outputs.
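To make the reward idea in Phase Two concrete, here is a minimal sketch of the group-relative scoring step that gives GRPO (Group Relative Policy Optimization) its name: several answers are sampled for one prompt, and each answer's reward is normalized against the rest of its group. The function name, the example rewards, and the choice of population standard deviation are illustrative assumptions, not details from TII's training setup.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and spread of its own group.

    `rewards` holds one scalar reward per sampled answer to the same prompt.
    Using the population standard deviation is an assumption of this sketch.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps avoids division by zero when every answer gets the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers (1.0 = correct, 0.0 = wrong).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Answers that beat their group's average get a positive advantage and are reinforced; answers below it get a negative one. No separate value model is needed to provide the baseline.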

"We're seeing smaller models achieve what previously required massive parameter counts," explains Dr. Sarah Khalil, lead researcher on the project. "It's about smarter training, not just bigger models."

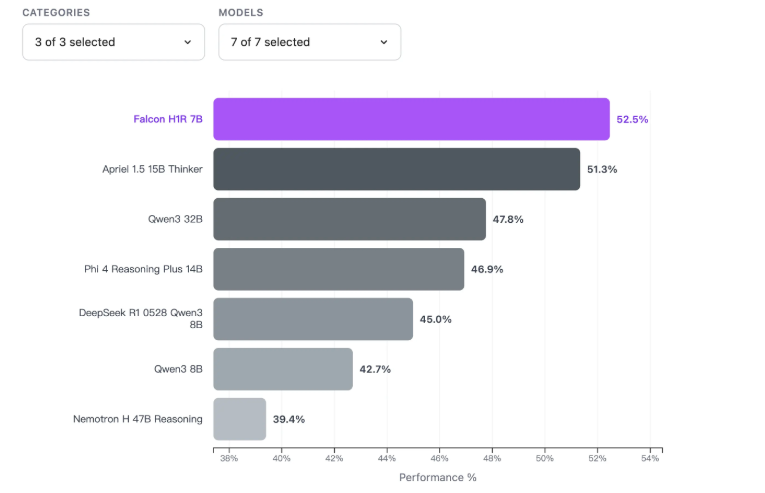

Performance That Surprises

The numbers tell an impressive story:

- 88.1% on AIME-24 math tests (beating many 15B models)

- 68.6% on LiveCodeBench (LCB) v6 coding challenges (top among sub-8B models)

- Competitive scores on MMLU-Pro and GPQA general reasoning tests

The secret sauce? Paired with the "Deep Think with Confidence" (DeepConf) test-time method, the model generates fewer tokens while improving accuracy, like an expert who gets to the point without unnecessary rambling.
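The article does not spell out how DeepConf works internally, so the following is only a rough sketch of the general idea behind confidence-based answer selection: sample several reasoning traces, discard the least confident ones, and let the remaining traces vote with their confidence as weight. The function name, the `keep_fraction` parameter, and the example scores are all hypothetical.

```python
from collections import defaultdict


def confidence_weighted_vote(traces, keep_fraction=0.5):
    """Pick an answer from (answer, confidence) pairs.

    Keeps only the most confident fraction of traces, then sums the
    confidence of each surviving answer and returns the highest total.
    """
    ranked = sorted(traces, key=lambda t: t[1], reverse=True)
    keep = max(1, int(len(ranked) * keep_fraction))
    totals = defaultdict(float)
    for answer, confidence in ranked[:keep]:
        totals[answer] += confidence
    return max(totals, key=totals.get)


# Hypothetical traces: "17" wins a plain majority vote (4 vs 3),
# but its traces are all low-confidence.
traces = [
    ("42", 0.91), ("42", 0.88), ("42", 0.60),
    ("17", 0.35), ("17", 0.30), ("17", 0.28), ("17", 0.25),
]
winner = confidence_weighted_vote(traces, keep_fraction=0.5)
print(winner)
```

In this toy example the low-confidence majority is filtered out before voting. A system that can also stop low-confidence traces early would spend fewer tokens on them, which is the kind of saving the article describes.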

Built for Real-World Use

What really sets Falcon H1R7B apart is its practical efficiency:

- Processes up to 1,500 tokens/second per GPU, nearly double the throughput of some competitors

- Maintains strong performance even on lower-powered hardware

- Uses hybrid Transformer/Mamba architecture for better long-context handling
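As a back-of-the-envelope illustration of the throughput figure above, the sketch below estimates how long a batch of generation work might take. It assumes the quoted peak rate is sustained and that throughput scales linearly with GPU count; neither is guaranteed in practice. The function name and the token budget are hypothetical.

```python
def generation_seconds(total_tokens, tokens_per_second=1500.0, num_gpus=1):
    """Estimate wall-clock seconds to generate `total_tokens` tokens.

    Assumes the peak per-GPU rate holds and scales linearly across GPUs.
    """
    if tokens_per_second <= 0 or num_gpus < 1:
        raise ValueError("tokens_per_second must be > 0 and num_gpus >= 1")
    return total_tokens / (tokens_per_second * num_gpus)


# Hypothetical workload: 90,000 generated tokens across a batch of requests.
seconds = generation_seconds(90_000)
print(f"{seconds:.0f} s on one GPU")
```

Under these assumptions, halving the per-GPU rate doubles the wait. That is why throughput matters for reasoning models that emit long chains of thought.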

The model is already available on Hugging Face in both full and quantized versions, lowering barriers for developers and researchers.

Key Points:

- Compact powerhouse: 7B parameters outperform many larger models

- Specialized training: Two-phase approach maximizes reasoning capabilities

- Real-world ready: High throughput works across hardware setups

- Open access: Available now for community use and development