Jan's New AI Model Outshines Google Gemini in Long-Term Tasks

Jan's Breakthrough AI Model Sets New Standard for Reliability

In the race to create AI that doesn't just think but reliably acts, the open-source Jan team has pulled ahead with their latest release. Jan-v2-VL-Max isn't just another large multimodal model - it's specifically engineered to address one of artificial intelligence's most frustrating limitations: the tendency to veer off course during extended tasks.

Solving the "Error Snowball" Problem

Anyone who's worked with AI assistants knows the frustration - small mistakes early in a process compound into complete failures later. Current multimodal agents struggle particularly with long sequences like automated UI operations or cross-application workflows. The Jan team calls this "error accumulation," where minor deviations become major derailments.
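
To see why small mistakes compound so quickly, here is a minimal sketch under a deliberately simplified assumption: every step succeeds independently with the same probability. This is not the Jan team's own model of error accumulation, and the function names are hypothetical; it only illustrates the arithmetic behind the "snowball."

```python
import math


def chain_success(step_accuracy: float, steps: int) -> float:
    """Probability that all `steps` steps succeed, assuming independent steps."""
    return step_accuracy ** steps


def horizon(step_accuracy: float, target: float = 0.5) -> int:
    """Longest task length whose overall success rate stays at or above `target`."""
    if not 0.0 < step_accuracy < 1.0:
        raise ValueError("step_accuracy must be strictly between 0 and 1")
    return math.floor(math.log(target) / math.log(step_accuracy))


# Compare two hypothetical agents on a 50-step task.
for p in (0.95, 0.99):
    print(f"per-step accuracy {p}: "
          f"50-step success {chain_success(p, 50):.3f}, "
          f"horizon at 50% success {horizon(p)} steps")
```

Under this toy assumption, an agent that is right 99% of the time per step finishes a 50-step task only about 60% of the time, and one that is right 95% of the time finishes it less than 10% of the time. Small gains in per-step reliability translate into much longer usable task lengths.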

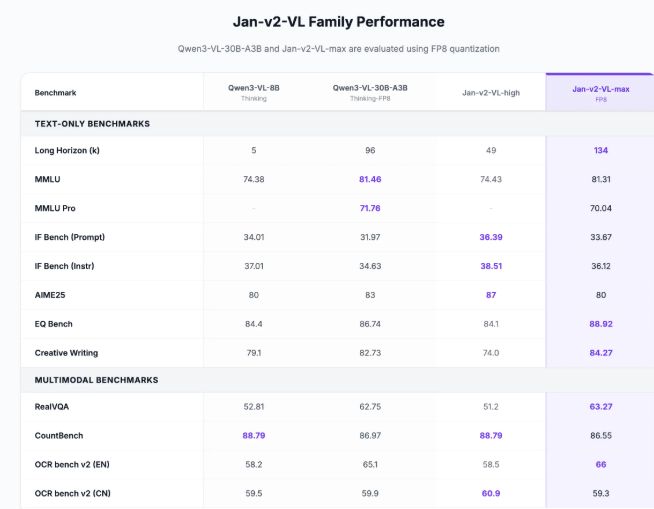

Their solution? RLVR (reinforcement learning with verifiable rewards), applied through LoRA (low-rank adaptation) adapters rather than a full retraining of the model. This approach preserves the Qwen3-VL-30B base model's capabilities while improving consistency across many steps. The result, according to the team, is an AI that can accurately complete dozens of steps without losing its way.

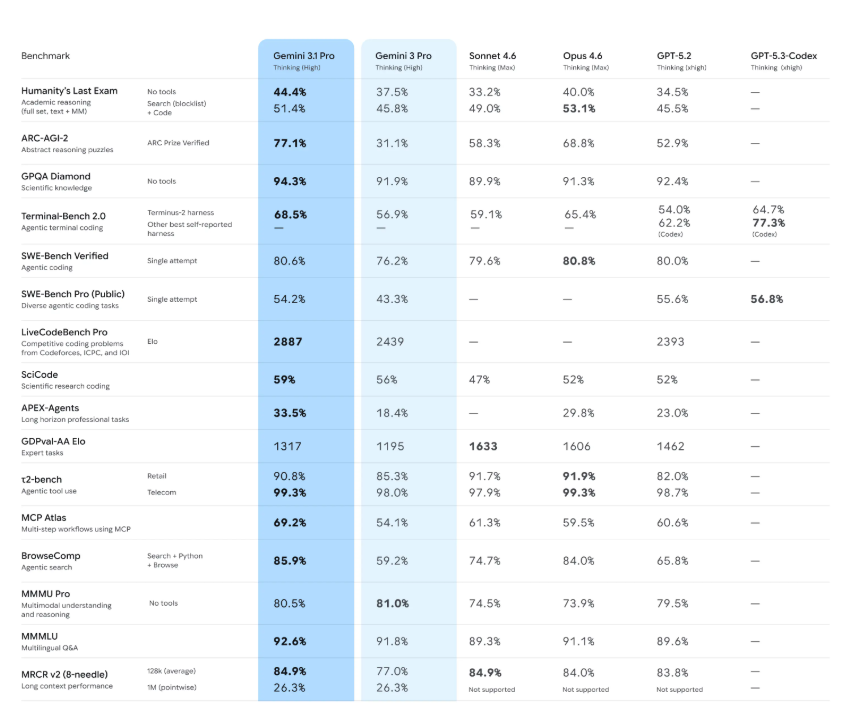

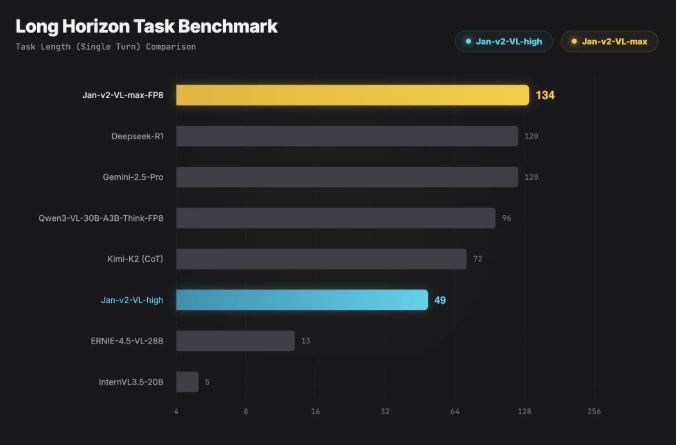

Benchmark-Busting Performance

The proof comes in specialized testing. On the "Illusion of Diminishing Returns" benchmark - which measures how quickly an AI's performance degrades during prolonged tasks - Jan-v2-VL-Max maintains stability where others falter, outperforming not just Google's Gemini 2.5 Pro but also DeepSeek R1.

Designed for Real-World Use

The Jan team hasn't just built impressive tech - they've made it accessible:

- Web interface: Upload images and test multi-step processes without coding

- Local deployment: Optimized vLLM solution runs efficiently on consumer GPUs

- Integration-ready: Developers can easily incorporate it into existing systems
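
As a rough illustration of the integration path, the sketch below builds a request for vLLM's OpenAI-compatible chat completions endpoint, which a local server started with `vllm serve` typically exposes. The model id, server URL, and helper name are assumptions made for this example and are not confirmed by the Jan team; check the official model card for the real values.

```python
import base64
import json

# Assumptions for illustration only: placeholder model id and local server URL.
MODEL_ID = "janhq/Jan-v2-VL-max"
SERVER_URL = "http://localhost:8000/v1/chat/completions"


def build_request(image_bytes: bytes, instruction: str, model: str = MODEL_ID) -> dict:
    """Build an OpenAI-style chat payload carrying one image and one text prompt."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    # The image travels inline as a base64 data URL.
                    {"type": "image_url",
                     "image_url": {"url": f"data:image/png;base64,{encoded}"}},
                    {"type": "text", "text": instruction},
                ],
            }
        ],
        "max_tokens": 512,
    }


# Placeholder bytes stand in for a real screenshot file.
payload = build_request(b"\x89PNG placeholder bytes",
                        "Describe the next UI action to take.")
body = json.dumps(payload)
# To send it, POST `body` to SERVER_URL with the header
# Content-Type: application/json once a server is running.
```

Keeping the payload builder separate from the network call makes it easy to reuse in an agent loop, where each step would send a fresh screenshot along with the running instruction.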

Reliable long-horizon execution matters most in fields like UI automation, robotics, and multi-tool collaboration, where a single task can span dozens of dependent steps.

Why This Matters Now

As AI transitions from dazzling demos to daily tools, reliability becomes paramount. While competitors chase headline-grabbing capabilities, Jan focuses on making AI you can actually depend on when it matters most.

The model represents more than technical achievement - it signals a shift in priorities from "smart" to "steady," from flashy single responses to trustworthy extended performance.

Key Points:

- 30-billion-parameter multimodal model excels at long-horizon tasks

- Solves "error accumulation" problem plaguing current AI agents

- Outperforms Google Gemini 2.5 Pro in stability benchmarks

- Offers both web interface and efficient local deployment

- Marks shift toward reliability-focused AI development