DeepSeek's Memory Boost: How AI Models Are Getting Smarter

DeepSeek's Breakthrough Makes AI More Efficient

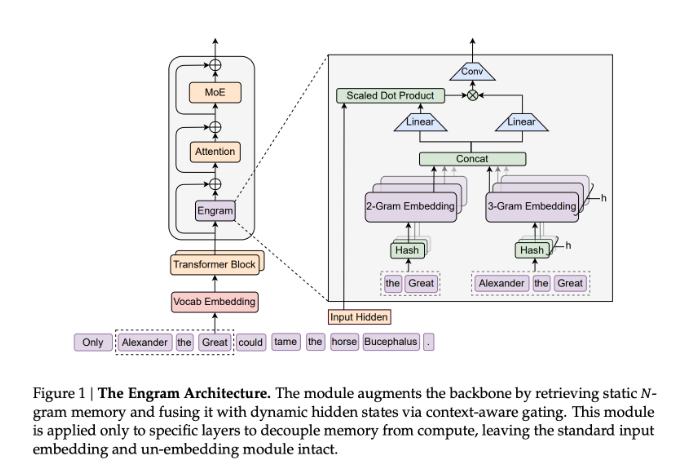

Imagine an assistant who has to work out the same simple facts from scratch every time you ask, instead of just remembering them. That's essentially how today's AI models operate. DeepSeek's new Engram module changes this by giving artificial intelligence something resembling human memory.

Solving AI's Forgetfulness Problem

Traditional Transformer models have no built-in way to look up stored facts, so they waste computation reconstructing the same information over and over. "It's like rebuilding your grocery list from scratch every time you go to the store," explains one researcher familiar with the project. The Engram module addresses this by adding a specialized memory for frequently used knowledge, which the model can simply look up instead of recomputing.

Unlike approaches that try to replace existing architectures, Engram works alongside them. It modernizes the classic N-gram technique (working with short runs of consecutive tokens) into a scalable lookup system that operates at lightning speed: it retrieves information in constant time (O(1) complexity, for tech-savvy readers), no matter how large the memory grows.
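To make the idea of constant-time lookup concrete, here is a minimal Python sketch of a generic hashed N-gram memory. It is not DeepSeek's implementation: the table size, vector width, bigram window, hash function, and the names `memory_table`, `ngram_slot`, and `lookup` are all illustrative assumptions. The point is that fetching a stored vector costs one hash and one array index, however large the table is.

```python
import random

# Illustrative assumptions, not Engram's real settings.
TABLE_SIZE = 4096  # number of memory slots
EMBED_DIM = 4      # width of each stored vector
N = 2              # key on the last two token IDs (a bigram)

_rng = random.Random(0)
# In a real model these vectors would be learned; here they are random placeholders.
memory_table = [[_rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)]
                for _ in range(TABLE_SIZE)]


def ngram_slot(ngram: tuple[int, ...]) -> int:
    """Hash an N-gram of token IDs to a row index with a simple polynomial hash."""
    h = 0
    for token_id in ngram:
        h = (h * 1_000_003 + token_id) % TABLE_SIZE
    return h


def lookup(context: list[int]) -> list[float]:
    """Return the stored vector for the last N tokens: one hash plus one index, i.e. O(1)."""
    key = tuple(context[-N:])
    return memory_table[ngram_slot(key)]


if __name__ == "__main__":
    # Two contexts ending in the same bigram (42, 7) hit the same memory slot.
    print(lookup([5, 42, 7]) == lookup([99, 42, 7]))  # True
```

A table this small will map some unrelated N-grams to the same slot; the sketch ignores such collisions for simplicity.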

Real-World Performance Gains

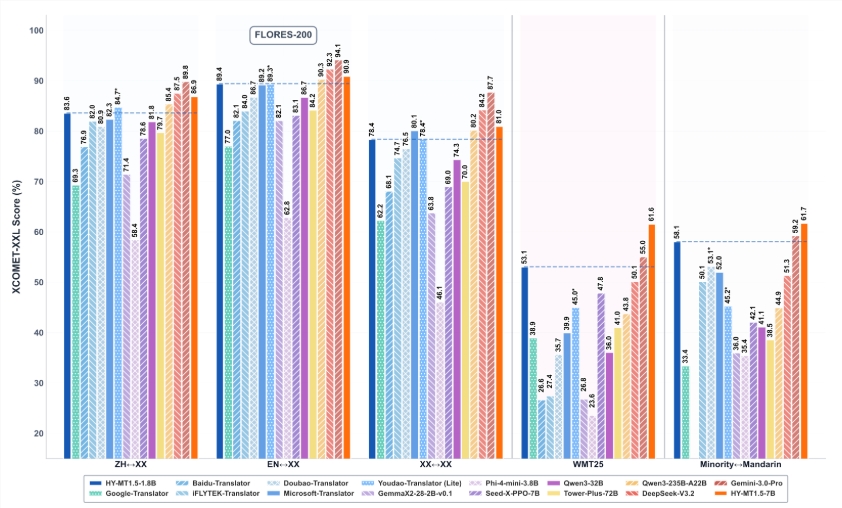

The DeepSeek team put Engram through rigorous testing, training models on 262 billion tokens of data and evaluating them across a range of benchmarks. The results turned heads:

- Models that devoted roughly 20-25% of their parameter budget to Engram memory showed the largest accuracy gains

- Both the 27-billion and 40-billion parameter versions outperformed conventional baseline models of comparable size across various benchmarks

- Gains were especially notable in mathematics, coding, and general-knowledge tests
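As a back-of-the-envelope illustration of what a 20-25% allocation means, the snippet below splits a 27-billion-parameter budget. This rests on a simplifying assumption: it treats the model's full parameter count as the budget, which may not match exactly how the DeepSeek team accounts for Engram's share. `engram_split` is a hypothetical helper.

```python
def engram_split(total_params: float, fraction: float) -> tuple[float, float]:
    """Split a parameter budget into (Engram memory, everything else)."""
    memory = total_params * fraction
    return memory, total_params - memory


# Illustrative only: treats the whole 27B model as the budget being split.
for fraction in (0.20, 0.25):
    memory, rest = engram_split(27e9, fraction)
    print(f"{fraction:.0%} -> {memory / 1e9:.2f}B in Engram, {rest / 1e9:.2f}B elsewhere")
```

Under this assumption, a 20% share works out to 5.40B parameters of memory (21.60B elsewhere) and a 25% share to 6.75B (20.25B elsewhere).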

The innovation shines brightest when handling long documents. With context windows stretching to 32,768 tokens (about 50 pages of text), Engram-equipped models maintained high accuracy on "needle-in-a-haystack" tests, which check whether a model can retrieve a specific fact buried somewhere in a long document.
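The needle-in-a-haystack idea is simple to set up. Below is a minimal Python sketch of how such a probe can be built: a known fact (the "needle") is buried at a chosen depth in filler text, and the model's answer is checked for it. This is a generic illustration, not DeepSeek's evaluation code; the function names, the filler sentences, and measuring length in sentences rather than tokens are simplifying assumptions, and the actual model call is left out.

```python
import random


def build_haystack(needle: str, filler_sentences: list[str],
                   n_filler: int, depth: float, seed: int = 0) -> str:
    """Bury `needle` at relative `depth` (0.0 = start, 1.0 = end) among filler sentences."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    rng = random.Random(seed)
    haystack = [rng.choice(filler_sentences) for _ in range(n_filler)]
    haystack.insert(round(depth * n_filler), needle)
    return " ".join(haystack)


def found_needle(model_answer: str, expected: str) -> bool:
    """Score a response by checking whether it contains the expected fact."""
    return expected.lower() in model_answer.lower()


if __name__ == "__main__":
    filler = ["The weather was mild.", "Traffic moved slowly.", "The meeting ran long."]
    doc = build_haystack("The vault code is 4817.", filler, n_filler=2000, depth=0.5)
    prompt = doc + "\n\nQuestion: What is the vault code?"
    # A real test would send `prompt` to the model; here we fake a reply.
    print(found_needle("The code is 4817.", "4817"))  # True
```

Sweeping `depth` from 0.0 to 1.0 while growing `n_filler` maps out where in a long context a model starts losing track of the needle.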

Why This Matters Beyond Benchmarks

What makes Engram special isn't just better test scores. By offloading routine memory tasks, the system effectively gives AI models deeper thinking capacity without requiring more computing power. It's akin to freeing up mental RAM so the system can tackle tougher problems.

The technology could lead to:

- More responsive chatbots that remember your preferences

- Faster research assistants capable of handling lengthy documents

- Reduced energy consumption for AI services

The DeepSeek team continues refining Engram, but early results suggest we're witnessing an important step toward more efficient artificial intelligence.

Key Points:

- Memory Upgrade: Engram creates specialized pathways for storing common knowledge while preserving reasoning capacity

- Better Performance: Tested models showed improvements in mathematics (GSM8K), general knowledge (MMLU), and coding tasks

- Long Document Mastery: The system maintains accuracy even when processing texts of up to 32,768 tokens, roughly 50 pages

- Energy Efficient: Same computing power delivers smarter results by eliminating redundant calculations