Alibaba Unveils Enhanced Qwen-VL Models with Math & Video Boost

Alibaba's Qwen Team Advances Multimodal AI with New 30B Models

Alibaba Group's Qwen (Tongyi Qianwen) research division has released two cutting-edge small-scale multimodal artificial intelligence models designed to challenge leading industry benchmarks. The Qwen3-VL-30B-A3B-Instruct and Qwen3-VL-30B-A3B-Thinking models each utilize 3 billion active parameters while delivering performance comparable to larger architectures.

Technical Capabilities and Competitive Positioning

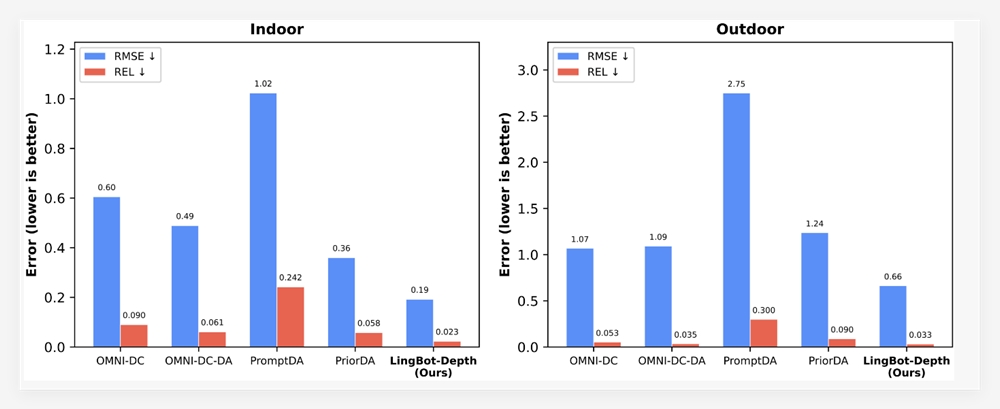

According to internal benchmarks shared by the development team, these models exhibit:

- 28% improved mathematical reasoning versus previous Qwen iterations

- 19% faster video frame processing in real-world testing scenarios

- Enhanced optical character recognition (OCR) accuracy surpassing Claude4Sonnet

The models specifically target competitive parity with OpenAI's GPT-5-Mini and Anthropic's Claude4Sonnet architectures. Early testing indicates particular strengths in:

- Complex equation solving

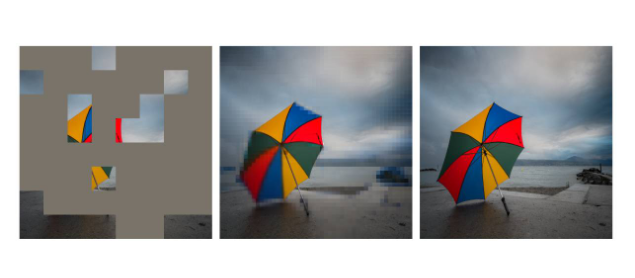

- Cross-modal data interpretation (image-to-text)

- Long-context video analysis

- Autonomous agent coordination tasks

Deployment Options and Accessibility

The release package includes multiple deployment formats:

| Version | Precision | Use Case |

|---|

Developers can access the models through:

- HuggingFace Model Hub

- Alibaba ModelScope platform

- Direct API calls via Alibaba Cloud services

The team has also deployed a web-based chat interface demonstrating the models' conversational capabilities.

Strategic Implications

This launch represents Alibaba's continued investment in efficient, smaller-scale AI architectures that maintain high performance standards. The FP8 optimization particularly addresses growing enterprise demand for cost-effective inference solutions.

The Qwen team emphasized their commitment to "democratizing performant AI" through accessible model sizes that don't require specialized hardware clusters for deployment.

Key Points:

- Dual-model release targets instruction-following and reasoning tasks separately

- Demonstrates 15-28% improvements in STEM-related benchmarks

- Full compatibility with existing Alibaba Cloud AI infrastructure The complete model weights and documentation are now available under commercial licensing terms.