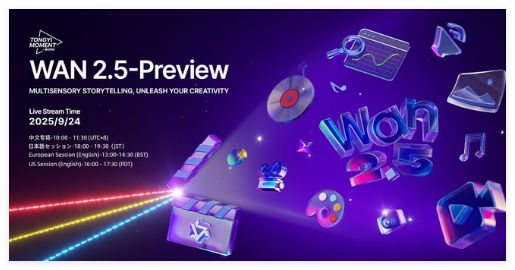

Wan2.5-Preview Unveiled: Multimodal AI for Cinematic Video

Wan2.5-Preview Revolutionizes AI Visual Generation

Wan2.5-Preview, a multimodal model for visual content creation, was released today. Built on a unified architecture, it focuses on three areas: synchronized audio-video generation, cinematic aesthetics, and precise image editing.

Unified Multimodal Architecture

At its core, Wan2.5-Preview uses a single framework that processes and generates content across four modalities:

- Text

- Images

- Videos

- Audio

Training jointly on these data types improves alignment between modalities, which is critical for keeping complex multimedia outputs consistent. The development team also applied Reinforcement Learning from Human Feedback (RLHF) to tune outputs toward human aesthetic preferences.

Cinematic Video Generation Breakthroughs

The video generation capabilities represent Wan2.5-Preview's most striking advancement:

- Synchronized Audio-Visual Production: The model natively generates high-fidelity video together with synchronized audio, including dialogue, sound effects, and background music.

- Flexible Input Combinations: Creators can combine text prompts, reference images, and audio clips as input sources.

- Professional-Grade Output: The system produces stable 1080p videos up to 10 seconds long with cinematic framing, lighting, and motion dynamics.
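
To make the input combinations and output limits above concrete, here is a minimal sketch of how a client might describe and check a generation request before sending it. The `VideoRequest` class, its field names, the default duration, and the rule that at least one input must be present are all hypothetical and chosen for illustration; the article does not describe the actual Wan2.5-Preview API. Only the 10-second ceiling comes from the text.

```python
from dataclasses import dataclass
from typing import Optional

# From the article: generated videos run up to 10 seconds.
MAX_DURATION_S = 10


@dataclass
class VideoRequest:
    """Hypothetical request shape; NOT the real Wan2.5-Preview API."""
    text_prompt: Optional[str] = None
    reference_image: Optional[str] = None  # path to an image file
    audio_clip: Optional[str] = None       # path to an audio file
    duration_s: float = 5.0                # assumed default, not from the article

    def modalities(self) -> list[str]:
        """Return the names of the input modalities that were supplied."""
        supplied = {
            "text": self.text_prompt,
            "image": self.reference_image,
            "audio": self.audio_clip,
        }
        return [name for name, value in supplied.items() if value]

    def validate(self) -> None:
        """Raise ValueError if the request falls outside the assumed limits."""
        # Assumption: a request needs at least one input source.
        if not self.modalities():
            raise ValueError("at least one input (text, image, or audio) is required")
        if not 0 < self.duration_s <= MAX_DURATION_S:
            raise ValueError(f"duration must be in (0, {MAX_DURATION_S}] seconds")


request = VideoRequest(
    text_prompt="a lighthouse at dusk",
    audio_clip="waves.wav",
    duration_s=8,
)
request.validate()
print(request.modalities())  # ['text', 'audio']
```

Keeping each input optional mirrors the article's point that text, images, and audio can be mixed freely; a real client would follow whatever schema the provider publishes.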

Enhanced Image Creation Tools

Beyond video production, Wan2.5-Preview delivers substantial improvements in:

- Advanced Image Generation: From photorealistic renders to diverse artistic styles and professional infographics

- Precision Editing: Dialogue-driven modifications with pixel-level accuracy for complex tasks such as:

  - Multi-concept fusion

  - Material transformation

  - Product customization (e.g., color swaps)

The model's instruction following, in particular, was refined during training.

Key Points:

- First AI model to achieve native synchronization of high-quality video and complex audio elements

- Unified architecture enables seamless switching between content modalities

- RLHF tuning steers outputs toward human aesthetic preferences

- Opens new possibilities for filmmakers, marketers, and digital artists