Robots Get a Human Touch: Groundbreaking Dataset Bridges Sensory Gap

Imagine robots that can feel fabrics as delicately as a seamstress or handle fragile dishes with the care of a seasoned waiter. That future just got closer with the release of Baihu-VTouch, a revolutionary dataset that combines visual and tactile sensing for machines.

Massive Scale Meets Precision

The numbers behind Baihu-VTouch are staggering:

- 90 million+ real-world contact samples

- 60,000 minutes of high-resolution recordings

- 120Hz refresh rate capturing subtle touches

- 640×480 resolution for capturing fine surface textures
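To put these figures in more familiar units, the short Python sketch below converts them. It rests on assumptions the article does not confirm: that the 120Hz rate applies to the whole 60,000 minutes and that a single sensor stream is counted. The article does not say how the 90 million contact samples relate to individual frames, so the frame count is only an illustrative upper bound for one stream. The function names are made up for this example.

```python
# Back-of-the-envelope conversion of the figures quoted in the article.
# Assumption (not from the article): one sensor stream recorded at a
# constant 120 Hz for the full 60,000 minutes.

RECORDED_MINUTES = 60_000
SAMPLE_RATE_HZ = 120
FRAME_WIDTH, FRAME_HEIGHT = 640, 480


def recording_hours(minutes: int) -> float:
    """Convert recording length from minutes to hours."""
    return minutes / 60


def frames_at_rate(minutes: int, rate_hz: int) -> int:
    """Frames produced by one stream sampled at rate_hz for the given minutes."""
    return minutes * 60 * rate_hz


def pixels_per_frame(width: int, height: int) -> int:
    """Number of sensing elements in one frame at the given resolution."""
    return width * height


hours = recording_hours(RECORDED_MINUTES)
frames = frames_at_rate(RECORDED_MINUTES, SAMPLE_RATE_HZ)
pixels = pixels_per_frame(FRAME_WIDTH, FRAME_HEIGHT)

print(f"{hours:,.0f} hours of recordings")
print(f"{frames:,} frames for a single 120 Hz stream (assumed)")
print(f"{pixels:,} pixels per 640x480 frame")
```

Under those assumptions, 60,000 minutes works out to 1,000 hours of recordings, and each 640×480 frame holds 307,200 sensing points.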

"This isn't just more data - it's better data," explains Dr. Lin Wei from Weitai Robotics. "We're recording not just what robots see, but how objects actually feel during interactions."

One Dataset Fits All Robots

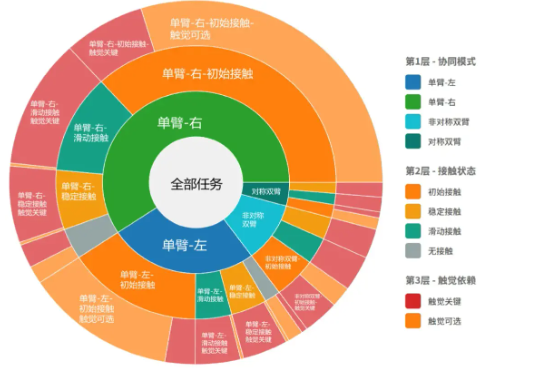

The true innovation lies in Baihu-VTouch's versatility across robotic platforms:

- Humanoid robots like the "Qinglong" model

- Wheeled robotic arms for industrial use

- Even handheld grippers used in research labs

The implication? A single AI model trained on this dataset could potentially transfer its "sense of touch" across different robot bodies, cutting development time.

Practical Applications Come to Life

The researchers didn't stop at raw data collection. They structured Baihu-VTouch around real-world scenarios:

- Home environments - handling delicate glassware or adjusting grip on slippery surfaces

- Restaurant service - sensing the perfect pressure for grasping lettuce without crushing it

- Precision manufacturing - detecting microscopic imperfections through touch alone

- Hazardous conditions - operating tools by feel in low-visibility situations

In early tests, robots using this data reportedly performed 68% better on tasks requiring delicate force control, which could mean fewer broken items in future robotic applications.

The Baihu-VTouch dataset represents more than technological achievement; it's a crucial step toward robots that can safely share our physical spaces and handle everyday objects with human-like care.

Key Points:

- First cross-platform vision+touch dataset for robotics

- Captures subtle tactile sensations at unprecedented scale

- Enables more adaptable robotic perception systems

- Direct applications in service industries and manufacturing

- Available now for global research and development