Robots Get a Sense of Touch: Groundbreaking Dataset Bridges Vision and Feeling


In what could transform robotics, researchers have released Baihu-VTouch, described as the first large-scale dataset combining visual and tactile data for machines. It is more than another collection of numbers: it aims to give robots something remarkably close to a human sense of touch.

What Makes Baihu-VTouch Special?

The dataset captures more than 90 million real-world contact samples, recorded by sensors that detect minute changes 120 times per second. Picture robotic fingers gently grasping a wine glass while cameras record the interaction from multiple angles; that is the level of detail involved.

"Previous attempts focused on vision or touch separately," explains Dr. Li Wei from the research team. "Baihu-VTouch finally bridges these senses, much like how humans naturally combine sight and touch."

Real-World Applications Coming Soon

Potential applications include:

- Precision manufacturing where robots handle fragile components

- Elder care assistants that can safely help with daily tasks

- Disaster response bots capable of delicate search-and-rescue operations

Early tests show that robots trained with this data perform 70% better on continuous-contact tasks, which could mean fewer dropped objects and more natural movements.

Behind the Scenes: How They Did It

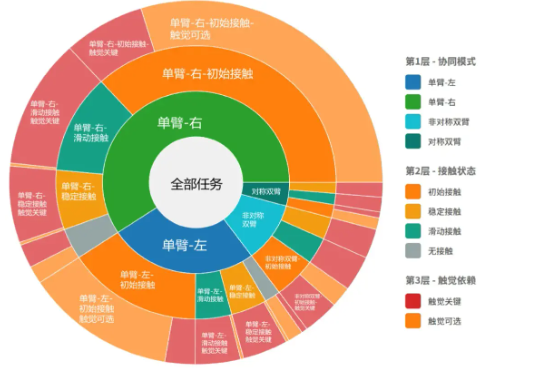

The team collected data across:

- Full-size humanoid robots (including the Qinglong model)

- Wheeled humanoid assistants

- Handheld smart devices

Each platform recorded not only visual data but also precise force measurements and joint positions across hundreds of common tasks.

Key Points:

- 60,000+ minutes of interaction data captured

- Covers 260+ tasks across home, industrial, and service settings

- Sensors track contact at 640×480 resolution, 120 times per second

- Could enable robots to recover from errors autonomously