Radical Numerics Releases Open-Source 30B-Parameter Diffusion AI Model

Radical Numerics Unveils Open-Source Diffusion AI Breakthrough

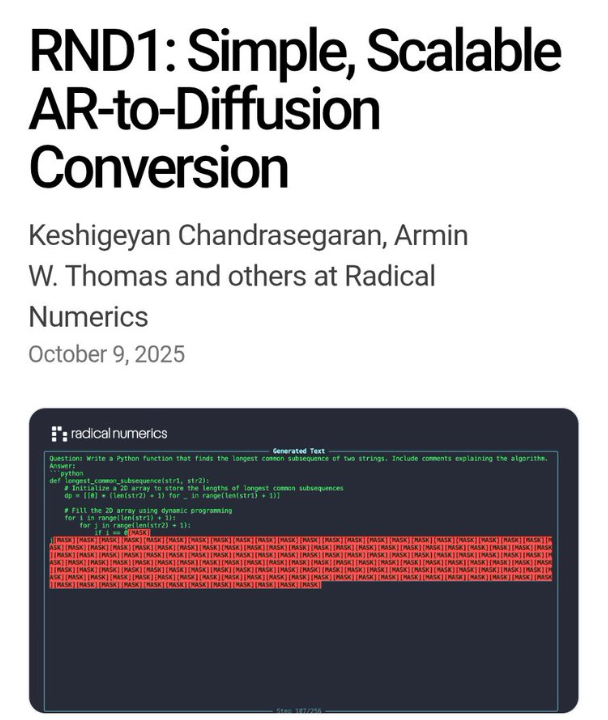

AI research firm Radical Numerics has publicly released RND1-Base, the largest open-source diffusion language model to date. The 30-billion-parameter model uses a sparse mixture-of-experts design and generates text in parallel rather than one token at a time.

Technical Specifications

The model features:

- 30B total parameters (3B active per token via a sparse mixture of experts)

- Built upon the Qwen3-30B-A3B autoregressive base model

- Trained on 500B tokens using bidirectional masking

- Trained with large batches of 8M tokens for stability
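To make the bidirectional masking objective concrete, here is a toy forward-corruption step of the kind used in generic masked-diffusion training. It is a minimal sketch, not Radical Numerics' training code: the `MASK` symbol, the `mask_tokens` helper, and the uniform sampling of the noise level are illustrative assumptions.

```python
import random

MASK = "<mask>"  # hypothetical mask symbol; real models use a reserved token id


def mask_tokens(tokens, mask_ratio, rng):
    """Independently replace each token with MASK with probability mask_ratio.

    Returns the corrupted sequence and the masked positions. In a masked
    diffusion objective, the model sees the whole corrupted sequence at once
    (bidirectional context) and is trained to recover the masked positions.
    """
    corrupted, targets = [], []
    for i, tok in enumerate(tokens):
        if rng.random() < mask_ratio:
            corrupted.append(MASK)
            targets.append(i)
        else:
            corrupted.append(tok)
    return corrupted, targets


rng = random.Random(0)
sentence = "diffusion models refine every position in parallel".split()
noise_level = rng.random()  # assumed: noise level drawn uniformly from [0, 1)
corrupted, targets = mask_tokens(sentence, noise_level, rng)
print(corrupted, targets)
```

Sampling a different noise level per example exposes the model to everything from lightly corrupted to almost fully masked text, which is what lets it later generate from an all-mask sequence.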

Performance Benchmarks

RND1-Base is reported to lead open-source diffusion language models across multiple benchmark domains:

| Benchmark | Score |
|---|---|

The model outperforms previous open-source diffusion models like Dream-7B and LLaDA-8B while maintaining computational efficiency through selective parameter activation.

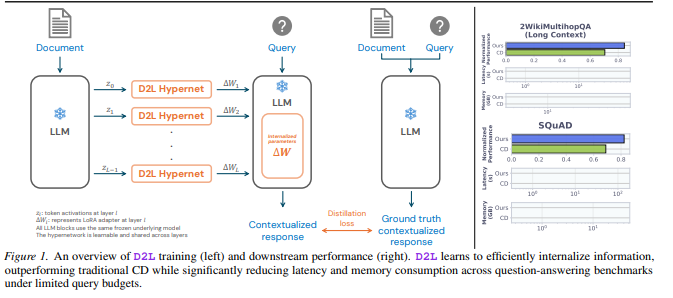

Architectural Innovations

Unlike traditional autoregressive models, RND1 treats text generation as a denoising process, enabling:

- Parallel sequence refinement

- Bidirectional attention mechanisms

- Reduced inference latency
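The properties listed above can be illustrated with a toy decoding loop. This is a sketch under stated assumptions, not RND1's sampler: `toy_predict` is a hypothetical stand-in for the denoising network, its confidence values are made up, and committing the most confident proposals first is one common unmasking strategy rather than a confirmed detail of the release.

```python
MASK = "<mask>"
# Fixed answer the toy "model" converges to; sequences must not exceed its length.
REFERENCE = ["text", "is", "generated", "by", "iterative", "denoising"]


def toy_predict(seq):
    """Hypothetical denoiser: proposes (token, confidence) for every masked
    position in one call, using context on both sides of each position."""
    proposals = {}
    for i, tok in enumerate(seq):
        if tok == MASK:
            # Fake confidence: higher when more neighbours are already filled in.
            known = sum(
                1 for j in (i - 1, i + 1) if 0 <= j < len(seq) and seq[j] != MASK
            )
            proposals[i] = (REFERENCE[i], 0.5 + 0.2 * known + 0.01 * i)
    return proposals


def denoise(length, tokens_per_step):
    """Start from an all-mask sequence and fill in several tokens per step."""
    seq = [MASK] * length
    steps = 0
    while MASK in seq:
        proposals = toy_predict(seq)
        # Commit only the most confident proposals; the rest stay masked
        # and are re-predicted on the next step with more context.
        best = sorted(proposals, key=lambda i: proposals[i][1], reverse=True)
        for i in best[:tokens_per_step]:
            seq[i] = proposals[i][0]
        steps += 1
    return seq, steps


seq, steps = denoise(length=6, tokens_per_step=2)
print(steps, " ".join(seq))
```

With two tokens committed per step, the six-token sequence needs three model calls instead of the six an autoregressive decoder would make, which is where the latency reduction comes from.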

The transition from the autoregressive to the diffusion paradigm was achieved through continual pre-training with layer-specific learning rates, which preserves the base model's existing knowledge while it learns the new denoising objective.
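Layer-specific learning rates are usually expressed as parameter groups. The sketch below shows the idea with plain Python data. The parameter names, the keyword matching, and the choice to give expert (MLP) weights a smaller rate than attention weights are all illustrative assumptions, not details confirmed by the release.

```python
def build_param_groups(param_names, base_lr, lr_scale_by_keyword, default_scale=1.0):
    """Bucket parameter names by learning rate.

    Returns a list of {"lr": ..., "params": [...]} dicts, sorted by learning
    rate. The first matching keyword in lr_scale_by_keyword decides the scale;
    parameters matching no keyword use default_scale.
    """
    groups = {}
    for name in param_names:
        scale = default_scale
        for keyword, keyword_scale in lr_scale_by_keyword.items():
            if keyword in name:
                scale = keyword_scale
                break
        groups.setdefault(base_lr * scale, []).append(name)
    return [{"lr": lr, "params": names} for lr, names in sorted(groups.items())]


# Hypothetical parameter names for a two-layer mixture-of-experts model.
names = [
    "layers.0.attn.q_proj.weight",
    "layers.0.mlp.experts.0.up_proj.weight",
    "layers.1.attn.q_proj.weight",
    "layers.1.mlp.experts.0.up_proj.weight",
]
# Assumed scales: full rate for attention, one tenth for expert weights.
groups = build_param_groups(
    names, base_lr=3e-4, lr_scale_by_keyword={"attn": 1.0, "mlp": 0.1}
)
for group in groups:
    print(group["lr"], group["params"])
```

In a real training setup the names would be replaced by the parameter tensors themselves before the groups are handed to an optimizer. A smaller rate on some layers limits how far those weights drift from their pre-trained values.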

Research Implications

The open-source release includes:

- Complete model weights

- Training methodologies

- Inference code with FlashInfer/SGLang backends

This transparency aims to accelerate community research into post-training optimization and practical applications of diffusion language models.

Future Directions

Despite the model's strong performance, challenges remain in:

- Generalization capability

- Memory optimization

Radical Numerics suggests future integration with multi-objective fine-tuning could unlock additional potential.

The team, which includes researchers from DeepMind, Meta, and Stanford, positions this as foundational work toward recursively self-improving AI systems.

Key Points:

- Largest open-source diffusion language model released (30B parameters)

- Achieves state-of-the-art results among open-source diffusion language models while enabling parallel generation

- Complete technical stack made available to research community

- Represents shift toward non-autoregressive AI architectures

- Foundation for future self-improving AI systems