OpenAI Bets Big on Cerebras' Giant Chips to Challenge NVIDIA

OpenAI and Cerebras Team Up on Revolutionary AI Hardware

In a move that could reshape the AI hardware landscape, OpenAI has announced a massive partnership with chipmaker Cerebras Systems. The companies plan to deploy 750 megawatts' worth of Cerebras' revolutionary wafer-scale systems, roughly the power demand of a small city.

Breaking Free from GPU Limitations

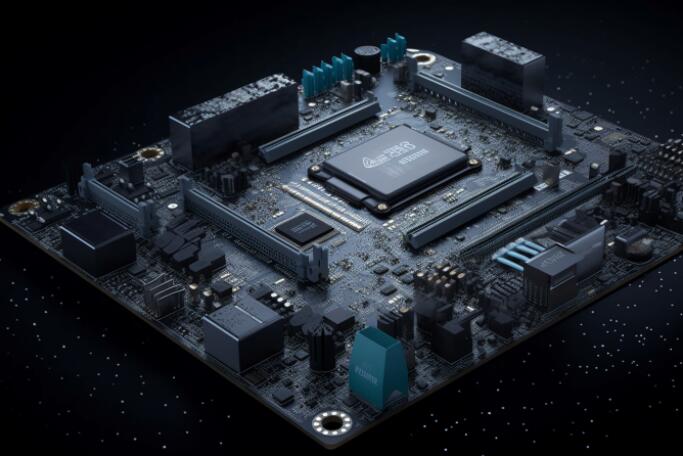

The heart of this collaboration lies in Cerebras' unconventional approach. Its chips aren't just big; they're enormous. Each contains 4 trillion transistors on a single wafer-sized die, dozens of times larger than a conventional GPU die. By integrating compute cores, memory, and the interconnect between them on one piece of silicon, these chips avoid the data-movement bottlenecks that plague systems built from many separate chips.

"We're seeing response times up to 15 times faster than GPU clusters," said an OpenAI engineer familiar with early testing. "For applications like real-time coding assistance or conversational AI, that difference isn't incremental; it's transformative."

A Partnership Years in the Making

The deal carries personal significance for OpenAI CEO Sam Altman, who was among Cerebras' earliest investors back in 2017. Internal documents show OpenAI has been quietly exploring alternatives to NVIDIA's ecosystem for years, pursuing custom chips with Broadcom and additional GPU supply from AMD before committing to this ambitious Cerebras deployment.

Andrew Feldman, Cerebras CEO, shared how quickly things moved once serious talks began: "We started negotiations last fall and had everything locked down by Thanksgiving. When you see what these systems can do for large language models, hesitation isn't really an option."

The Business Case for Speed

Sachin Katti, OpenAI's infrastructure lead, explained why inference speed has become existential: "Compute power directly translates to revenue potential these days. Our users expect instantaneous responses - anything less feels broken." Internal metrics show computing demands doubling annually alongside revenue growth.

The financial stakes are enormous. While exact figures remain confidential, industry analysts estimate the total deal value exceeds $10 billion. Meanwhile, Cerebras is reportedly seeking new funding at a $22 billion valuation, nearly triple its previous worth.

Implications Beyond Two Companies

This partnership signals broader shifts in AI infrastructure:

- Commercialization pressures are forcing efficiency breakthroughs

- The industry is diversifying beyond NVIDIA's ecosystem

- Wafer-scale integration and custom ASICs are gaining traction among tech leaders

The race is no longer just about building bigger models; it's about delivering answers before users finish asking questions.

Key Points:

- Up to 15x faster responses: Early testing reportedly shows Cerebras' wafer-scale design outpacing GPU clusters on inference tasks

- $10B+ commitment (analyst estimate): One of the largest AI infrastructure deals ever announced

- Strategic shift: Marks OpenAI's most significant move away from NVIDIA-dependent architectures

- Valuation surge: Cerebras' worth may nearly triple amid growing demand for alternative AI hardware