NVIDIA's NitroGen learns to game like humans by watching YouTube

NVIDIA Teaches AI to Master Games Just By Watching

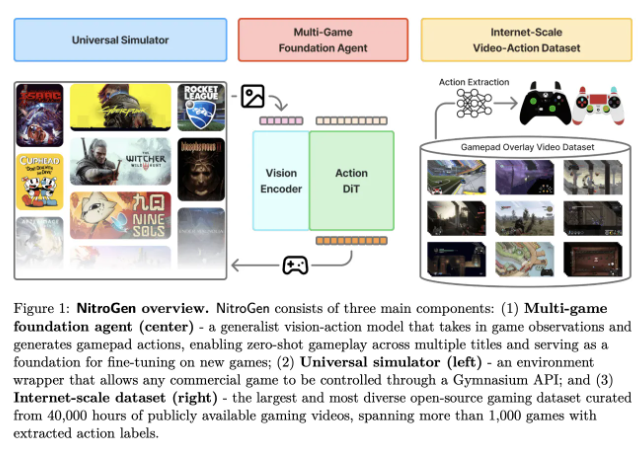

Imagine learning to play Dark Souls or Street Fighter just by watching Twitch streams. That's essentially what NVIDIA's new NitroGen AI model can do. This groundbreaking system analyzes gameplay videos that display the player's controller inputs on-screen, then uses that footage to teach itself how to play.

Learning From the Gaming Community

The research team fed NitroGen a massive diet of gaming content, initially collecting 71,000 hours of raw footage before filtering it down to 40,000 high-quality hours. These videos came from 818 different creators and covered an impressive variety of genres:

- Action RPGs (35% of total footage)

- Platformers (18%)

- Action-adventure games (9%)

- Sports, racing and roguelike titles rounding out the collection

The final dataset covers gameplay from 846 distinct titles, giving NitroGen a comprehensive gaming education.

How It Works Behind the Scenes

Before NitroGen can learn anything, the controller inputs have to be read out of the videos. This happens in three stages:

- Controller Detection: The system scans each frame for the on-screen controller overlay, using templates for common controller layouts

- Input Interpretation: A specialized segmentation model determines exactly which buttons are being pressed

- Action Refinement: The extracted coordinates for movement controls are fine-tuned for precision

The result pairs each video frame with the inputs the player was pressing at that moment. This meticulous approach lets NitroGen "watch and learn" much as human players do when studying advanced techniques.
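The Controller Detection stage can be illustrated with classic template matching. The Python sketch below slides each layout template over a grayscale frame and scores every position with normalized cross-correlation; the best-scoring layout and position win. It is a minimal sketch, not NVIDIA's pipeline: the function names, the layout names, and the choice of plain normalized cross-correlation are assumptions, and a real system would use an optimized library routine rather than nested Python loops.

```python
import numpy as np


def match_template(frame: np.ndarray, template: np.ndarray) -> tuple[tuple[int, int], float]:
    """Slide `template` over `frame` (both 2-D grayscale arrays) and return the
    top-left (row, col) with the highest normalized cross-correlation score."""
    fh, fw = frame.shape
    th, tw = template.shape
    t = template - template.mean()          # zero-mean template
    t_norm = np.sqrt((t * t).sum())
    best_score, best_pos = -np.inf, (0, 0)
    for r in range(fh - th + 1):
        for c in range(fw - tw + 1):
            p = frame[r:r + th, c:c + tw]
            p = p - p.mean()                # zero-mean patch
            denom = np.sqrt((p * p).sum()) * t_norm
            if denom == 0:
                continue                    # flat patch or template: score undefined
            score = float((p * t).sum() / denom)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score


def detect_layout(frame: np.ndarray, templates: dict[str, np.ndarray]):
    """Try every (hypothetical) controller-layout template and return
    (layout_name, position, score) for the best match, or None if no templates."""
    best = None
    for name, tmpl in templates.items():
        pos, score = match_template(frame, tmpl)
        if best is None or score > best[2]:
            best = (name, pos, score)
    return best
```

The detected region would then presumably be cropped and passed on to the Input Interpretation stage.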

Practical Applications

The implications extend beyond just impressive tech demos:

- Game Testing: Developers could automate quality assurance processes

- Accessibility Tools: Could help create adaptive controllers for players with disabilities

- Training Bots: Esports teams might use similar tech for practice opponents

- Content Creation: Streamers could generate highlight reels automatically

The system even includes a general simulator that lets it interface with commercial Windows games without modifying their code, meaning it could theoretically learn any Windows PC title.
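One way to picture such a simulator is as a thin wrapper that treats the game as a black box: it only reads pixels from the screen and writes virtual controller inputs, so the game's code never changes. The Python sketch below shows that contract with a `reset`/`step` interface. Everything here is hypothetical: `GameEnv`, `ControllerAction`, and the injected `capture_frame` and `send_input` callables are illustrative names, and the real screen-capture and virtual-gamepad plumbing is left out.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class ControllerAction:
    """Hypothetical gamepad action: two analog sticks plus the set of held buttons."""
    left_stick: tuple[float, float]   # (x, y), each in [-1, 1]
    right_stick: tuple[float, float]  # (x, y), each in [-1, 1]
    buttons: frozenset[str]           # e.g. frozenset({"A", "RB"})

    def __post_init__(self) -> None:
        # Reject stick values outside the normalized analog range.
        for value in (*self.left_stick, *self.right_stick):
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"stick value {value} outside [-1, 1]")


class GameEnv:
    """Hypothetical black-box wrapper: the game is only observed through screen
    captures and only driven through virtual controller input."""

    def __init__(self,
                 capture_frame: Callable[[], Any],
                 send_input: Callable[[ControllerAction], None]) -> None:
        self._capture_frame = capture_frame
        self._send_input = send_input

    def reset(self) -> Any:
        # Return the current screen as the first observation.
        return self._capture_frame()

    def step(self, action: ControllerAction) -> Any:
        # Forward the action to the unmodified game, then observe the result.
        self._send_input(action)
        return self._capture_frame()
```

Because capture and input are injected, the same loop can run against fake hooks in a test or against real screen-capture and virtual-gamepad code on a Windows machine.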

Performance That Speaks Volumes

The numbers tell an impressive story:

- Achieves 45-60% success rates on games it has never seen, with no additional training (zero-shot evaluation)

- When adapted to a new title, performs up to 52% better than a model trained on that title from scratch

- Processes game visuals at 256×256 resolution, balancing detail with computational efficiency
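The 52% figure is most naturally read as a relative gain over the from-scratch baseline, though the article does not say so explicitly; that interpretation is an assumption here. With hypothetical success rates of 25% from scratch and 38% after adapting the pretrained model, the absolute gain is 13 percentage points while the relative gain is 52%:

```python
def relative_improvement(scratch_rate: float, pretrained_rate: float) -> float:
    """Relative gain of an adapted pretrained model over one trained from scratch."""
    if scratch_rate <= 0:
        raise ValueError("baseline success rate must be positive")
    return (pretrained_rate - scratch_rate) / scratch_rate


# Hypothetical success rates, chosen only to illustrate the arithmetic.
scratch, pretrained = 0.25, 0.38
print(f"absolute gain: {pretrained - scratch:.2f}")                        # absolute gain: 0.13
print(f"relative gain: {relative_improvement(scratch, pretrained):.0%}")  # relative gain: 52%
```

The distinction matters because the same 52% relative gain can correspond to a small or a large absolute change, depending on how well the from-scratch model already performs.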

The model uses a Diffusion Transformer (DiT) architecture, which helps it balance visual understanding with responsive controls.
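Diffusion-style models generate an output by starting from random noise and refining it over several steps. The Python sketch below shows that sampling loop for a controller action in its simplest form, using flow matching (a close relative of diffusion) with Euler integration. It illustrates the general technique only and is not NitroGen's code: the trained transformer, which in a real model would look at the encoded game frame, is replaced by a hypothetical `oracle_velocity` function that already knows the target action, so the loop's output can be checked. The action size and step count are also assumptions.

```python
from typing import Callable

import numpy as np


def oracle_velocity(a_t: np.ndarray, t: float, target: np.ndarray) -> np.ndarray:
    # Hypothetical stand-in for the trained transformer. On the straight-line
    # path a_t = (1 - t) * noise + t * target, the exact velocity toward the
    # target is (target - a_t) / (1 - t). A real model would instead predict
    # the velocity from a_t, t, and features of the current game frame.
    return (target - a_t) / (1.0 - t)


def sample_action(velocity_fn: Callable[[np.ndarray, float], np.ndarray],
                  action_dim: int,
                  num_steps: int,
                  rng: np.random.Generator) -> np.ndarray:
    """Euler-integrate from Gaussian noise at t=0 to an action at t=1."""
    a = rng.standard_normal(action_dim)  # start from pure noise
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt  # t takes values 0, dt, ..., 1 - dt, so 1 - t stays positive
        a = a + dt * velocity_fn(a, t)
    return a


# Hypothetical 4-value action (e.g. stick x, stick y, two button signals).
target = np.array([0.3, -0.8, 1.0, 0.0])
action = sample_action(lambda a, t: oracle_velocity(a, t, target),
                       action_dim=4, num_steps=10,
                       rng=np.random.default_rng(0))
print(np.round(action, 6))
```

Fewer denoising steps make each action cheaper to produce, which is one way a model like this can trade output quality against response time.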

Key Points:

- 🎮 Learns game mechanics purely from video observation like human players do

- 📊 Trained on a massive dataset: 40k hours across 846 game titles

- ⚡ Shows remarkable adaptability: up to 52% better than training from scratch