New AI Turns Photos Into Ready-to-Simulate 3D Objects

From Flat Photos to Functional 3D Models

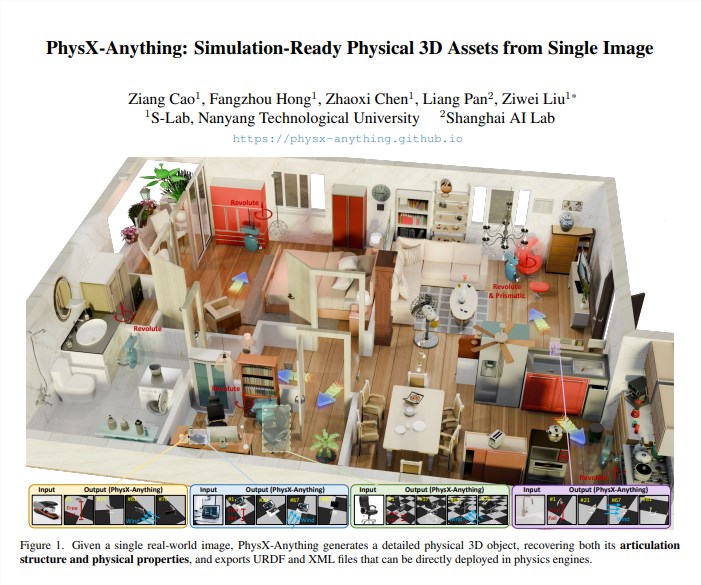

Imagine snapping a picture of a chair and getting back a digital version that behaves like the real thing, complete with proper weight distribution, moving parts, and realistic physics. That is what PhysX-Anything aims to deliver. Developed jointly by Nanyang Technological University and Shanghai Artificial Intelligence Laboratory, the system bridges the gap between computer vision and physical simulation.

How It Works

The system uses a two-step approach. First, it estimates the object's overall physical properties, such as mass distribution and surface friction. Then it zooms in to refine individual components and their ranges of motion. This helps avoid a common pitfall: models that look accurate but behave strangely in simulation.

What sets PhysX-Anything apart is its efficient encoding method. It packs all the necessary information (shape, joints, and physical properties) into a compact representation that can be reconstructed quickly at use time. This reportedly makes the process about twice as fast as current state-of-the-art methods.
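
The article does not describe the encoding itself, so the following is only a rough sketch of what bundling shape, joints, and physical properties into one description could look like. It assumes a URDF-style XML output, a format many physics simulators can read; the class names (`Part`, `Joint`, `Asset`), the `to_urdf` helper, and all the numbers are hypothetical and are not taken from PhysX-Anything.

```python
from dataclasses import dataclass, field
import math
import xml.etree.ElementTree as ET


@dataclass
class Part:
    name: str
    mass_kg: float   # physics: mass of this part
    size_m: tuple    # shape: box dimensions (x, y, z) in meters


@dataclass
class Joint:
    name: str
    parent: str      # name of the parent part
    child: str       # name of the child part
    axis: tuple      # rotation axis
    lower_deg: float # movement range, in degrees
    upper_deg: float


@dataclass
class Asset:
    name: str
    parts: list = field(default_factory=list)
    joints: list = field(default_factory=list)


def to_urdf(asset: Asset) -> str:
    """Serialize a hypothetical asset to a minimal URDF-style string.

    Simplified on purpose: inertia tensors, visuals, and joint origins
    are omitted, so a real simulator would need more detail.
    """
    robot = ET.Element("robot", name=asset.name)
    for p in asset.parts:
        link = ET.SubElement(robot, "link", name=p.name)
        inertial = ET.SubElement(link, "inertial")
        ET.SubElement(inertial, "mass", value=str(p.mass_kg))
        collision = ET.SubElement(link, "collision")
        geometry = ET.SubElement(collision, "geometry")
        ET.SubElement(geometry, "box", size=" ".join(str(v) for v in p.size_m))
    for j in asset.joints:
        joint = ET.SubElement(robot, "joint", name=j.name, type="revolute")
        ET.SubElement(joint, "parent", link=j.parent)
        ET.SubElement(joint, "child", link=j.child)
        ET.SubElement(joint, "axis", xyz=" ".join(str(v) for v in j.axis))
        # URDF expresses revolute limits in radians; effort and velocity
        # are placeholder values.
        ET.SubElement(joint, "limit",
                      lower=str(math.radians(j.lower_deg)),
                      upper=str(math.radians(j.upper_deg)),
                      effort="10", velocity="1")
    return ET.tostring(robot, encoding="unicode")


# Made-up example: a cabinet body with one hinged door.
cabinet = Asset(
    name="cabinet",
    parts=[Part("body", 12.0, (0.6, 0.4, 0.8)),
           Part("door", 2.0, (0.58, 0.02, 0.78))],
    joints=[Joint("door_hinge", "body", "door", (0, 0, 1), 0.0, 90.0)],
)
urdf = to_urdf(cabinet)
```

The point of the sketch is that a handful of named parts and joints is enough to describe an articulated object, which is why a compact description can stand in for a much heavier asset until it is expanded for simulation.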

Real-World Performance

Tests show impressive results:

- Geometry accuracy improved by 18%

- Physics errors reduced by 27%

- Scale accurate within 2 centimeters

- Joint movements precise to within 5 degrees
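
To make the last two figures concrete, here is a small sketch of how a generated asset might be checked against a ground-truth measurement using those tolerances. The article does not say how the team actually computes its metrics, so the `Measurement` type, the `within_tolerance` function, and the sample values are illustrative assumptions only.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    height_m: float         # overall object height, in meters
    joint_range_deg: float  # angular range of one joint, in degrees


def within_tolerance(pred: Measurement, truth: Measurement,
                     scale_tol_m: float = 0.02,
                     joint_tol_deg: float = 5.0) -> bool:
    """Return True if both scale and joint errors are inside the tolerances.

    Defaults mirror the figures quoted above (2 cm, 5 degrees); the error
    definitions are a simplification chosen for this sketch.
    """
    scale_err = abs(pred.height_m - truth.height_m)
    joint_err = abs(pred.joint_range_deg - truth.joint_range_deg)
    return scale_err <= scale_tol_m and joint_err <= joint_tol_deg


# Made-up numbers: a cabinet 0.80 m tall whose door opens 90 degrees.
truth = Measurement(height_m=0.80, joint_range_deg=90.0)
good = Measurement(height_m=0.815, joint_range_deg=87.0)  # 1.5 cm, 3 deg off
bad = Measurement(height_m=0.85, joint_range_deg=87.0)    # 5 cm off

ok_good = within_tolerance(good, truth)
ok_bad = within_tolerance(bad, truth)
```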

The practical benefits are even more striking. When the generated assets were used in robot training simulations:

- Grasping success rates jumped by 12%

- Required training time dropped by nearly a third

The team validated these improvements on everyday objects, ranging from IKEA furniture to kitchen utensils.

Open Access Future

The researchers have made everything publicly available, including code, trained models, and datasets, in the hope of accelerating development in this space. They are already working on version 2.0, which will accept video input to capture how objects move over time.

For roboticists and game developers alike, this technology could dramatically simplify the creation of digital twins of real-world objects. Instead of modeling every physical property by hand, they might soon just snap pictures and get working simulations.

The implications extend beyond robotics, too: architects could test furniture arrangements, and product designers could prototype new ideas using photos of existing items as starting points.

Key Points:

- Converts single images into simulation-ready 3D assets

- Preserves both visual appearance and physical behavior

- Open-source framework available now on GitHub

- A version supporting video input is expected early next year