MiniMax Opens Floodgates with M2.5 Release: AI Just Got Cheaper

MiniMax Levels the AI Playing Field

The tech world buzzed today as MiniMax unveiled its M2.5 model, the third release in its rapidly evolving M2 series. What makes this release special? It's not just another incremental update: it brings enterprise-grade AI within reach of everyday developers and businesses.

Performance That Turns Heads

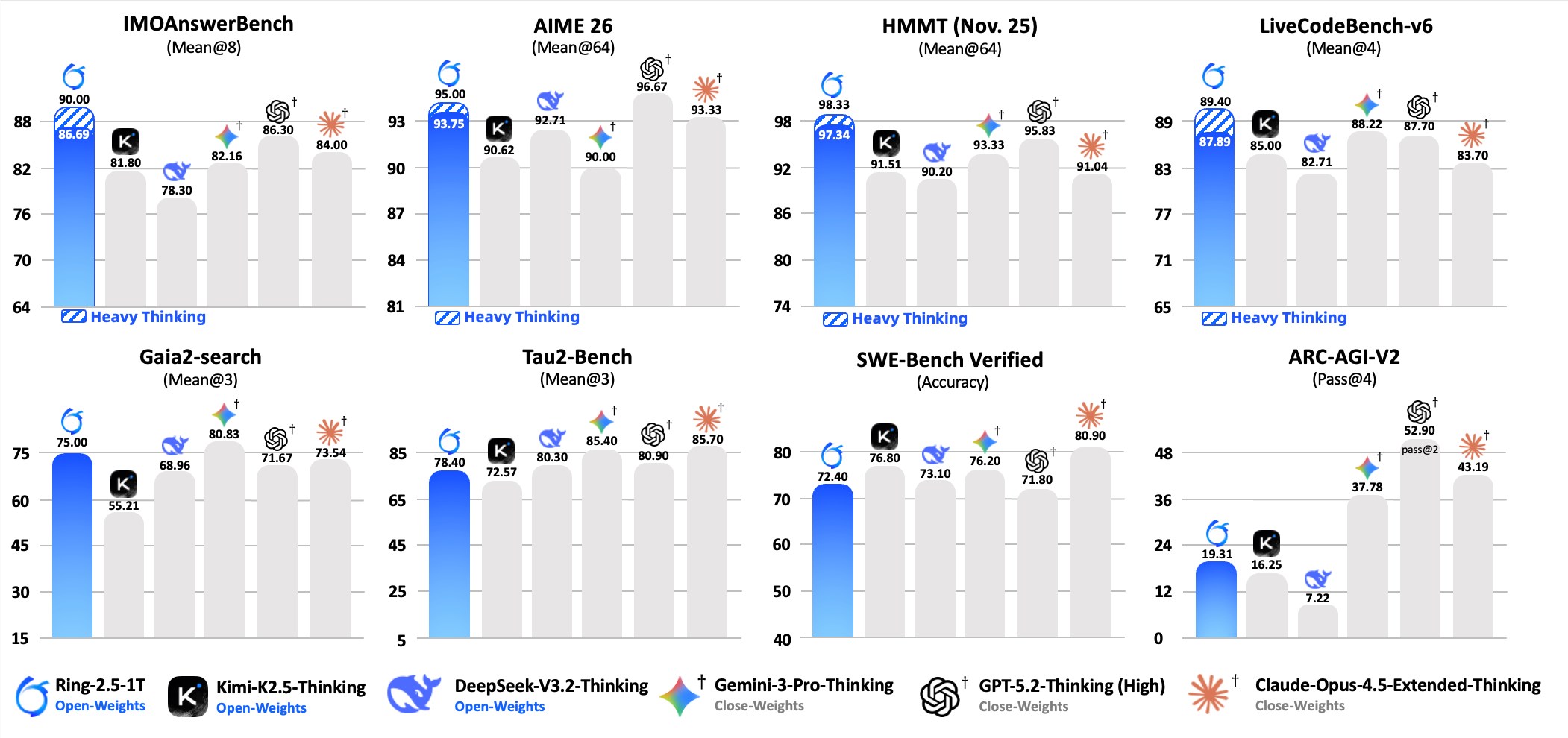

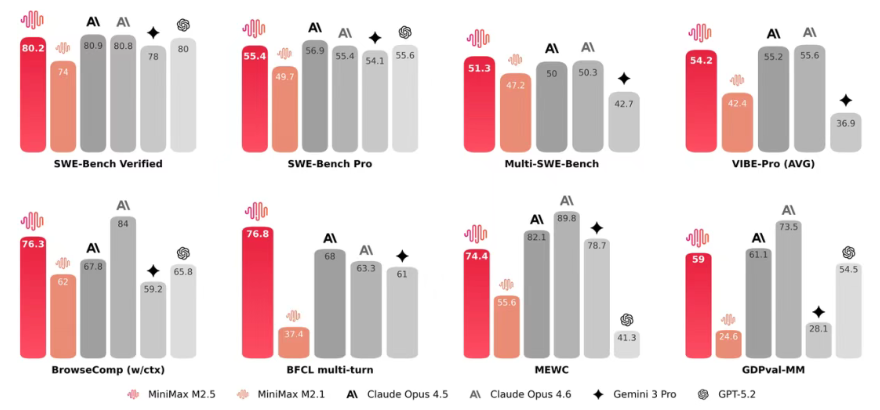

Benchmark results tell an impressive story. M2.5 scores 80.2% on SWE-Bench Verified, outperforming GPT-5.2 and breathing down Claude Opus 4.5's neck. On multilingual coding tasks it leads the pack with a 51.3% Multi-SWE-Bench score, proving you don't need to break the bank for top-tier programming assistance.

Where this model really shines is in practical applications:

- Programming: Handles everything from architectural planning to cross-platform development

- Search: Cuts query rounds by 20%, ideal for complex research tasks

- Office Work: Integrates specialized knowledge across finance, law and more

The kicker? It delivers these results at just one-tenth the cost of Claude Opus 4.6 while matching its speed.

Behind the Breakneck Development Pace

How did MiniMax achieve three major releases in just 108 days? Three technological leaps made it possible:

- Their proprietary Forge framework accelerates training by 40x

- The CISPO algorithm solves stubborn context allocation problems

- Innovative reward balancing keeps responses both quick and accurate

The result? About 30% of MiniMax's daily tasks now run on M2.5, including most new code submissions.

Deployment Made Simple

Whether you're a coding novice or enterprise architect, M2.5 fits your workflow:

- Plug-and-play web interface with thousands of pre-built "Expert" agents

- Developer-friendly APIs (including free options on ModelScope)

- Full local deployment, supporting everything from Mac development to high-volume production

The Lightning API version stands out in particular, offering Claude-level performance at beer-budget prices.

Tool Integration Without Headaches

Developers will appreciate how M2.5 handles tool calls:

- Native support for parallel tool execution

- Works seamlessly with OpenAI SDK formats when using vLLM/SGLang

- Clear documentation guides you through XML parsing for other frameworks

The team even provides recommended parameter settings tailored to different use cases (temperature=1.0 fans, take note).
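To make the OpenAI-format tool calling described above more concrete, here is a minimal, standard-library-only Python sketch. It assumes a vLLM or SGLang server exposing the OpenAI-compatible `/v1/chat/completions` endpoint; the base URL, the model name `MiniMax-M2.5`, and the `get_weather`/`get_time` tools are hypothetical placeholders, not MiniMax's documented values. The sketch builds a request payload with `temperature=1.0` and then executes every tool call from a single assistant turn in parallel. It does not send the request itself.

```python
import json
from concurrent.futures import ThreadPoolExecutor

# Hypothetical local vLLM/SGLang server exposing the OpenAI-compatible API.
BASE_URL = "http://localhost:8000/v1"
# Hypothetical model identifier; use whatever name your server registers.
MODEL = "MiniMax-M2.5"


def _city_tool(name: str, description: str) -> dict:
    """Describe a one-argument tool in the OpenAI "function" tool schema."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }


# Illustrative tools only; they are not part of any MiniMax API.
TOOLS = [
    _city_tool("get_weather", "Look up the current weather for a city."),
    _city_tool("get_time", "Look up the local time for a city."),
]


def build_request(user_prompt: str, temperature: float = 1.0) -> dict:
    """Build an OpenAI-format payload for POST {BASE_URL}/chat/completions."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": TOOLS,
        "tool_choice": "auto",
        "temperature": temperature,
    }


# Stub implementations standing in for real tools.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


def get_time(city: str) -> str:
    return f"12:00 in {city}"


REGISTRY = {"get_weather": get_weather, "get_time": get_time}


def run_tool_calls(assistant_message: dict) -> list:
    """Run all tool calls from one assistant turn concurrently and return
    the "tool" role messages to append to the conversation."""
    calls = assistant_message.get("tool_calls") or []

    def run_one(call: dict) -> dict:
        fn = REGISTRY[call["function"]["name"]]
        # OpenAI-format tool calls carry their arguments as a JSON string.
        args = json.loads(call["function"]["arguments"])
        return {"role": "tool", "tool_call_id": call["id"], "content": fn(**args)}

    with ThreadPoolExecutor() as pool:
        # map() yields results in input order, so replies line up with calls.
        return list(pool.map(run_one, calls))


if __name__ == "__main__":
    print(json.dumps(build_request("Weather and time in Paris?"), indent=2))
    # A hand-written assistant turn containing two parallel tool calls.
    reply = {
        "role": "assistant",
        "tool_calls": [
            {"id": "call_1", "type": "function",
             "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
            {"id": "call_2", "type": "function",
             "function": {"name": "get_time", "arguments": '{"city": "Paris"}'}},
        ],
    }
    for message in run_tool_calls(reply):
        print(message)
```

With the official OpenAI Python SDK, the same payload could be sent as `client.chat.completions.create(**build_request(...))` after pointing the client's `base_url` at your server. A thread pool is used because real tools are usually I/O-bound (HTTP lookups, database queries), so running them concurrently shortens each agent step.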

Key Points:

- Cost Revolution: Professional AI at consumer prices (one-tenth the cost of Claude Opus 4.6)

- Speed Demon: Matches Claude Opus 4.6's response times

- Flexible Friend: Three deployment options suit any technical level

- Polyglot Programmer: Leads in multilingual coding benchmarks

- Real-World Ready: Specialized solutions for tools and parameter tuning