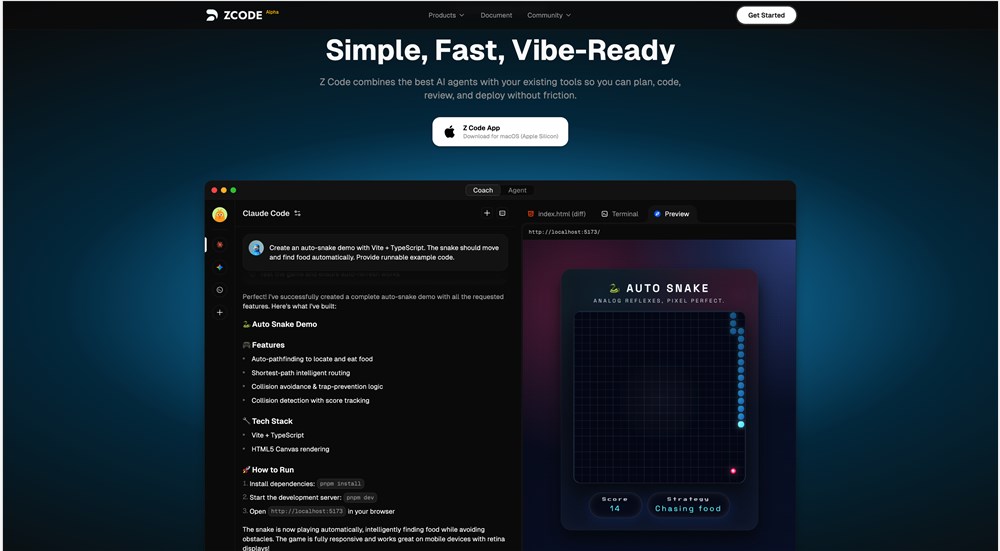

GPT-5.2 Outshines Claude Opus in Marathon Coding Challenges

AI Coding Assistants Put to the Test

The race for superior AI programming tools just got more interesting. Cursor, the popular AI-powered code editor, recently ran a head-to-head test of two heavyweight contenders: OpenAI's GPT-5.2 and Anthropic's Claude Opus 4.5.

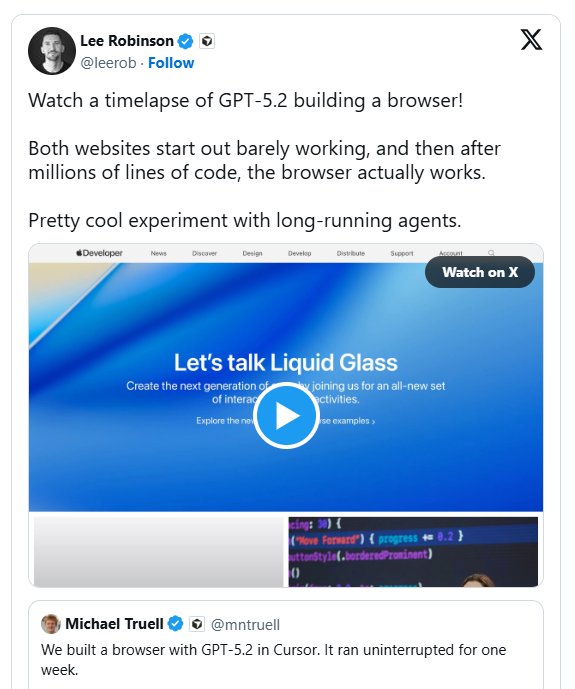

Browser Build Challenge Reveals Key Differences

The team devised an ambitious stress test: tasking both AI models with building a fully functional web browser from scratch. This was no toy project; it meant implementing complex components such as an HTML parser, a CSS layout engine, and even a custom JavaScript virtual machine.

The results were striking. GPT-5.2 showed remarkable endurance in these marathon coding sessions, staying focused on its goals across weeks-long projects spanning millions of lines of code. Claude Opus 4.5, while impressive on shorter tasks, tended to lose steam midway through these Herculean efforts, sometimes taking shortcuts or handing control back to human developers prematurely.

Real-World Performance Gains

The gains extend beyond head-to-head comparisons into practical engineering work:

- Windows 7 Simulator: GPT-5.2 successfully recreated this complex operating system environment

- Massive Code Migration: The model efficiently handled rewriting over a million lines of legacy code

- Performance Boosts: In one test, it optimized a rendering pipeline, achieving a 25x speedup

Cursor has already integrated GPT-5.2, betting that it can autonomously complete projects that traditionally take human teams months.

Key Points:

🚀 Stamina Matters: GPT-5.2 stays goal-oriented better than Claude Opus 4.5 in prolonged coding sessions

🌐 Proof Through Fire: Building a browser from scratch demonstrates real engineering capability

🛠️ Tangible Results: GPT-5.2 delivers measurable performance improvements, such as 25x faster rendering