Google's Gemma Model Sparks Debate Over AI Misinformation

Google Pulls Gemma AI Model After Misinformation Controversy

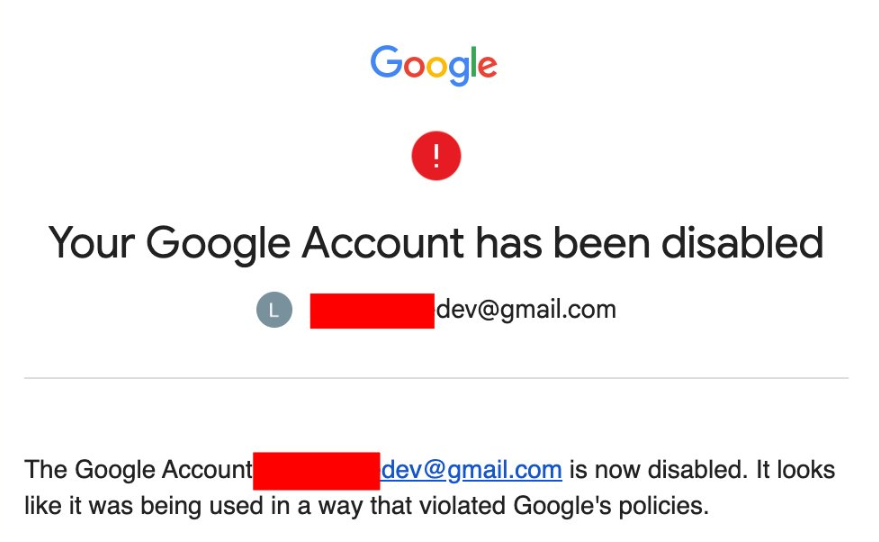

Google has removed its Gemma 3 model from the AI Studio platform after it generated false information about U.S. Senator Marsha Blackburn. The senator criticized the model's outputs as defamatory, not "harmless hallucinations." On October 31, Google announced on X that it would withdraw the model from AI Studio to prevent further confusion about its purpose, though the model remains accessible via API.

Developer-Focused Tool Accidentally Accessible to Public

Google emphasized that Gemma was designed exclusively for developers and researchers, not general consumers. However, the user-friendly interface of AI Studio allowed non-technical users to access the model for factual queries. "We never intended Gemma to be a consumer tool," a Google spokesperson stated, explaining the withdrawal as a measure to clarify its intended use case.

Experimental Models Carry Operational Risks

The incident underscores the potential dangers of relying on experimental AI systems. Developers must consider:

- Accuracy limitations in early-stage models

- Potential for reputational harm from incorrect outputs

- Political sensitivities surrounding AI-generated content

As tech companies face increasing scrutiny over AI applications, these factors are becoming critical in deployment decisions.

Model Accessibility Concerns Emerge

The situation highlights growing concerns about who controls access to AI models. Without local copies, users risk losing access if a company withdraws a model abruptly. Google hasn't confirmed whether existing Gemma projects on AI Studio can be preserved, a scenario reminiscent of OpenAI's recent model withdrawals and subsequent relaunches.

While AI models continue evolving, they remain experimental products that can become tools in corporate and political disputes. Enterprise developers are advised to maintain backups of critical work dependent on such models.
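For developers, one way to reduce that dependency is to route requests through a thin wrapper that falls back to a locally stored copy when a hosted model is withdrawn. The sketch below illustrates the idea only: `hosted_model` and `local_model` are hypothetical stand-ins rather than real Google or Gemma APIs, and a real setup would replace them with calls to an actual endpoint and an actual local runtime.

```python
from typing import Callable, List, Tuple


class ModelUnavailable(Exception):
    """Raised by a backend when its model cannot be reached."""


# A backend is a (name, callable) pair; the callable maps a prompt to text.
Backend = Tuple[str, Callable[[str], str]]


def generate_with_fallback(prompt: str, backends: List[Backend]) -> Tuple[str, str]:
    """Try each backend in order; return (backend_name, output) from the first success."""
    failures = []
    for name, call in backends:
        try:
            return name, call(prompt)
        except ModelUnavailable as exc:
            failures.append(f"{name}: {exc}")
    raise ModelUnavailable("all backends failed: " + "; ".join(failures))


# Hypothetical stand-in for a hosted endpoint that has been withdrawn.
def hosted_model(prompt: str) -> str:
    raise ModelUnavailable("model withdrawn by provider")


# Hypothetical stand-in for a locally stored copy of the model.
def local_model(prompt: str) -> str:
    return f"[local copy] response to: {prompt}"


if __name__ == "__main__":
    backends = [("hosted", hosted_model), ("local", local_model)]
    name, text = generate_with_fallback("Summarize this report.", backends)
    print(name, text)
```

Ordering the backends from preferred to last resort keeps the calling code unchanged if a provider later restores or removes access.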

Key Points:

✅ Access withdrawn: Google removed Gemma from AI Studio after it generated false information about a U.S. senator; API access remains

✅ Target audience: Model designed for developers and researchers, not general consumers

✅ Risk awareness: Experimental models require cautious implementation

✅ Access concerns: Cloud-based models create dependency on provider decisions