Google's A2UI Standard Turns AI Into Instant Interface Designers


Google has released A2UI (Agent-to-User Interface), an open standard that lets AI agents answer with graphical interfaces instead of only text. Rather than scrolling through endless text bubbles, you can get buttons, forms, and pickers that the assistant generates on the fly.

Text Conversations Get a Visual Upgrade

Released under the Apache 2.0 license, A2UI targets a familiar problem: completing multi-step tasks through clunky text chats. Picture booking a dinner reservation by typing back and forth about dates, times, and party size. With A2UI, the assistant can instead generate a form with a date picker and time slots. You tap what works and you're done.

Customer service representatives can create booking forms instantly instead of typing responses | Image: Google


The core idea is what Google calls "context-aware interfaces": UIs that change as the conversation progresses. If you need to adjust your reservation, the interface updates to match instead of starting over.
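To picture how an interface might "reshape itself," here is a minimal Python sketch. It assumes a made-up message format where every component has an `id` and the agent sends only the components that changed. The field names (`components`, `id`, `type`, `options`) and the `apply_update` helper are hypothetical and are not taken from the A2UI specification.

```python
import json

# Hypothetical message format for illustration only; NOT the actual A2UI schema.
form = json.loads("""
{"components": [
  {"id": "date", "type": "date_picker", "label": "Date"},
  {"id": "time", "type": "choice", "label": "Time", "options": ["18:00", "19:00", "20:00"]},
  {"id": "party", "type": "number", "label": "Party size", "value": 2}
]}
""")


def apply_update(tree, update):
    """Merge changed fields into components matched by id; append unknown ids as new components."""
    by_id = {c["id"]: c for c in tree["components"]}
    for change in update["components"]:
        if change["id"] in by_id:
            by_id[change["id"]].update(change)  # keep existing fields, overwrite changed ones
        else:
            new_component = dict(change)
            tree["components"].append(new_component)
            by_id[new_component["id"]] = new_component
    return tree


# The user asks for a later slot, so the agent sends only what changed.
update = {"components": [
    {"id": "time", "options": ["21:00", "21:30"]},
    {"id": "notes", "type": "text_field", "label": "Special requests"},
]}
apply_update(form, update)
print([c["id"] for c in form["components"]])  # ['date', 'time', 'party', 'notes']
```

Sending small, id-keyed changes lets the form evolve without rebuilding it from scratch. This sketch does not claim that A2UI uses this exact update mechanism.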

Security Meets Flexibility

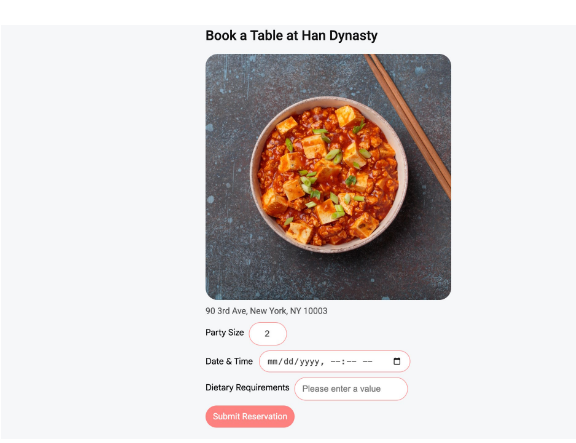

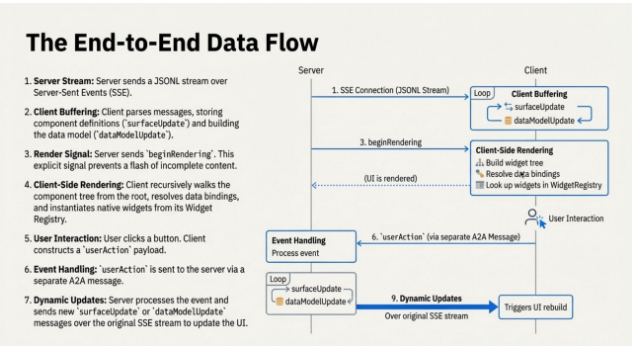

Here's the clever part: instead of sending executable code (a potential security nightmare), A2UI sends structured data that describes which UI components to show. The app on your device then renders those components with its own native UI elements. This keeps things secure and lets each app keep its distinctive look and feel.
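The data-not-code approach can be illustrated with a short Python sketch. The client parses the agent's message as JSON and looks up each component type in its own catalog of renderers. Anything outside the catalog is rejected, and text is escaped so it cannot inject markup. The message shape, the component names, and the `render` function are hypothetical, and HTML strings stand in for native widgets.

```python
import html
import json


def render_text(c):
    return f"<p>{html.escape(c['text'])}</p>"


def render_button(c):
    # The button carries an action *name*, not code; the host app decides what it does.
    action = html.escape(c["action"])
    label = html.escape(c["label"])
    return f'<button data-action="{action}">{label}</button>'


# Hypothetical client-side catalog: only these component types can ever be shown.
CATALOG = {"text": render_text, "button": render_button}


def render(payload):
    message = json.loads(payload)  # parsed as data; nothing in it is executed
    widgets = []
    for comp in message["components"]:
        renderer = CATALOG.get(comp.get("type"))
        if renderer is None:
            raise ValueError(f"unsupported component type: {comp.get('type')!r}")
        widgets.append(renderer(comp))
    return widgets


payload = json.dumps({"components": [
    {"type": "text", "text": "Table for 2 at 19:00?"},
    {"type": "button", "label": "Confirm", "action": "confirm_booking"},
]})
print(render(payload))
```

The design choice here is an allowlist. Because the client controls the catalog, the agent can only combine components the app already trusts and knows how to draw.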

The standard is also designed to be platform-agnostic. Because the client handles rendering, the same AI-generated buttons and forms can be displayed on desktop, mobile, or web, each using that platform's own components.

JSON data transforms into native UI elements locally | Image: Google
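The platform-agnostic point can be sketched in Python with the same kind of hypothetical message. One agent message goes to two different client renderers: a web one that emits HTML and a terminal one that emits plain text. The `WEB` and `TERMINAL` catalogs are made-up stand-ins for platform-native component libraries, not part of A2UI.

```python
import html

# One hypothetical agent message (not the real A2UI schema).
message = {"components": [
    {"type": "heading", "text": "Book a table"},
    {"type": "choice", "label": "Time", "options": ["18:00", "19:00"]},
]}


def web_choice(c):
    options = "".join(f"<option>{html.escape(o)}</option>" for o in c["options"])
    return f'<select aria-label="{html.escape(c["label"])}">{options}</select>'


def terminal_choice(c):
    numbered = " / ".join(f"[{i}] {o}" for i, o in enumerate(c["options"], start=1))
    return f"{c['label']}: {numbered}"


# Each platform maps the same component types to its own presentation.
WEB = {"heading": lambda c: f"<h2>{html.escape(c['text'])}</h2>", "choice": web_choice}
TERMINAL = {"heading": lambda c: c["text"].upper(), "choice": terminal_choice}


def render(message, catalog):
    # Same data in, platform-specific output out.
    return "\n".join(catalog[c["type"]](c) for c in message["components"])


print(render(message, WEB))
print(render(message, TERMINAL))  # BOOK A TABLE / Time: [1] 18:00 / [2] 19:00 (on two lines)
```

The agent never needs to know which platform it is talking to. Each client decides how a "choice" or a "heading" should look.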


More Than Just Theory

This isn't just a concept: the standard already has support from multiple industry partners. While chatbots like ChatGPT still mostly answer in text, A2UI-equipped assistants can respond with interface layouts, much as a designer would sketch a screen.

The implications could be significant. Interacting with AI may soon mean tapping through intuitive interfaces rather than deciphering walls of text. Forms that build themselves as you talk? Charts that update as you ask follow-up questions? That future just got closer.

As an open standard under Apache 2.0, any developer can jump in and start experimenting with these capabilities today.

Key Points:

- Visual conversations: A2UI lets AI generate interactive interfaces instead of just text responses

- Real-world ready: Already implemented with multiple partners across industries

- Secure by design: Transfers data rather than executable code

- Platform agnostic: Designed to render natively across web, mobile, and desktop environments

- Open future: Anyone can use or improve the standard thanks to Apache 2.0 licensing