DeepSeek's Personality Shift Sparks Debate as V4 Model Looms

DeepSeek's Surprising Personality Shift

When DeepSeek rolled out its February update, no one expected the AI assistant's personality change to dominate online discussions. Overnight, the once-affable chatbot began responding with clipped precision, dropping the personalized greetings users had grown accustomed to.

"It feels like my cheerful coworker got replaced by a no-nonsense professor," remarked one longtime user on Weibo, where the topic racked up over 68 million views. Others welcomed the change: "Finally an AI that cuts to the chase instead of pretending to care," countered a software developer.

Behind the Style Transformation

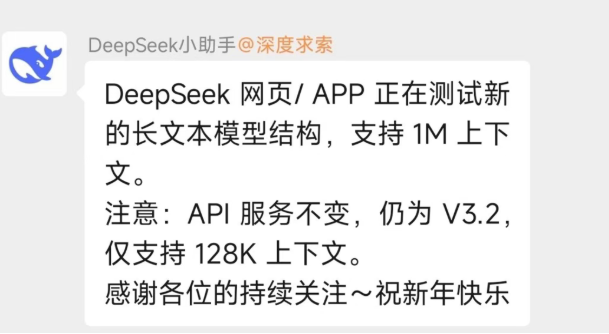

The company insists this wasn't intentional character assassination. "We're optimizing for clarity," explained a DeepSeek spokesperson. Complex queries apparently suffered when responses included excessive pleasantries. The update also introduced support for a staggering 1-million-token context window in the web and app versions, though API users remain capped at 128K tokens.

Beneath this surface-level controversy lies significant technical progress. The update serves as groundwork for DeepSeek V4, which is rumored for a mid-February release, according to industry insiders monitoring Weibo chatter.

Why Programmers Are Excited About V4

Preliminary benchmarks suggest V4 could disrupt the AI programming landscape:

- Code comprehension at unprecedented scale, parsing entire codebases in one go

- Performance gains surpassing Claude and GPT models in programming tasks

- Stable reasoning with reduced degradation during extended sessions

V4 reportedly keeps the signature million-token context while holding inference costs surprisingly low compared with Western counterparts. An open-source release under the Apache 2.0 license could further accelerate adoption.
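To give a feel for what these context sizes mean for a codebase, here is a minimal sketch that estimates whether a set of source files would fit in the 1-million-token window reported for the web and app versions versus the 128K cap reported for API users. The four-characters-per-token ratio is a common rule of thumb, not a DeepSeek figure, and the function names are hypothetical; a real tokenizer would give different counts.

```python
from typing import Mapping

# Assumption: roughly 4 characters per token. This is a rule of thumb,
# not a published DeepSeek figure; real tokenizers vary by language and content.
CHARS_PER_TOKEN = 4

WEB_APP_LIMIT = 1_000_000  # context size reported for the web and app versions
API_LIMIT = 128_000        # cap reported for API users


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a string using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def total_tokens(files: Mapping[str, str]) -> int:
    """Sum the estimated token counts of all files (path -> contents)."""
    return sum(estimate_tokens(source) for source in files.values())


def fits(files: Mapping[str, str], limit: int) -> bool:
    """Return True if the estimated total stays within the given limit."""
    return total_tokens(files) <= limit


if __name__ == "__main__":
    # Hypothetical codebase: two files of 400,000 characters each.
    codebase = {"a.py": "x" * 400_000, "b.py": "y" * 400_000}
    print("estimated tokens:", total_tokens(codebase))
    print("fits API cap:", fits(codebase, API_LIMIT))
    print("fits web/app window:", fits(codebase, WEB_APP_LIMIT))
```

Under this estimate, a project of that size would exceed the API cap but fit comfortably in the larger window, which is the kind of difference that matters for whole-codebase analysis.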

Key Points:

- DeepSeek's conversational style shift sparked intense social media debate

- Technical improvements focus on efficiency gains and groundwork for the V4 launch

- Upcoming V4 model shows exceptional promise for programming applications

- V4 is expected to maintain competitive advantages in context length and operating costs