DeepSeek Finds Smarter AI Doesn't Need Bigger Brains

AI Gets Smarter Without Growing Bigger

In a finding that could reshape how we build artificial intelligence, DeepSeek researchers report that smarter AI doesn't necessarily require bigger models. Their study shows that targeted architectural changes can outperform brute-force parameter increases.

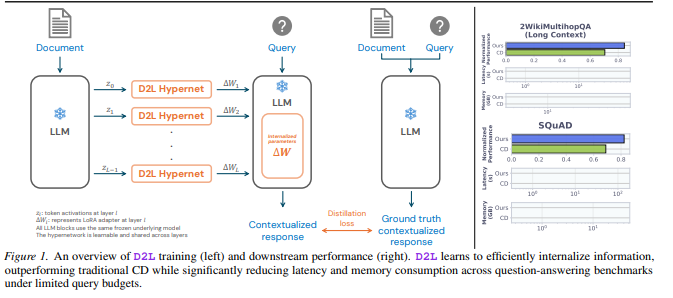

Rethinking How AI Learns

The team focused on solving fundamental issues plaguing large language models. "We noticed traditional architectures struggle with unstable signal propagation," explains lead researcher Dr. Li Wei. "It's like trying to have a coherent conversation while standing in a wind tunnel - the message gets distorted."

Their solution? Introducing carefully designed "constraint" mechanisms that stabilize information flow while maintaining flexibility. Imagine giving AI both better highways and traffic control systems rather than just adding more lanes.
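To make the idea of stabilizing information flow concrete, here is a toy sketch in Python. It is not DeepSeek's method: the article does not say which constraint the team used, so this example assumes one possible choice, forcing each layer's mixing matrix to be (approximately) doubly stochastic through alternating row and column normalization, often called Sinkhorn normalization. Every name in it (`sinkhorn`, `propagate`, the four-value `signal`) is hypothetical and chosen only for illustration.

```python
import random

def random_positive_matrix(n, rng):
    # Hypothetical stand-in for a learned mixing matrix: strictly positive entries.
    return [[rng.uniform(0.5, 1.5) for _ in range(n)] for _ in range(n)]

def sinkhorn(matrix, iterations=50):
    # Assumed constraint: alternately rescale rows and columns so that
    # every row and every column sums to (approximately) 1.
    m = [row[:] for row in matrix]
    n = len(m)
    for _ in range(iterations):
        for row in m:
            total = sum(row)
            for j in range(n):
                row[j] /= total
        for j in range(n):
            total = sum(m[i][j] for i in range(n))
            for i in range(n):
                m[i][j] /= total
    return m

def mix(matrix, signal):
    # One "layer": each output is a weighted sum of the incoming values.
    return [sum(w * x for w, x in zip(row, signal)) for row in matrix]

def propagate(signal, depth, constrained, seed=0):
    # Push the signal through `depth` random mixing layers,
    # optionally applying the constraint to each layer first.
    rng = random.Random(seed)
    n = len(signal)
    for _ in range(depth):
        m = random_positive_matrix(n, rng)
        if constrained:
            m = sinkhorn(m)
        signal = mix(m, signal)
    return signal

signal = [1.0, -0.5, 2.0, 0.25]
free = propagate(signal, depth=32, constrained=False)
bounded = propagate(signal, depth=32, constrained=True)

print("unconstrained peak magnitude:", max(abs(x) for x in free))
print("constrained peak magnitude:  ", max(abs(x) for x in bounded))
```

In this toy setup the unconstrained signal grows by orders of magnitude as it passes through the stack, while the constrained one stays within its original range, because each constrained layer only averages the incoming values. That is the "traffic control" half of the analogy; how the real architecture achieves it may differ.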

Measurable Improvements Across the Board

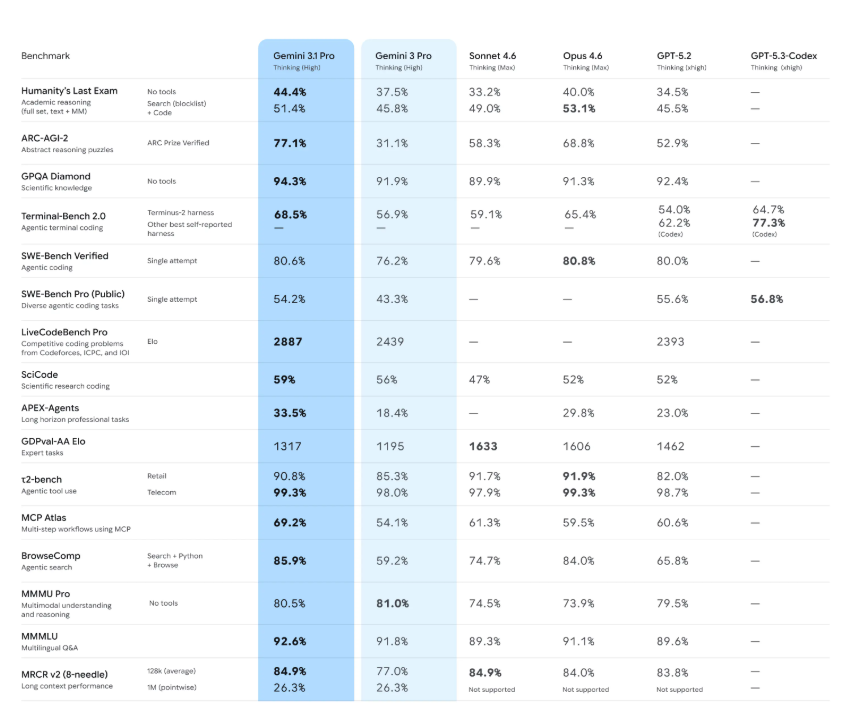

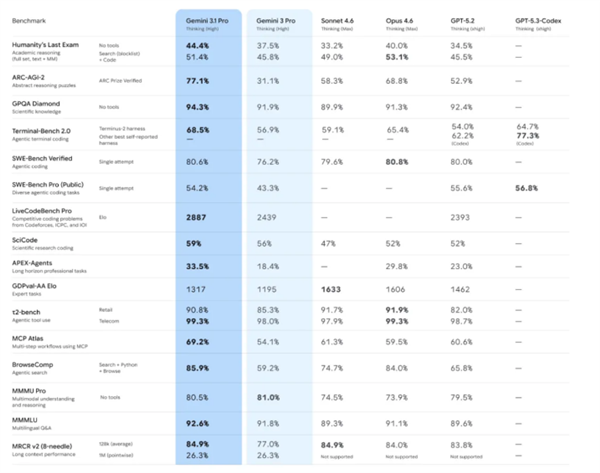

The results speak volumes:

- 7.2% boost in complex reasoning (BIG-Bench Hard)

- Notable gains in mathematical problem-solving (GSM8K)

- Improved scores on DROP, a reading-comprehension benchmark that requires discrete reasoning

What makes these numbers remarkable? They came with just 6-7% additional training cost - pocket change compared to traditional scaling approaches.

Challenging Industry Assumptions

For years, the AI field operated on a simple premise: more parameters mean smarter systems. DeepSeek's work suggests there's another way. "We're showing you can teach an old dog new tricks," jokes Dr. Li, "or rather, teach existing architectures to perform much better."

The implications are significant for companies struggling with ballooning AI development costs. This approach offers a path to better performance without requiring exponentially more computing power.

What This Means Going Forward

The research suggests we may be entering an era of "smarter scaling" where architectural innovation complements traditional model growth. As companies face practical limits on how big models can get, solutions like DeepSeek's are likely to become increasingly valuable.

Key Points:

- 🧠 Quality Over Quantity: Architectural refinements can outperform simple parameter increases

- 📊 Measurable Gains: Clear improvements across reasoning, math and logic tests

- 💰 Cost-Effective: Performance gains for just 6-7% additional training cost