ByteDance's InfinityStar Cuts Video Creation Time Dramatically

ByteDance's Game-Changing Video Tech

Imagine creating professional-quality videos faster than brewing your morning coffee. That's what ByteDance promises with its new InfinityStar framework, which reduces generation time for a 5-second HD video to under a minute.

Rethinking Video from the Ground Up

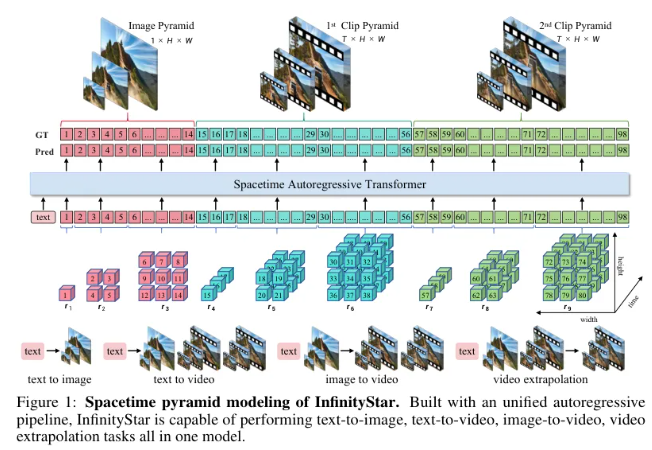

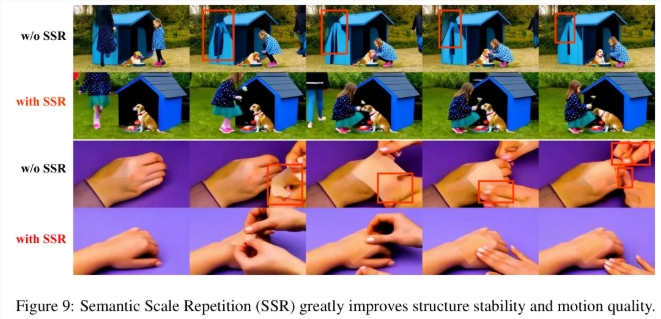

The secret lies in InfinityStar's novel approach. While traditional systems treat videos as monolithic blocks, ByteDance's engineers built a spatiotemporal pyramid model that separates a scene's static appearance from its motion. Think of it like analyzing a dance performance by studying the costumes and the choreography separately rather than all at once.
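To make the idea of separating what a clip looks like from how it moves a little more concrete, here is a toy sketch in plain Python. It is an illustration under stated assumptions, not InfinityStar's actual architecture, which this article does not detail: it treats the first frame as the "appearance," stores every later frame as a difference from it (the "motion"), and builds a simple coarse-to-fine spatial pyramid by averaging 2x2 pixel blocks. All function names here are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[float]]  # grayscale frame: rows of pixel intensities
Video = List[Frame]        # sequence of frames over time


def downsample(frame: Frame) -> Frame:
    """Halve spatial resolution by averaging 2x2 blocks (assumes even sizes)."""
    h, w = len(frame) // 2, len(frame[0]) // 2
    return [
        [
            (frame[2 * i][2 * j] + frame[2 * i][2 * j + 1]
             + frame[2 * i + 1][2 * j] + frame[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(w)
        ]
        for i in range(h)
    ]


def spatial_pyramid(frame: Frame, levels: int) -> List[Frame]:
    """Return the frame at `levels` scales, ordered coarsest to finest."""
    scales = [frame]
    for _ in range(levels - 1):
        scales.append(downsample(scales[-1]))
    return scales[::-1]


def split_appearance_motion(video: Video) -> Tuple[Frame, List[Frame]]:
    """Toy decomposition: first frame = appearance, per-frame deltas = motion."""
    appearance = video[0]
    motion = [
        [[p - a for p, a in zip(row, arow)] for row, arow in zip(frame, appearance)]
        for frame in video[1:]
    ]
    return appearance, motion


def reconstruct(appearance: Frame, motion: List[Frame]) -> Video:
    """Invert the split by adding each motion delta back onto the appearance."""
    frames = [appearance]
    for delta in motion:
        frames.append(
            [[a + d for a, d in zip(arow, drow)] for arow, drow in zip(appearance, delta)]
        )
    return frames


# Demo: a 3-frame, 4x4 clip in which one bright pixel moves right each frame.
demo = []
for t in range(3):
    frame = [[0.0] * 4 for _ in range(4)]
    frame[1][t] = 1.0
    demo.append(frame)

appearance, motion = split_appearance_motion(demo)
assert reconstruct(appearance, motion) == demo
print([len(level) for level in spatial_pyramid(appearance, levels=3)])  # [1, 2, 4]
```

In this toy clip the motion frames are almost entirely zeros, which hints at why handling appearance and motion separately can save work: the static content is described once, and only the changes need to be modeled per frame.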

"This isn't just incremental improvement," explains Dr. Li Wen, lead researcher on the project. "We're fundamentally changing how AI understands moving images."

Smart Learning Saves Time and Power

The team relied on several efficiency techniques:

- Leveraging existing AI models through knowledge inheritance

- Using pre-trained components as building blocks

- Focusing computational power where it matters most

The result? Training times cut nearly in half without sacrificing quality.
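In its simplest form, "knowledge inheritance" means warm-starting a new model from a pretrained one instead of training from random weights. The sketch below shows one common way to do that, assumed here for illustration; the article does not say exactly how InfinityStar reuses pretrained weights. Parameters whose names and sizes match are copied from a hypothetical image model, while new components (here a made-up `temporal_attn` layer) keep their fresh initialization.

```python
import random
from typing import Dict, List, Tuple

Params = Dict[str, List[float]]  # parameter name -> flat weight vector


def init_params(shapes: Dict[str, int], seed: int = 0) -> Params:
    """Randomly initialize each named parameter as a flat vector of given size."""
    rng = random.Random(seed)
    return {name: [rng.gauss(0.0, 0.02) for _ in range(size)] for name, size in shapes.items()}


def inherit_weights(target: Params, pretrained: Params) -> Tuple[Params, List[str], List[str]]:
    """Copy pretrained weights into `target` wherever name and size match.

    Returns the warm-started parameters plus the inherited and fresh names.
    """
    merged: Params = {}
    inherited: List[str] = []
    fresh: List[str] = []
    for name, value in target.items():
        source = pretrained.get(name)
        if source is not None and len(source) == len(value):
            merged[name] = list(source)  # reuse what the pretrained model learned
            inherited.append(name)
        else:
            merged[name] = value         # new or reshaped component keeps its init
            fresh.append(name)
    return merged, inherited, fresh


# Hypothetical layer names: the video model adds a temporal layer to the image model.
image_model = init_params({"embed": 8, "block0.attn": 16, "head": 4}, seed=1)
video_model = init_params(
    {"embed": 8, "block0.attn": 16, "block0.temporal_attn": 16, "head": 4}, seed=2
)
warm, inherited, fresh = inherit_weights(video_model, image_model)
print(inherited)  # ['embed', 'block0.attn', 'head']
print(fresh)      # ['block0.temporal_attn']
```

Splitting parameters into `inherited` and `fresh` also suggests one way to focus compute where it matters: a training schedule could, for example, update only the fresh parameters at first, though this is a general pattern rather than a documented detail of InfinityStar.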

What This Means for Creators

For content creators struggling with render times, InfinityStar could be revolutionary:

- Rapid prototyping of video concepts

- Faster iterations during editing

- Lower hardware requirements

The framework handles everything from simple animations to complex scene transitions, all through one unified system.

The GitHub repository (github.com/FoundationVision/InfinityStar) has already drawn promising community engagement since yesterday's launch.

Key Points:

- ⏱️ 58-second generation for 5-second HD clips

- 🧩 Spatiotemporal separation improves quality

- ⚡ Efficient training reduces computing costs

- 🎨 Unified system handles multiple visual tasks